Are AI Detectors Accurate? The Definitive Guide

So, you're wondering if AI detectors are actually accurate. The short answer is yes, but that "yes" comes with some major asterisks. These tools are pretty good at spotting text that's 100% AI-generated, but their reliability takes a nosedive when humans have edited that text. Even worse, they can sometimes flag perfectly human writing as AI-made—a serious problem we call a false positive.

The Real Answer on AI Detector Accuracy

To get to the bottom of AI detector accuracy, we have to move past a simple "yes" or "no." The whole field is a constant game of cat and mouse. Every time a new model like GPT-4 gets better at sounding human, the detection tools have to scramble to adapt and figure out the new "tells." This means a detector's performance isn't a fixed guarantee; it's a moving target.

It helps to think of an AI detector less like a perfect, definitive lab test and more like a skilled detective hunting for clues. It's trained to spot the subtle, almost invisible patterns that machines tend to leave behind. The result you get isn't a final verdict but a confidence score—basically, an educated guess based on the evidence it found.

Why Perfect Accuracy Is Impossible

Chasing 100% accuracy is a bit of a fool's errand, and there are a few good reasons why it's still out of reach. For one, AI-generated text can be tweaked and polished by a human, blurring the lines between machine patterns and human creativity. On the flip side, some human writing—especially if it's very formal or follows a rigid structure—can accidentally look predictable enough to trigger a false positive.

This all points to the real purpose of these tools. Their job isn't to be an infallible judge and jury, but to act as a signal that something might be worth a closer look.

Here's how to think about it in the real world:

- It's an Indicator, Not a Judge: A high AI score is a reason to investigate, not a reason to jump to conclusions.

- Context Is Crucial: You always have to consider the writer's known style and the type of content you're looking at.

- Human Oversight Is Essential: The final call on where a piece of text came from should always, always be made by a person.

The best AI detectors are really just powerful assistants. They're great at flagging text that looks suspicious, but they struggle in the gray area of human-AI collaboration. That’s why using them responsibly is so important.

At the end of the day, AI detectors are getting better all the time, but their results demand critical thinking. They’re a powerful resource in the quest for authenticity, but they are far from perfect.

How AI Detectors Spot Digital Fingerprints

To get a handle on the accuracy of AI detectors, we have to first peek under the hood at what they're actually looking for. These tools don't "read" for meaning like you or I would. Think of them more like linguistic forensic analysts, searching for the subtle mathematical patterns that AI models tend to leave behind.

I like to think of human writing as a sprawling, unpredictable city map—full of winding roads, unexpected turns, and unique neighborhoods. AI-generated text, in contrast, often looks more like a perfectly planned grid system. It’s efficient and logical, but it just doesn't have that natural, chaotic feel of human creation. Detectors are trained to spot this underlying order.

The Core Clues: Perplexity and Burstiness

Two of the biggest giveaways these tools hunt for are perplexity and burstiness. They sound a bit academic, but the concepts are pretty simple.

Perplexity is really just a measure of how predictable a string of words is. As human writers, we're often wonderfully unpredictable. We use surprising word choices, idioms, and unique turns of phrase. AI, on the other hand, is trained on mountains of data to pick the most statistically probable next word. This makes its writing very smooth, but often formulaic. A low perplexity score signals that the text is too predictable, raising a red flag for AI generation.
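
To make perplexity concrete, here is a minimal Python sketch. It assumes you already have, from some language model, the probability the model assigned to each token that actually appears in the text. The probability lists below are invented for illustration and are not output from any real model or detector. Perplexity is the exponential of the average negative log-probability, so text the model finds highly predictable scores close to 1.

```python
import math

def perplexity(token_probs):
    """Perplexity of a sequence, given the probability a language model
    assigned to each actual next token: exp(mean negative log-probability)."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)

# Hypothetical per-token probabilities, not taken from any real model.
predictable = [0.9, 0.8, 0.85, 0.9, 0.95]   # every word was the "obvious" choice
surprising = [0.4, 0.05, 0.3, 0.1, 0.6]     # several unexpected word choices

print(f"predictable: {perplexity(predictable):.2f}")
print(f"surprising:  {perplexity(surprising):.2f}")
```

The predictable sequence lands near 1 while the surprising one scores several times higher, which is the gap a detector treats as a clue.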

Burstiness looks at the rhythm and flow of the writing. Humans naturally write in bursts. We might follow a long, winding sentence with a short, punchy one to make a point. This constant variation in sentence length and complexity creates a dynamic rhythm. AI models, however, tend to churn out sentences of similar length and structure, creating a flatter, more monotonous cadence that a detector can pick up on.
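
Burstiness has no single agreed formula. One simple proxy, assumed here for illustration, is the coefficient of variation of sentence length (standard deviation divided by mean). The sketch below uses a naive regex sentence splitter, and the function names are hypothetical rather than any detector's API.

```python
import re
import statistics

def sentence_lengths(text):
    # Naive split on terminal punctuation; real tools use proper tokenizers.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Coefficient of variation of sentence length (stdev / mean).
    Higher values mean more mixing of long and short sentences."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

flat = "The tool scans the text. The tool finds the patterns. The tool reports the score."
bursty = ("I wrote the first draft late at night, half asleep, rewriting the same paragraph "
          "until it finally said what I meant. Then I stopped. Done.")

print(f"flat: {burstiness(flat):.2f}  bursty: {burstiness(bursty):.2f}")
```

Three equal five-word sentences score zero, while a long sentence followed by two very short ones scores well above that.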

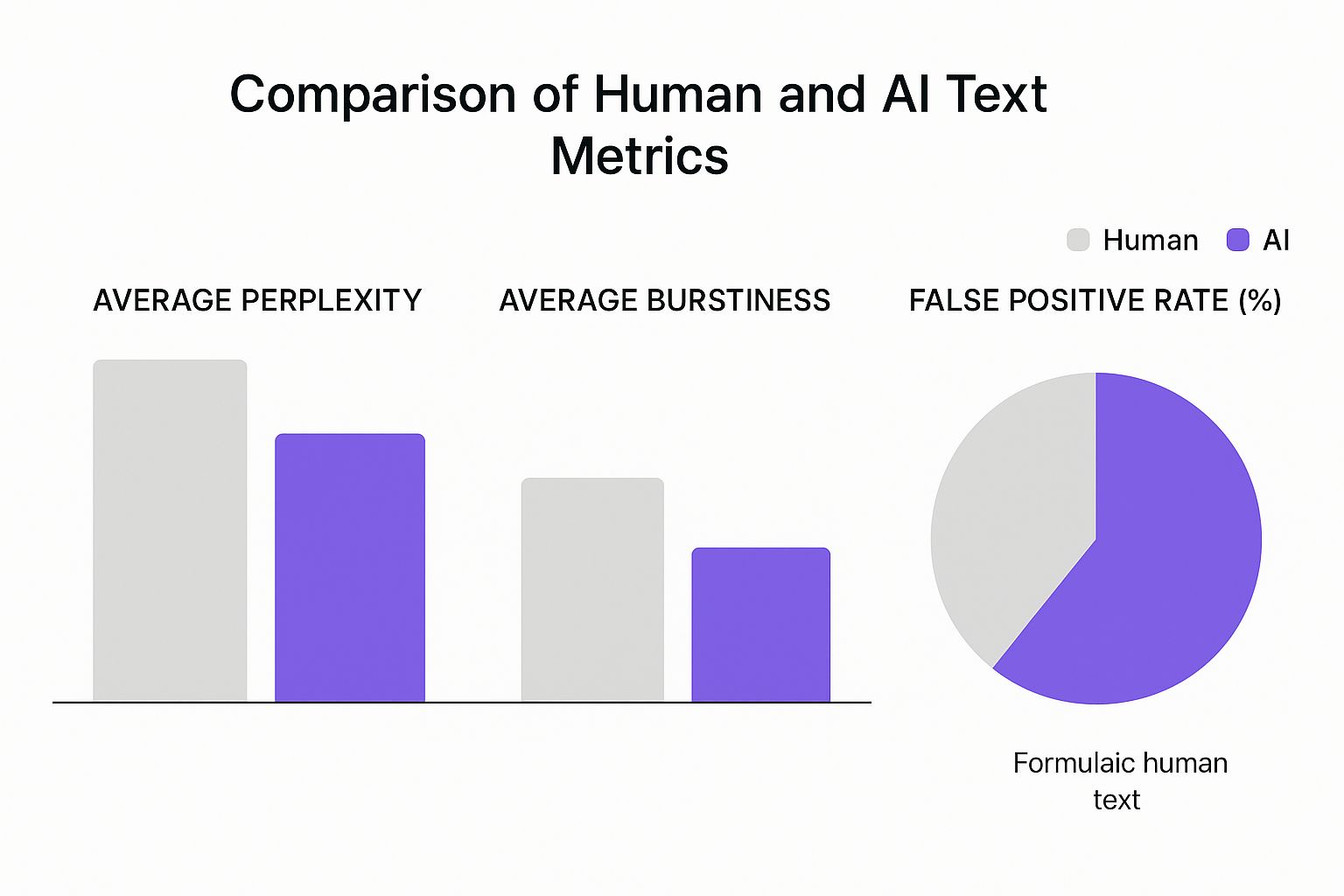

This infographic breaks down how these metrics typically stack up between human and AI-generated content.

You can see that AI text usually has lower perplexity and burstiness. But the key takeaway here is the high false positive rate for formulaic human writing—this points to a major weakness in the system.

Why This Method Isn't Bulletproof

While this pattern-spotting approach is clever, it's far from perfect. It’s exactly why these detectors can get things so wrong and why 100% accuracy is still out of reach.

- Smarter AI Can Evade Detection: The goalposts are always moving. Newer AI models write with more natural variation, and some tools are built specifically to rewrite AI output with human-like burstiness and less common words so that it slips past detectors.

- Formulaic Human Writing Gets Falsely Accused: Anyone writing in a very structured, simple, or repetitive style can trigger a false positive. Think of technical manuals, certain academic papers, or even a list of instructions. This content can naturally have low perplexity and burstiness.

- Non-Native Speakers Are at a Disadvantage: People who learned English as a second language often rely on more structured, foundational sentence patterns. Their writing can be flagged as AI-generated simply because it doesn't have the same "bursty" rhythm a native speaker might use.

At its heart, an AI detector is just a pattern recognition machine. It isn't judging quality, creativity, or originality. It’s simply running the numbers to see how statistically likely it is that a machine wrote the text based on these digital fingerprints.

Getting this part is crucial. It means a detection score isn't a final verdict, but an assessment of probability. This distinction is everything when it comes to interpreting the results responsibly and accepting the tool's built-in limitations.
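
To illustrate why the output is a probability rather than a verdict, here is a toy scorer that feeds the two signals into a logistic function. The weights and bias are made up for this example. A real detector would learn its own features and weights from labelled data and would rely on far more than two signals.

```python
import math

def ai_probability(perplexity, burstiness, w_ppl=-0.08, w_burst=-2.5, bias=3.0):
    """Toy logistic score in (0, 1). The negative weights mean that LOWER
    perplexity and LOWER burstiness push the score toward 1 ("AI-like").
    All weights are hypothetical, not taken from any real detector."""
    z = bias + w_ppl * perplexity + w_burst * burstiness
    return 1.0 / (1.0 + math.exp(-z))

p_ai_like = ai_probability(perplexity=10, burstiness=0.1)     # predictable, flat text
p_human_like = ai_probability(perplexity=60, burstiness=0.9)  # surprising, varied text
print(f"{p_ai_like:.2f} vs {p_human_like:.2f}")
```

Even the "AI-like" input only produces a likelihood. Formal human prose with low perplexity and even sentence lengths would get the same high score, which is exactly how false positives arise.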

Putting Leading AI Detectors to the Test

When we move from theory to reality, things get messy. So, are AI detectors accurate? The honest answer is: it depends. It depends heavily on the tool you're using, what you're asking it to check, and how the AI content was made.

You'll often see developers claim one level of performance, while independent tests tell a completely different story. This gap creates a lot of confusion, so let's break down how these tools actually fail and what the numbers really mean.

False Positives vs. False Negatives

Before you can trust any detector, you need to understand the two ways it can get things wrong. These aren't just technical terms; they represent two very different kinds of failure, each with its own real-world consequences.

- False Positive: This is the big one. It's when a detector flags perfectly human-made content, whether text or an image, as AI-generated. Think of it as a false accusation, which can have serious ramifications in professional or academic environments.

- False Negative: This happens when AI-generated content slips past the detector, which labels it as human. This error undermines the tool's entire purpose, letting synthetic media pass through unchecked.

Many experts argue that a high false positive rate is the more dangerous flaw, as it can unfairly penalize innocent creators and damage reputations. On the flip side, a high false negative rate just makes the tool useless for verification. The perfect detector would have zero of both, but that's a standard no current tool can meet.
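
The two error rates are computed over different groups of test items, which is why one can look good while the other looks bad. Here is a minimal sketch, assuming a labelled test set where you know which items are AI-generated. The counts in the usage lines are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ConfusionCounts:
    true_positives: int   # AI content correctly flagged as AI
    false_negatives: int  # AI content missed (labelled human)
    false_positives: int  # human content wrongly flagged as AI
    true_negatives: int   # human content correctly labelled human

    def false_positive_rate(self) -> float:
        # Share of HUMAN items that were wrongly flagged as AI.
        return self.false_positives / (self.false_positives + self.true_negatives)

    def false_negative_rate(self) -> float:
        # Share of AI items that slipped through as "human".
        return self.false_negatives / (self.false_negatives + self.true_positives)

# Hypothetical evaluation: 100 AI items and 100 human items.
counts = ConfusionCounts(true_positives=80, false_negatives=20,
                         false_positives=5, true_negatives=95)
print(f"FPR: {counts.false_positive_rate():.0%}  FNR: {counts.false_negative_rate():.0%}")
```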

A Look at the Data

Real-world testing shows a massive performance gap between different tools. For instance, a text detector that OpenAI briefly released in 2023 correctly identified only 26% of AI-written text and, more worryingly, wrongly flagged 9% of human writing as AI before the company withdrew it.

On the other end of the spectrum, some commercial detectors now claim accuracy as high as 99%. One tool, available at https://aiimagedetector.com, even reports 92% accuracy against content specifically designed to fool detectors.

However, it's wise to be skeptical of marketing claims. The U.S. Federal Trade Commission has even called out some providers for inflating their success rates.

A detector’s proclaimed accuracy score means very little without context. You must also know its false positive rate and how it performs on content that has been edited or paraphrased—that’s where most tools begin to struggle.
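
A quick calculation shows how much a headline accuracy figure can hide. This sketch assumes a test set in which a fixed share of items is AI-generated (50/50 by default). That mix is an assumption, because OpenAI did not publish a single accuracy figure computed this way. With the 26% detection rate and 9% false positive rate quoted above, accuracy comes out at about 58.5%. Meanwhile, two hypothetical detectors can post identical accuracy while one wrongly flags more than twice as many human writers.

```python
def accuracy(tpr: float, fpr: float, share_ai: float = 0.5) -> float:
    """Overall accuracy on a test set in which `share_ai` of the items are
    AI-generated. tpr = share of AI items caught; fpr = share of human
    items wrongly flagged as AI."""
    return share_ai * tpr + (1 - share_ai) * (1 - fpr)

# OpenAI's 2023 classifier (26% caught, 9% false positives), assuming a 50/50 mix.
print(f"OpenAI classifier: {accuracy(0.26, 0.09):.1%}")

# Two hypothetical detectors with the same accuracy but different false positive rates.
detector_a = accuracy(tpr=0.96, fpr=0.02)
detector_b = accuracy(tpr=0.99, fpr=0.05)
print(f"A: {detector_a:.1%}  B: {detector_b:.1%}")
```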

To give you a clearer view of the landscape, here’s how some of the most prominent tools have stacked up in various tests.

AI Detector Performance Comparison

The table below summarizes the performance of several key players, based on both their own claims and third-party analysis. Notice the wide discrepancies, especially when it comes to flagging human work.

| Tool/Provider | Reported Accuracy | Observed False Positives | Context/Notes |
|---|---|---|---|
| OpenAI (2023 Text Tool) | 26% of AI text correctly flagged | 9% on human text | This tool was retired due to its low accuracy and high false positive rate, highlighting the difficulty of the task. |
| Turnitin | Claims 98% overall | Varies; some studies show significant rates on human writing | Primarily used in academia. Its results have been contested, with some universities dropping the feature. |
| Originality.ai | Up to 99% | Low, but not zero. Can be higher on non-native English. | Known for being one of the more aggressive and accurate detectors, but can still be fooled. |
| Winston AI | 99.6% on GPT-4 | Reported as low, but testing is ongoing. | Positions itself as a highly reliable tool for educators and content publishers. |
| Copyleaks | Claims 99.1% | Generally low in controlled tests. | Offers broad language support and an API, making it a popular choice for integration. |

This data just reinforces a crucial point: you can't take a developer's marketing claims at face value. The performance of even the best AI detectors can plummet when faced with hybrid human-AI content or images that have been subtly edited.

Ultimately, these numbers tell us that while the technology is getting better, it is far from perfect. These tools are best used as an initial screening step—one data point among many that still requires a thoughtful human in the loop to make the final call.

AI Detection in the High-Stakes World of Academia

Nowhere is the question of AI detector accuracy more important than in education. For a student, a single accusation of academic dishonesty can derail their future before it even starts. For a university, its entire reputation is built on integrity. This has turned the academic world into the ultimate proving ground for these detection tools.


Academic integrity platforms like Turnitin are at the center of this, processing an absolutely staggering number of student papers. Between 2023 and 2024, its AI detection system scanned roughly 200 million papers. This massive scale gives us an incredible look into how students are using AI and how well the detectors are keeping up.

The data from that period showed that 11% of student papers had at least 20% AI-generated writing. That's a huge number, and it signals a fundamental shift in how students research and write.

The Nuance of Accuracy in Education

When a tool claims high accuracy, that sounds great. But for an individual student, the reality is much more personal. The biggest fear is the false positive—when a detector incorrectly flags human-written work as AI-generated. Even a tiny error rate can cause immense problems when you're dealing with millions of students.
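
Here is a rough illustration of how a small error rate scales. All inputs are hypothetical: one million papers, an 11% share with substantial AI writing (borrowed from the Turnitin figure above purely as an illustrative base rate), a 90% detection rate and a 1% document-level false positive rate. Under those assumptions roughly 8,900 human-written papers would be flagged, and about 8% of all flags would point at honest work.

```python
def flag_outcomes(n_papers: int, share_ai: float, tpr: float, fpr: float):
    """Return (human papers wrongly flagged, precision of the flags).
    Precision = share of all flagged papers that really contain AI text."""
    ai_papers = n_papers * share_ai
    human_papers = n_papers - ai_papers
    true_flags = ai_papers * tpr        # AI papers correctly flagged
    false_flags = human_papers * fpr    # honest papers wrongly flagged
    precision = true_flags / (true_flags + false_flags)
    return false_flags, precision

# All inputs are illustrative assumptions, not Turnitin's published rates.
false_flags, precision = flag_outcomes(1_000_000, share_ai=0.11, tpr=0.90, fpr=0.01)
print(f"~{false_flags:,.0f} human papers flagged; {1 - precision:.1%} of flags are wrong")
```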

Turnitin, for example, reports an overall accuracy rate of 98%. That seems impressive, but they also acknowledge a 4% false positive rate at the sentence level. This means that while the tool is generally right about the whole document, it can still misidentify individual human-written sentences, leaving educators in a tough spot. Understanding how these tools handle data is key to ensuring fairness. You can learn about our commitment to privacy and see how we approach this.

This data highlights a critical distinction: macro accuracy is not the same as micro reliability. A tool can be right most of the time on a huge scale and still produce errors that dramatically impact individual lives.
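
The gap between macro accuracy and micro reliability can be sketched with the 4% sentence-level figure. This sketch assumes, unrealistically, that each sentence is misjudged independently (real errors may cluster), so treat it as a rough illustration only. Under that assumption, a fully human 50-sentence essay would have about two sentences flagged on average and roughly an 87% chance of at least one.

```python
def prob_any_false_flag(n_sentences: int, sentence_fpr: float = 0.04) -> float:
    """Chance that at least one sentence of a fully human essay is flagged,
    ASSUMING each sentence is misjudged independently (a simplification)."""
    return 1 - (1 - sentence_fpr) ** n_sentences

essay_sentences = 50
expected_flags = essay_sentences * 0.04  # average number of wrongly flagged sentences
print(f"expected flags: {expected_flags:.1f}, "
      f"P(at least one): {prob_any_false_flag(essay_sentences):.0%}")
```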

In academia, the goal isn't just to catch AI-generated text; it's to do so without wrongly penalizing honest students. This dual responsibility makes the margin for error incredibly slim and places immense pressure on detection tools to refine their methods.

The Challenge of Mixed-Source Writing

Today's academic paper is rarely a simple case of all-human or all-AI. Students frequently use AI to brainstorm ideas, structure an outline, or polish a difficult paragraph. This creates a hybrid document that is notoriously tricky for any detector to analyze.

Here are some of the key challenges detectors face in an academic setting:

- Human-AI Collaboration: When a student takes an AI-generated draft and heavily edits it, those digital "fingerprints" start to fade, making a clear-cut judgment nearly impossible.

- Contextual Sensitivity: Research has found that 54% of incorrectly flagged sentences were located right next to actual AI-generated text. This suggests the detector's sensitivity can sometimes "bleed" over, misjudging the surrounding human content.

- Widespread Adoption: Global statistics for 2024 show that 86% of students use AI tools on a regular basis. Detectors are in a constant race to adapt to new models and new ways students are using them. You can discover more insights about these academic AI usage trends.

Ultimately, in the world of education, an AI detection score should never be the final word. It's just one piece of evidence. It's a signal that should start a conversation—a prompt for a more thoughtful, human-led discussion about a student's work and their creative process.

Why Our Brains Struggle to Spot AI Content

When we talk about AI detector accuracy, we usually point the finger at the technology. But what about us? Why do we even need these tools? It turns out, we’re surprisingly bad at telling the difference between human and AI-created work.

Our intuition, that gut feeling we rely on, often leads us astray when we're looking at something made by a sophisticated AI. We might hunt for clunky phrasing or weird factual mistakes, but today's models are incredibly polished. They can churn out text and images that feel so natural and convincing that they sail right past our internal BS detectors.

This isn't just a guess; the data confirms it. Our ability to spot AI content, especially images and video, is often just a little better than a 50/50 coin toss. This gap in our own perception is exactly why we need automated tools, but it also shows just how tough this problem is for everyone involved.

The Limits of Human Perception

Our brains are pattern-matching machines, built to make sense of chaos. But that very strength becomes a weakness when we’re up against AI. We’re suckers for cognitive biases, often assuming that something complex, creative, or emotionally powerful must have been made by a person. AI models know this and are designed to mimic the exact styles we associate with human creativity.

A recent large-scale study on AI-generated images put our struggle into sharp focus. Across more than 193,000 images, people correctly identified only about 63% of the AI-generated ones. Counting both real and AI images, overall accuracy was a measly 62%, only about 12 percentage points better than a coin flip.

Interestingly, we were best at spotting AI-generated people. Our brains are hardwired to notice even the tiniest oddities in faces. But when it came to landscapes or cityscapes? Our performance tanked. These findings echo what we've seen in video deepfake detection, where human accuracy also hovers around 60%. You can explore the full findings to see just how tricky this has become.

This all highlights a critical reality.

While automated detectors have their own set of problems, they’re built to catch the subtle, mathematical fingerprints our eyes and brains completely miss. Our own unreliability is what makes these tools a necessary, if imperfect, partner.

At the end of the day, modern AI has simply outpaced our natural detection skills. We can't just rely on a vague feeling that something seems "off" anymore. That's the real role of an AI detector: to give us a data-driven starting point for a deeper, human-led review and to put the scale of the problem into a realistic perspective.

How to Use AI Detector Results Responsibly

After digging into the nuts and bolts of AI detection, one thing is crystal clear: the score is not a final verdict. It’s a starting point. When people ask if these detectors are truly accurate, the honest answer is they're accurate enough to raise a flag, but not reliable enough to pass judgment. Using these results responsibly means treating them as the beginning of an investigation, not the end of one.

A high AI probability score should never, ever lead to an immediate accusation. The risk of false positives is simply too high, especially for images with common subjects or simple compositions. You can’t risk damaging someone's reputation or academic standing based on an algorithm's guess. Instead, a high score should trigger a more thoughtful, human-led review of the image in question.

A Framework for Responsible Review

So, what do you do when a report flags an image with a high AI score? Before jumping to conclusions, it’s best to follow a more measured and fair process. This approach respects the limits of the technology while helping you maintain your own integrity.

Your first move should be to consider the bigger picture. Does the image's style match the creator's other work? Does the content show a level of creative nuance or subtle imperfection that AI often struggles to replicate? These initial questions can give you far more clarity than a simple percentage score.

Here are the key steps to take:

- Examine the Image Holistically: Look for other tell-tale signs of AI generation. Are there weird artifacts, unnatural textures, or strange inconsistencies in the lighting or shadows?

- Look for Human Elements: Search for unique artistic choices, personal touches, or the subtle flaws that are characteristic of human creation but not typical of a flawless AI output.

- Open a Dialogue: This is the most important step. Have a conversation with the creator. Ask them about their creative process, their tools, and how they developed their concept. A simple discussion is often the quickest path to the truth.

An AI detection score is just one piece of the puzzle. Responsible use means combining that data point with contextual analysis, critical thinking, and direct communication before reaching any conclusion.
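
The framework above can be written down as a simple triage rule in which no score, however high, maps to an accusation. The thresholds and names below are hypothetical placeholders, not settings from any particular tool. The design choice that matters is that the strongest possible outcome is a conversation with the creator.

```python
from enum import Enum

class Action(Enum):
    NO_ACTION = "no action"
    HUMAN_REVIEW = "holistic human review"
    REVIEW_AND_DIALOGUE = "human review plus a conversation with the creator"

def triage(ai_score: float, review_threshold: float = 0.5,
           dialogue_threshold: float = 0.8) -> Action:
    """Map a detector score in [0, 1] to a next step. Hypothetical thresholds;
    deliberately has no outcome that amounts to an accusation."""
    if not 0.0 <= ai_score <= 1.0:
        raise ValueError("ai_score must be between 0 and 1")
    if ai_score >= dialogue_threshold:
        return Action.REVIEW_AND_DIALOGUE
    if ai_score >= review_threshold:
        return Action.HUMAN_REVIEW
    return Action.NO_ACTION

for score in (0.2, 0.65, 0.97):
    print(score, "->", triage(score).value)
```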

Ultimately, these tools are powerful aids, but they are no substitute for human judgment. By understanding their role and their limitations, you can use their strengths to uphold standards without being misled by their flaws. For a deeper look into our tools and options, you can explore the AI Image Detector pricing and features.

Got Questions About AI Detector Accuracy? Let's Clear Things Up.

Even after diving into the details, you probably still have some questions floating around. That's completely normal. This is tricky technology, and the conversation around it seems to change by the week. Let's tackle some of the most common questions head-on to give you a clearer picture.

Can AI Detectors Actually Be 100% Accurate?

In a word, no. 100% accuracy just isn't possible right now for any AI detector on the market. Think of it as a constant cat-and-mouse game: as AI models get scarily good at mimicking human writing, the detectors have to scramble to keep up.

There are a few big reasons why perfection is always just out of reach:

- The Human Touch: When someone edits AI-generated content—even just a little—they're essentially smudging the digital fingerprints the detector is trying to find.

- Rewriting Tools: Modern paraphrasing tools can rephrase AI text so thoroughly that it slips right past many detectors.

- Predictable Human Writing: Sometimes, our own writing can be a bit formulaic. Think of technical manuals or simple step-by-step guides. This predictable structure can sometimes trigger a false alarm.

The best way to see a detector's score is as a probability, never a final verdict.

What's a "False Positive" and Why Is It Such a Big Deal?

A "false positive" is when a detector incorrectly flags a piece of human writing as being generated by AI. This is, without a doubt, the most serious mistake a detector can make. It can lead to wrongful accusations of cheating or plagiarism, and those accusations have real, damaging consequences for people.

A false positive isn't just a technical glitch; it's a machine making a false accusation. This is exactly why human judgment and critical thinking are non-negotiable when looking at a detector's results.

This is a particularly tough issue for non-native English speakers or anyone with a more direct, structured writing style, as their work is statistically more likely to get misidentified.

Do These Detectors Work on the Latest Models like GPT-4 and Gemini?

Yes, for the most part. The top-tier detectors are constantly being trained on the digital signatures of all the major players, including models from OpenAI, Google (like Gemini), and Anthropic (like Claude). The teams behind these tools work hard to update them as soon as new AI models are released.

That said, their effectiveness isn't always uniform. A tool might be fantastic at spotting text from GPT-4 but less reliable against a brand-new or less common model it hasn't seen as much. This is another reason why the question "Are AI detectors accurate?" never has a simple, static answer.

Ready to verify your images with confidence? The AI Image Detector uses advanced analysis to give you a clear, reliable confidence score in seconds. Test it for free and make informed decisions. Check Your Image Now at AI Image Detector