Fake News Detection: A Practical Guide to Spotting Misinformation

Fake news detection is, at its core, the skill of telling fact from fiction online. It’s about looking past the flashy headline and digging into a story's content, source, and the context surrounding it to figure out if it's the real deal. In today's world, it's less of a niche skill and more of a basic survival tool for navigating the internet.

Why We Urgently Need Fake News Detection

Think about a wild headline that pops up in your social media feed—maybe a "miracle cure" for a serious disease. Within hours, it's shared by thousands of people. Some might even start making real-world health decisions based on it before anyone has a chance to ask, "Wait, is this actually true?" This isn't just a thought experiment; it's a daily reality that shows just how high the stakes have become.

This constant flood of bogus content chips away at our trust in everything we read, from critical public health updates to political news. It's not a localized problem anymore, either. A large Pew Research Center survey found that a median of over 80% of adults across 35 countries see fabricated information as a major threat. A closer look at these global perceptions of misinformation reveals just how widespread this challenge is.

Distinguishing Misinformation from Disinformation

To really get a grip on this problem, you have to understand the intent behind the bad information. Not all fake news is the same. The big difference is whether the person sharing it meant to deceive people or not.

It helps to break it down like this:

- Misinformation is like accidentally giving someone the wrong directions. It's false information, but the person sharing it genuinely believes it's true and has no intention to cause harm.

- Disinformation is the opposite. It’s like deliberately sending someone down a dead-end street just to mess with them. This is false information created and spread specifically to deceive, manipulate, or hurt someone.

This distinction is critical because disinformation is usually crafted to be more persuasive and is often part of a larger, coordinated effort to influence public opinion or stir up trouble.

The core challenge of fake news detection is that it requires us to fight against our own biases. We are naturally drawn to information that confirms what we already believe, making us vulnerable to content designed to provoke an emotional reaction rather than a rational one.

The Role of Technology in the Problem and Solution

Technology, especially AI, is a classic double-edged sword here. On one hand, it's fueling the problem by making it easier than ever to create incredibly realistic fake content. The rise of what is known as synthetic media means anyone can generate convincing images, audio, and even videos of things that never actually happened, which makes spotting fakes with the naked eye increasingly difficult.

But on the other hand, that same technology is giving us powerful new ways to fight back. Smart algorithms can now scan text for tell-tale signs of manipulation, analyze pixels in an image for digital tampering, and track a story's digital footprints as it spreads online. For journalists, fact-checkers, and anyone dedicated to separating fact from fiction, these tools are becoming absolutely essential. By understanding both the threats and the tools at our disposal, we can start to build a more informed and resilient society.

How Misinformation Spreads Like Wildfire Online

To really get a handle on fake news detection, you first have to understand why a lie can race around the globe while the truth is still tying its shoes. The problem is baked into the very design of our modern information ecosystem, which has shifted from vetted, traditional media to the wild, algorithm-fueled world of social platforms.

In this new arena, the only thing that really matters is engagement—likes, shares, and especially those angry-face reactions. The algorithms that run these platforms are built to serve us more of whatever keeps us scrolling, and nothing grabs our attention quite like sensational, emotionally charged content. Accuracy is rarely part of the equation.

This creates the perfect environment for a false narrative, deliberately engineered to provoke a strong reaction, to completely dominate a carefully researched, nuanced news report. The entire system rewards speed and emotion over accuracy and verification.

The Psychology Behind Virality

But it’s not just the algorithms. Misinformation spreads so effectively because it hijacks the powerful psychological shortcuts our brains use every day. These mental tripwires make us susceptible to deception, often without us even noticing.

Three psychological hooks, in particular, make fake news so sticky:

- Confirmation Bias: We all have a natural tendency to believe information that confirms what we already think. When a fake story aligns with our worldview, it just feels right, so we’re far more likely to share it without a second thought.

- Emotional Triggers: Content that makes us angry, fearful, or outraged is practically guaranteed to be shared. Disinformation creators are masters at crafting headlines and stories that push these emotional buttons.

- Novelty Appeal: Research has shown that false stories are often more surprising and unusual than real news. Our brains are hardwired to notice anything new or different, which gives fabricated content a built-in advantage in the fight for our attention.

The real power of misinformation lies in its ability to short-circuit our critical thinking. By appealing directly to our biases and emotions, it bypasses the rational part of our brain that would normally pause and question what we're seeing.

This psychological vulnerability is made worse by a huge shift in how we get our news. The Reuters Institute's Digital News Report paints a clear picture: as traditional news sources fade, social media and video platforms are taking over, creating fertile ground for unvetted content. With overall trust in news hovering at a low 40%, more than half of respondents say they worry about telling what's real from what's fake online. The concern is significant enough that the World Economic Forum now lists misinformation as a top global risk. You can explore the full story on changing news consumption habits for a deeper dive.

Accelerants of Deception

Throwing gasoline on this fire are two powerful forces: AI-generated content and influential social media accounts. AI can now spin up incredibly realistic (but completely fake) images, articles, and videos in seconds, flooding the internet with plausible-looking lies.

Then, when this AI-generated content gets picked up and amplified by an influencer with a massive following—whether they’re a bad actor or just an unwitting participant—a single piece of disinformation can reach millions of people in a few short hours. This blend of automated creation and high-speed distribution makes manual fake news detection feel like trying to catch rain in a bucket. We’re facing a scale of deception that demands equally powerful tools to fight back.

Understanding the Core Detection Techniques

Once we know how misinformation travels, we can start talking about how to stop it. Truly effective fake news detection doesn't rely on a single silver bullet. It’s more like a detective's investigation, combining different analytical methods to build a solid case.

Each technique gives us a unique lens to look through. Think of it this way: you wouldn't solve a crime with just one clue. The same goes for misinformation. By examining a story from every angle—the words it uses, the images it shows, where it came from, and how it's spreading—we get a much clearer picture of whether it’s legitimate or designed to deceive.

Textual and Linguistic Analysis

First things first, we look at the words on the page. This is where automated systems comb through an article's text, hunting for the subtle red flags that even a careful human reader might overlook. It's like a detective checking a suspect's alibi—it might sound convincing at first, but the inconsistencies start to show under pressure.

These systems are trained to pick up on a few key signals:

- Sensational and Emotional Language: Fake news often leans on over-the-top, emotionally charged words like "shocking," "outrageous," or "secret." You'll also see excessive punctuation (!!!) meant to provoke a gut reaction instead of thoughtful consideration.

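- Grammatical Errors and Odd Phrasing: Sloppy writing, frequent spelling mistakes, and awkward sentences can be a dead giveaway. This often points to a non-professional source or content churned out by a crude automated tool. The reverse doesn't hold, though: modern AI writing tools produce fluent, error-free text, so clean grammar alone is no proof of credibility.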

- Overly Simplistic or Biased Wording: Real journalism usually explores nuance. Disinformation, on the other hand, often paints a simplistic, black-and-white picture to push a specific agenda.

The big idea here is that deceptive writing leaves a fingerprint. The style, tone, and complexity of the language offer powerful clues about its origin and intent. It’s often the clearest way to tell a well-researched report from a piece of hastily crafted propaganda.
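To make those signals concrete, here is a minimal Python sketch that counts a few of them in a headline. The word list, the function name `sensationalism_signals`, and the choice of signals are illustrative assumptions, not a production detector; real systems learn far richer cues from labeled data, and a high count is a reason to look closer, not proof of fakery.

```python
import re

# Hypothetical word list for illustration; real systems learn cues like these from data.
SENSATIONAL_WORDS = {"shocking", "outrageous", "secret", "miracle", "unbelievable"}


def sensationalism_signals(text: str) -> dict:
    """Count a few surface-level red flags in a piece of text.

    These are crude heuristics, not a verdict: satire, direct quotes,
    and legitimate breaking news can trip them too.
    """
    words = re.findall(r"[A-Za-z']+", text)
    lowered = [w.lower() for w in words]
    return {
        "word_count": len(words),
        # Emotionally loaded vocabulary from the list above
        "sensational_words": sum(1 for w in lowered if w in SENSATIONAL_WORDS),
        # Runs of two or more exclamation marks, e.g. "!!" or "!!!"
        "exclamation_runs": len(re.findall(r"!{2,}", text)),
        # Fully capitalized words of 3+ letters, e.g. "SHOCKING"
        "all_caps_words": sum(1 for w in words if len(w) >= 3 and w.isupper()),
    }


if __name__ == "__main__":
    headline = "SHOCKING: The secret miracle cure doctors won't tell you about!!!"
    print(sensationalism_signals(headline))
    # {'word_count': 10, 'sensational_words': 3, 'exclamation_runs': 1, 'all_caps_words': 1}
```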

Image and Video Forensics

With deepfakes and AI-generated visuals becoming commonplace, checking the authenticity of images and videos is absolutely essential. This process is like putting a photograph under a digital microscope to search for signs of manipulation. Even the slickest forgeries can leave behind tiny digital artifacts that specialized tools can sniff out.

Visual forensics is all about spotting anomalies the naked eye would miss. If you want to dive deeper, our guide on how AI detectors spot AI-generated content explains the technology in detail.

Here are the key methods at play:

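- Error Level Analysis (ELA): This technique re-saves a JPEG image at a known compression level and compares the result with the original. Areas that were pasted in or edited after the fact often show a different error level from the rest of the image, although the results take some experience to interpret.

- Metadata Examination: Digital photos often contain hidden data (known as EXIF data) that can reveal the camera model, the date the photo was taken, and even GPS coordinates. Checking this info for consistency can expose a fake, but metadata is easily stripped or edited, and many social platforms remove it on upload, so missing EXIF data proves nothing on its own.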

- Reverse Image Search: A simple but incredibly powerful tool. It helps you find the original source of an image, often showing if an old photo is being recycled in a new, misleading context.
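Google Images and similar services don't publish exactly how they match images, but a common building block for near-duplicate matching is perceptual hashing. The sketch below implements a simple "average hash" (aHash) in plain Python, assuming the image has already been shrunk to a tiny grayscale grid; the function names and the distance threshold are hypothetical choices for illustration.

```python
from typing import List

Grid = List[List[int]]  # grayscale pixel values, 0-255 (assumed input format)


def average_hash(pixels: Grid) -> int:
    """Return a simple 'average hash' of a small grayscale image.

    Assumes the image was already downscaled to a small grid (e.g. 8x8).
    Each bit is 1 if that pixel is brighter than the image's mean.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def looks_like_same_image(a: Grid, b: Grid, max_distance: int = 5) -> bool:
    # Small distances usually survive mild recompression or brightness changes.
    return hamming_distance(average_hash(a), average_hash(b)) <= max_distance


if __name__ == "__main__":
    original = [[10, 20, 200, 210], [15, 25, 205, 215],
                [12, 22, 198, 208], [11, 21, 202, 212]]
    brighter = [[p + 3 for p in row] for row in original]  # e.g. a re-edited copy
    print(looks_like_same_image(original, brighter))  # True
```

Because the hash only records which pixels are brighter than average, a uniformly brightened copy of an old photo still produces the same hash, which is the kind of match that exposes a recycled image.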

Metadata and Provenance Tracking

Moving beyond the content itself, we can learn a ton by following a story's digital breadcrumbs. This technique, called provenance tracking, is all about tracing a piece of information back to its source to see where it came from and how it has morphed over time.

This line of inquiry answers critical questions. For instance, was the article published by a news organization with a long history of credible reporting? Or did it pop up on a brand-new website with no reputation to speak of? Sometimes, the domain name is even designed to mimic a well-known source to trick you. This digital chain of custody provides crucial context.
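One provenance check you can automate is spotting domains that imitate a known outlet. The sketch below uses Levenshtein edit distance to flag near misses; the allowlist, the two-edit threshold, and the helper names are assumptions for illustration, and a real check would also look at registration date and site history.

```python
def levenshtein(a: str, b: str) -> int:
    """Classic edit distance: insertions, deletions, substitutions each cost 1."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


# Hypothetical allowlist for illustration; a real one would be curated.
KNOWN_OUTLETS = ["reuters.com", "apnews.com", "bbc.co.uk"]


def lookalike_of(domain: str, max_edits: int = 2):
    """Return the known outlet this domain appears to imitate, or None.

    An exact match is the real site; a near miss (1-2 edits) is suspicious.
    """
    domain = domain.lower().removeprefix("www.")  # Python 3.9+
    for known in KNOWN_OUTLETS:
        if 0 < levenshtein(domain, known) <= max_edits:
            return known
    return None


if __name__ == "__main__":
    for d in ["reuters.com", "reuterss.com", "apnevvs.com", "example.org"]:
        print(d, "->", lookalike_of(d))
```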

Social Network and Propagation Analysis

Finally, one of the most powerful ways to spot a coordinated campaign is to watch how information moves through social networks. This method zeroes in on the behavior of the accounts sharing the content, not just the content itself. It's like tracking a virus outbreak to find "patient zero" and the super-spreaders.

Analysts look for patterns that scream "inauthentic activity." This could be a network of bots sharing the same link thousands of times in just a few minutes, or newly created accounts that immediately start blasting out politically charged content at an unnatural pace. These social signals are often the clearest sign that a story is part of an organized disinformation campaign.
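The burst pattern described above can be sketched as a sliding-window check over share events. This is a simplified illustration: the `Share` record, the five-minute window, the 50-account minimum, and the "newer than seven days" cutoff are all hypothetical parameters, not values from any real platform.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Share:
    account: str
    account_created: datetime
    shared_at: datetime
    url: str


def flag_bursts(shares, window=timedelta(minutes=5), min_accounts=50,
                new_account_age=timedelta(days=7), min_new_fraction=0.5):
    """Flag URLs pushed by many distinct accounts within a short window,
    where a large share of those accounts were only recently created.

    All thresholds are illustrative assumptions, not tuned values.
    """
    by_url = defaultdict(list)
    for s in shares:
        by_url[s.url].append(s)

    flagged = set()
    for url, items in by_url.items():
        items.sort(key=lambda s: s.shared_at)
        start = 0
        for end in range(len(items)):
            # Move the window's left edge until it spans at most `window`.
            while items[end].shared_at - items[start].shared_at > window:
                start += 1
            accounts = {s.account: s for s in items[start:end + 1]}
            if len(accounts) < min_accounts:
                continue
            # How many of these accounts were created shortly before sharing?
            new = sum(1 for s in accounts.values()
                      if s.shared_at - s.account_created < new_account_age)
            if new / len(accounts) >= min_new_fraction:
                flagged.add(url)
                break
    return flagged
```

Requiring both conditions is a deliberate design choice: a story that genuinely goes viral among long-standing accounts should not be flagged just for spreading fast.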

To make these concepts easier to grasp, here’s a quick breakdown of how each technique works.

Comparing Fake News Detection Techniques

This table summarizes the different methods we've discussed, highlighting their focus, what they look for, and a simple way to think about each one.

| Detection Technique | What It Analyzes | Key Signals of Deception | Simple Analogy |
|---|---|---|---|
| Textual Analysis | The words, grammar, and tone of an article. | Emotional language, spelling errors, extreme bias. | Checking a story for plot holes. |
| Image/Video Forensics | The digital properties of a visual file. | Inconsistent compression, altered metadata. | Dusting for fingerprints at a crime scene. |
| Metadata & Provenance | A story's origin and publication history. | Anonymous sources, fake news site domains. | Tracing a rumor back to its source. |
| Social Signals | How content spreads across social media. | Bot-like sharing patterns, rapid amplification. | Tracking the spread of a virus. |

By combining these approaches, we create a robust defense system. No single technique is foolproof, but together, they make it much harder for misinformation to slip through the cracks.
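One simple way to combine techniques is a weighted average of per-technique risk scores. The weights below are made up for illustration; in practice they would be learned or calibrated on labeled examples, and many systems use a trained model rather than fixed weights.

```python
# Hypothetical weights for illustration only; real weights would be calibrated on data.
WEIGHTS = {"text": 0.3, "image": 0.25, "provenance": 0.25, "social": 0.2}


def combined_risk(scores: dict) -> float:
    """Weighted average of per-technique risk scores, each in [0, 1].

    Missing signals (e.g. an article with no images) are skipped and the
    remaining weights are renormalized, so an absent check doesn't pull
    the result toward zero.
    """
    used = {k: w for k, w in WEIGHTS.items() if k in scores}
    if not used:
        raise ValueError("no signals provided")
    total = sum(used.values())
    return sum(scores[k] * w for k, w in used.items()) / total
```

For example, an article with a text score of 0.8 and a social score of 0.2, but no image or provenance data, gets a combined score of (0.8 × 0.3 + 0.2 × 0.2) / 0.5 = 0.56.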

How AI Is Changing the Game in Fake News Detection

Let's be honest: traditional fact-checking methods, as valuable as they are, just can't keep up with the firehose of misinformation online. The speed and scale are overwhelming. This is where Artificial Intelligence isn't just another tool in the toolbox; it’s a complete game-changer for fake news detection. Modern AI can sift through content at a speed and depth that no human team could ever match.

Think of it this way: early systems were like a spell-checker, flagging obvious errors or keywords. But they were clumsy and easily tricked. Today’s AI, driven by Natural Language Processing (NLP), is far more sophisticated. We're teaching it to understand nuance—to spot the subtle fingerprints of deception like sarcasm, emotional language, logical fallacies, and biased framing that often fly under the radar.

From Keywords to True Context

The real breakthrough is AI's ability to understand context. An older system might see a sensational word like "miracle" and immediately raise a red flag. But a modern NLP model does more. It looks at the surrounding sentences, analyzes the author's tone, and even considers the publication's track record to figure out if "miracle" is part of a direct quote, a satirical headline, or a bogus health claim.

This kind of contextual awareness is what’s needed to catch sophisticated disinformation. These AI models learn by digesting massive datasets—we're talking millions of articles, both legitimate and fabricated. By crunching all that data, they start to recognize the almost invisible linguistic patterns and signatures that separate credible reporting from propaganda.
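Modern detectors rely on large transformer models, which are far too big to show here. As a stand-in, the sketch below is a tiny multinomial Naive Bayes classifier in plain Python. It's a much older technique, but it illustrates the same core idea: learning which word patterns go with each label from examples. The class name and the toy training sentences are hypothetical; a real model needs thousands of labeled articles.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Tiny multinomial Naive Bayes with Laplace (add-one) smoothing.

    Illustrative stand-in only, not how modern NLP detectors work internally.
    """

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        return self

    def predict(self, text):
        n_docs = sum(self.doc_counts.values())
        best, best_score = None, -math.inf
        for c in self.classes:
            # log P(class) + sum over words of log P(word | class), smoothed
            score = math.log(self.doc_counts[c] / n_docs)
            total = sum(self.word_counts[c].values())
            for w in tokenize(text):
                score += math.log((self.word_counts[c][w] + 1) / (total + len(self.vocab)))
            if score > best_score:
                best, best_score = c, score
        return best


if __name__ == "__main__":
    # Toy, made-up training data for illustration.
    texts = [
        "shocking miracle cure they don't want you to know",
        "secret trick doctors hate shocking truth",
        "city council approves annual budget after public hearing",
        "central bank holds interest rates steady officials said",
    ]
    labels = ["fake", "fake", "real", "real"]
    model = NaiveBayes().fit(texts, labels)
    print(model.predict("shocking secret cure revealed"))
```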

The power of AI in fake news detection is its ability to go beyond checking individual facts. It learns the style of deception. This means it can potentially flag brand-new misinformation by recognizing its structure and framing, even before its specific claims have been checked.

Specialized AI for Specific Threats

A one-size-fits-all approach rarely works, and that’s true here, too. The most powerful AI solutions are often trained for specific subject areas. Just as a doctor specializes, AI models can be fine-tuned to become experts at spotting falsehoods in high-stakes fields like finance, health, or politics. Gaining a grasp of the technologies that enable this, like OpenAI's Whisper speech-recognition model, helps clarify how even spoken content can be rapidly transcribed and then analyzed for red flags in these specialized domains.

Financial misinformation is a perfect example, where a single fake report can cause immediate and widespread economic damage. A recent groundbreaking study tackled this exact problem, creating a specialized model that shows just how effective this approach is. The research, published in a top AI journal, underscores the serious academic work driving these tools forward.


The study introduced FinFakeBERT, an AI model built specifically to detect financial fake news. After initial broad training, it was fine-tuned on financial articles. The results were striking: it achieved a 2.1% false positive rate, outperforming the general-purpose models it was compared against. This suggests that a targeted AI isn't just a little more accurate; it can be an essential defense for protecting sensitive sectors from manipulation. You can read the full research about these AI advancements to dive into the methodology yourself.
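A false positive rate describes only one kind of error: how often genuine articles are wrongly flagged. The sketch below computes it alongside recall, precision, and accuracy from a confusion matrix. The counts are hypothetical, chosen only so the false positive rate comes out at 2.1%; they are not the study's actual numbers.

```python
def rates(tp, fp, tn, fn):
    """Confusion-matrix rates for a fake-news classifier.

    'Positive' means 'flagged as fake'.
    """
    return {
        "false_positive_rate": fp / (fp + tn),  # genuine articles wrongly flagged
        "recall": tp / (tp + fn),               # fake articles actually caught
        "precision": tp / (tp + fp),            # flags that were correct
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


if __name__ == "__main__":
    # Hypothetical counts: 1,000 genuine articles (21 wrongly flagged), 500 fakes.
    for name, value in rates(tp=450, fp=21, tn=979, fn=50).items():
        print(f"{name}: {value:.1%}")
    # false_positive_rate: 2.1%
    # recall: 90.0%
    # precision: 95.5%
    # accuracy: 95.3%
```

With these made-up counts, the model flags 21 of 1,000 genuine articles while catching 450 of 500 fakes, which shows why a low false positive rate should be read together with the other metrics.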

This move toward specialized AI is where the future of fake news detection is heading. By developing expert models for different industries, we can build a much stronger, more targeted defense against the unique types of misinformation that threaten each one. It’s a smarter, more effective strategy that brings us closer to a more reliable information ecosystem. AI is no longer just an assistant in this fight—it's becoming a core part of the solution.

Your Practical Fact-Checking Workflow

Knowing the theory behind fake news is one thing. Actually spotting it in the wild is another. The best defense is a repeatable, methodical process you can pull out the moment a piece of content feels off. It's about building a mental habit.

Think of it as developing a reflex. Instead of passively consuming information, you become an active, critical thinker who automatically runs a quick mental checklist before hitting "share." This isn’t about being cynical; it’s about being a responsible citizen online. So, let's walk through building that workflow.

Step 1: Scrutinize the Source

Before you even read the headline, your first question should be simple: who is telling me this? The credibility of the source is the bedrock of any claim. An anonymous blog just doesn’t carry the same weight as a news organization with a public corrections policy and a history to protect.

Start with the URL. Does it look professional, or is it a bizarre spin-off of a well-known site? Look for an "About Us" page or author bios. If the people behind the site are hiding their identities or the domain was registered last week, your skepticism should be dialed way up. Brand impersonation is a classic trick: lookalike sites borrow a trusted name to lull you into a false sense of security.
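A related trick puts a trusted brand name somewhere in the hostname while the site actually lives on a different domain; the real domain is the part at the end of the hostname. Here is a rough sketch using Python's standard `urllib.parse`. The trusted list and the substring test are simplifying assumptions and will produce some false alarms (any unrelated hostname that happens to contain "bbc", for example).

```python
from urllib.parse import urlparse

# Hypothetical list of outlets you already trust.
TRUSTED_DOMAINS = {"bbc.co.uk", "reuters.com"}


def impersonated_brand(url: str):
    """Return a trusted domain whose brand name appears in the URL's hostname
    even though the site is not actually hosted on that domain, else None.

    Catches tricks like 'reuters.com.breaking-news.example', where the
    genuine domain is 'breaking-news.example', not 'reuters.com'.
    """
    host = (urlparse(url).hostname or "").lower()
    for trusted in TRUSTED_DOMAINS:
        genuine = host == trusted or host.endswith("." + trusted)
        brand = trusted.split(".")[0]  # e.g. "reuters"
        if not genuine and brand in host:
            return trusted
    return None


if __name__ == "__main__":
    print(impersonated_brand("https://www.reuters.com/world/"))                # None
    print(impersonated_brand("https://reuters.com.breaking-news.example/a"))   # reuters.com
```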

Step 2: Cross-Reference the Story

A single source is never enough, no matter how much you trust it. If something big happens, multiple independent and reliable news outlets will be all over it. Your next move is to open a new tab and search for the story yourself.

Are other reputable organizations confirming the key facts? If the story only seems to exist on a handful of fringe blogs, that’s a huge red flag. The absence of corroboration from diverse, established sources usually means the story is either brand new or completely fabricated. This one step can stop most sensationalist nonsense before you pass it on.

The core principle here is simple: extraordinary claims require extraordinary evidence. If only one source is reporting something monumental, the most likely explanation isn't an exclusive scoop; it's that the story doesn't hold up.
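Cross-referencing can be partly automated by counting how many distinct trusted outlets carry a story. Here is a minimal sketch, assuming you already have a list of URLs from a search and a hypothetical allowlist of outlets you consider reliable; the function names and the two-outlet minimum are illustrative choices.

```python
from urllib.parse import urlparse


def independent_outlets(urls, trusted):
    """Count distinct trusted outlets among URLs reporting the same story.

    Several links from the same outlet count only once.
    `trusted` is a hypothetical allowlist of domains you consider reliable.
    """
    outlets = set()
    for url in urls:
        host = (urlparse(url).hostname or "").lower()
        for domain in trusted:
            if host == domain or host.endswith("." + domain):
                outlets.add(domain)
    return len(outlets)


def is_corroborated(urls, trusted, minimum=2):
    return independent_outlets(urls, trusted) >= minimum
```

One caveat: wire-service stories are often republished by many outlets, so several matching links may still trace back to a single original report.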

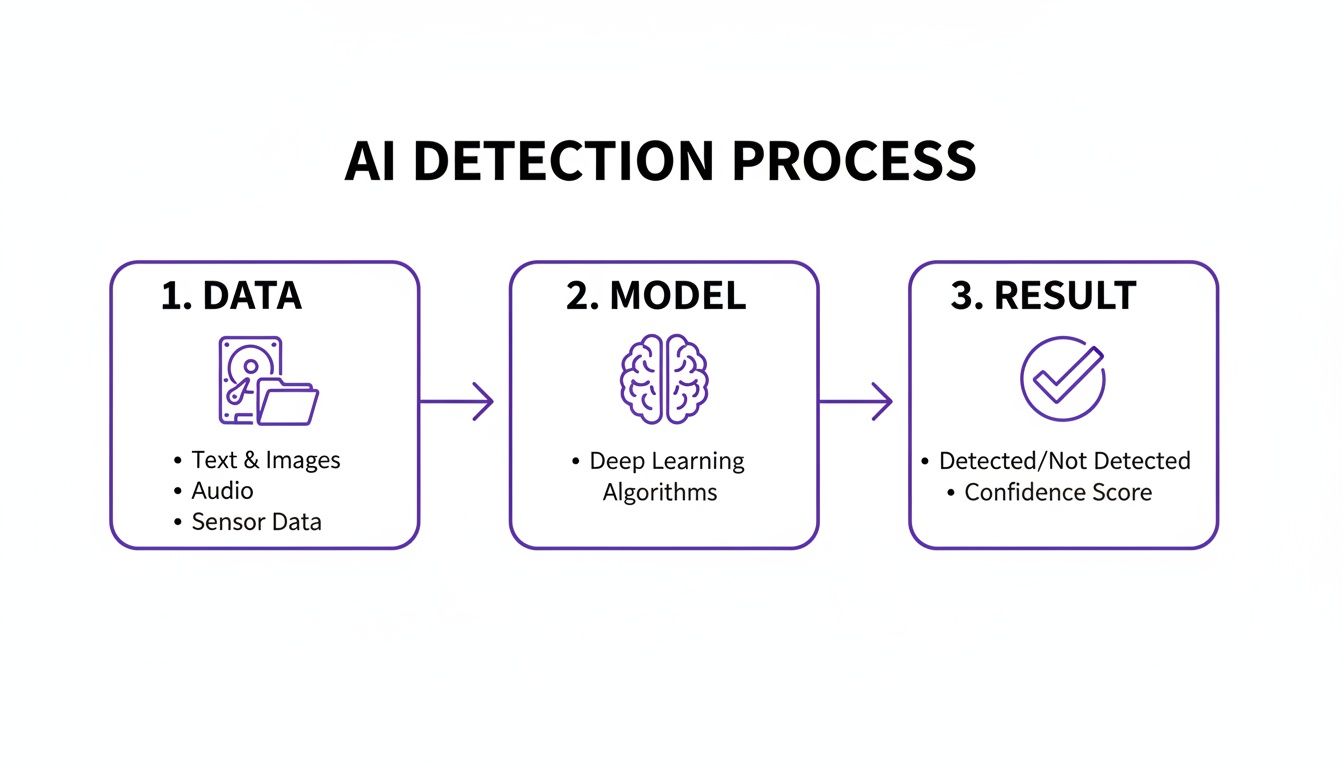

This kind of process, moving from input to analysis to a final result, mirrors how AI detection tools typically operate.

This visual breaks down the machine's "thinking" into clear stages, showing how it gets from input to verdict.

Step 3: Examine the Evidence

Good reporting is built on a foundation of verifiable evidence. A legitimate news story will cite its sources, quote experts by name, and link to original data or official reports. Your job is to pull on those threads. Does an article mention a scientific study? Go find that study and check if it really says what the article claims it does.

Are the quotes attributed to real, identifiable people? Be wary of vague phrases like "sources say" or "experts believe" without any names attached. For video content, a useful step is to download the YouTube transcript for deeper analysis. This lets you search for specific phrases and check them against other records, which is much faster than re-watching a clip over and over.
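Once you have a transcript, checking a quote becomes a search problem. The sketch below uses Python's standard `difflib.SequenceMatcher` to find the closest matching segment. It assumes the transcript is a list of `(start_seconds, text)` pairs (a hypothetical format; adapt it to however you export yours) and compares the quote against one segment at a time, so quotes that span two segments would need extra handling.

```python
from difflib import SequenceMatcher


def find_quote(transcript, quote, min_ratio=0.8):
    """Find the transcript segment that best matches a quoted phrase.

    `transcript`: list of (start_seconds, text) pairs (assumed format).
    Returns (start_seconds, text, ratio), or None if nothing is close enough.
    """
    quote = quote.lower()
    best = None
    for start, text in transcript:
        # ratio() is 1.0 for identical strings and drops as they diverge
        ratio = SequenceMatcher(None, quote, text.lower()).ratio()
        if best is None or ratio > best[2]:
            best = (start, text, ratio)
    if best is not None and best[2] >= min_ratio:
        return best
    return None


if __name__ == "__main__":
    transcript = [
        (0.0, "Good evening and welcome."),
        (4.5, "We will not raise taxes this year."),
        (9.0, "Thank you all for coming."),
    ]
    print(find_quote(transcript, "we will not raise taxes this year"))
    print(find_quote(transcript, "we will raise taxes"))  # None
```

A misquote like "we will raise taxes" scores well below the threshold against "We will not raise taxes this year," so it comes back as no match instead of a false confirmation.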

Step 4: Use Verification Tools

Finally, bring in technology to help you check the media itself. Visual misinformation is everywhere, but there are powerful, free tools at your disposal.

- For Images: Run a reverse image search using tools like Google Images or our own AI Image Detector. This can quickly show you the original context of a photo, revealing if it's an old image being recycled to fit a new, misleading narrative. Our tool takes it a step further by analyzing the image for tell-tale signs of AI generation, a rapidly growing threat.

- For Videos: This is a bit trickier, but you can take screenshots of key frames in a video and run a reverse image search on those. It can help you spot if the footage was actually filmed at a completely different event.
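Picking which frames to screenshot can be automated with a crude scene-change detector: keep a frame whenever it differs noticeably from the last one you kept. This sketch assumes frames have already been extracted (for example with a tool such as ffmpeg) and flattened into lists of grayscale values; the function names and the threshold of 30 are arbitrary illustrative choices.

```python
from typing import List, Sequence

Frame = Sequence[int]  # flattened grayscale pixels, 0-255 (assumed format)


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel brightness difference between two same-size frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def pick_keyframes(frames: List[Frame], threshold: float = 30.0) -> List[int]:
    """Return indices of frames worth screenshotting for a reverse image search.

    Keeps the first frame, then any frame that differs from the last kept
    frame by more than `threshold` on average (a rough scene-change test).
    """
    if not frames:
        return []
    kept = [0]
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i], frames[kept[-1]]) > threshold:
            kept.append(i)
    return kept


if __name__ == "__main__":
    # Tiny 4-pixel "frames": a dark scene, a bright scene, then a mid-gray one.
    frames = [[10] * 4, [12] * 4, [11] * 4, [200] * 4, [198] * 4, [50] * 4]
    print(pick_keyframes(frames))  # [0, 3, 5]
```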

By making these four steps—Source, Cross-Reference, Evidence, and Tools—a consistent habit, you build a powerful shield against deception. This systematic approach to fake news detection moves you beyond gut feelings and gives you a structured way to find the truth.

The Ethical Challenges of Content Moderation

Let's be clear: there's no magic bullet for this problem. The world of fake news detection is a minefield of thorny ethical questions. As our detection tools get smarter, they walk a razor's edge between shielding people from harmful content and upholding the basic right to free expression. This is the central struggle of modern content moderation.

One of the biggest headaches is the problem of false positives. This is when an automated system gets it wrong and flags legitimate content—think satire, parody, or even tough investigative journalism—as misinformation. The result isn't just a technical glitch; it can lead to outright censorship, silencing important conversations and creative works by mistake.

The Debate Between Safety and Free Speech

This tension fuels a constant, heated debate. On one side, we absolutely need to make the internet a safer place, free from dangerous lies that can spark violence or fuel a public health crisis. On the other side, people rightly worry that aggressive, automated moderation could be used to shut down dissent or simply remove content that's unpopular or challenging.

Well-meaning rules designed to stop fake news can be, and have been, twisted. We’ve seen authorities use these very laws as an excuse to muzzle political opponents or journalists who question the official story. It’s a huge risk: without ironclad ethical guidelines and full transparency, tools built to protect can easily become tools of control. This is where specialized content moderation services come in, blending smart technology with essential human judgment.

The ultimate challenge isn't just technological—it's philosophical. We have to figure out how to cultivate an informed public without building an echo chamber, and how to fight deception without killing the open exchange of ideas that a healthy democracy depends on.

An Unending Cat-and-Mouse Game

Finally, the dynamic between detection tools and the people creating disinformation is a never-ending cat-and-mouse game. As soon as a new detection technique starts working, the bad actors find a way around it. They might tweak an AI-generated image just enough to slip past a filter or develop new ways of writing to fool text analysis algorithms.

This constant back-and-forth proves that technology alone will never be the final answer. Real, effective fake news detection has to be a hybrid effort. It must combine the sheer speed and scale of AI with the contextual understanding, critical thinking, and ethical oversight that only a human can bring to the table.

Without that partnership, we’re stuck between two bad options: being drowned in a sea of misinformation or building automated systems that cause more harm than they prevent. The goal isn't to automate judgment, but to supercharge it.
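A hybrid workflow like this is often implemented as score-based routing, where the model decides what a person looks at first rather than what gets removed. The sketch below is one hypothetical policy; the action names and thresholds are assumptions, not any platform's actual rules.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str  # "publish", "human_review", or "limit_and_review"
    reason: str


def triage(risk: float, review_threshold: float = 0.5,
           high_threshold: float = 0.9) -> Decision:
    """Route content by a model's risk score while keeping humans in the loop.

    Nothing is removed automatically: even high-risk items go to a person,
    which limits the damage a false positive (e.g. flagged satire) can do.
    Thresholds are hypothetical.
    """
    if not 0.0 <= risk <= 1.0:
        raise ValueError("risk must be in [0, 1]")
    if risk >= high_threshold:
        return Decision("limit_and_review", "high model risk; reduce reach pending human check")
    if risk >= review_threshold:
        return Decision("human_review", "uncertain; needs contextual judgment")
    return Decision("publish", "low model risk")
```

Lowering `review_threshold` sends more content to people and catches more misinformation, at the cost of a heavier review workload; that trade-off is a policy decision, not a purely technical one.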

Frequently Asked Questions

It's natural to have questions when you're trying to sort fact from fiction online. Let's tackle some of the most common ones that come up when we talk about fake news detection.

Can AI Fake News Detection Be 100% Accurate?

In a word, no. We’re not at a point where any tool can claim 100% accuracy. Even the smartest AI models can get it wrong. Sometimes they'll flag a legitimate news story as false (what we call a false positive), and other times, a well-crafted piece of disinformation will slip right through.

Think of these tools as a highly intelligent assistant, not the final judge. Their real power is in quickly scanning massive amounts of content and flagging things that need a closer look. The best defense is always a combination of AI's scale and a human's critical thinking.

The goal of AI in this space isn't to replace human judgment but to augment it. AI can sift through millions of data points in seconds, flagging suspicious content for a person to review, making the verification process manageable.

What Is the Difference Between Misinformation and Disinformation?

This is a great question, and it all comes down to intent.

Misinformation is simply false information that's shared without any malicious purpose. Imagine a friend sharing an old article, genuinely believing it's current news. They aren't trying to trick anyone; they just made a mistake.

Disinformation is far more sinister. It’s false information that is deliberately created and spread to deceive, manipulate, or cause harm. While our detection tools mainly focus on whether the content itself is true or false, understanding the why behind it is key to grasping its real-world impact.

Are There Free Tools I Can Use for Fake News Detection?

Absolutely. You don't need a huge budget to start verifying content. Some of the most powerful tools are completely free and easy to use.

For images, a quick reverse image search on Google Images or TinEye can work wonders. It will show you where else an image has appeared online, often revealing if it's old, stolen, or used completely out of context.

And don't forget the pros! The web is full of dedicated fact-checking organizations that have already done the heavy lifting on thousands of claims:

- Snopes: The classic go-to for debunking everything from political rumors to viral memes.

- PolitiFact: Perfect for checking the accuracy of statements from politicians.

- FactCheck.org: A nonpartisan project that keeps a close eye on U.S. politics.

Making a habit of checking these resources is a simple, effective step you can take right now.

Ready to add a powerful layer of verification to your workflow? The AI Image Detector offers free, fast, and privacy-focused analysis to help you spot AI-generated images in seconds. Try it now at aiimagedetector.com and make informed decisions with confidence.