How Do AI Detectors Detect AI? A Practical Guide

AI detectors are trained to spot the subtle "digital fingerprints" that machine learning models leave behind. Think of it like a forensic analyst looking for clues at a crime scene. But instead of human DNA, these tools hunt for statistical giveaways—patterns in word choice, sentence structure, and rhythm that are just a little too perfect or predictable compared to creative, and often messy, human writing.

How AI Detectors Find Digital Fingerprints

To get a grip on how AI detectors work, we first need to talk about pattern recognition. At its core, an AI detector is a sophisticated pattern-matching system trained on massive datasets containing both human and AI-generated text. It learns to pick out the unique characteristics, or "tells," that separate one from the other.

This process isn't about understanding the content's meaning. It's about analyzing its statistical makeup.

Imagine a seasoned musician who can instantly tell the difference between a song played by a master pianist and one played by a computer program. The computer might hit every note with flawless precision, but the human musician can hear the subtle, almost imperceptible variations in timing and pressure that give the performance its soul. AI detectors do something very similar, but for language.

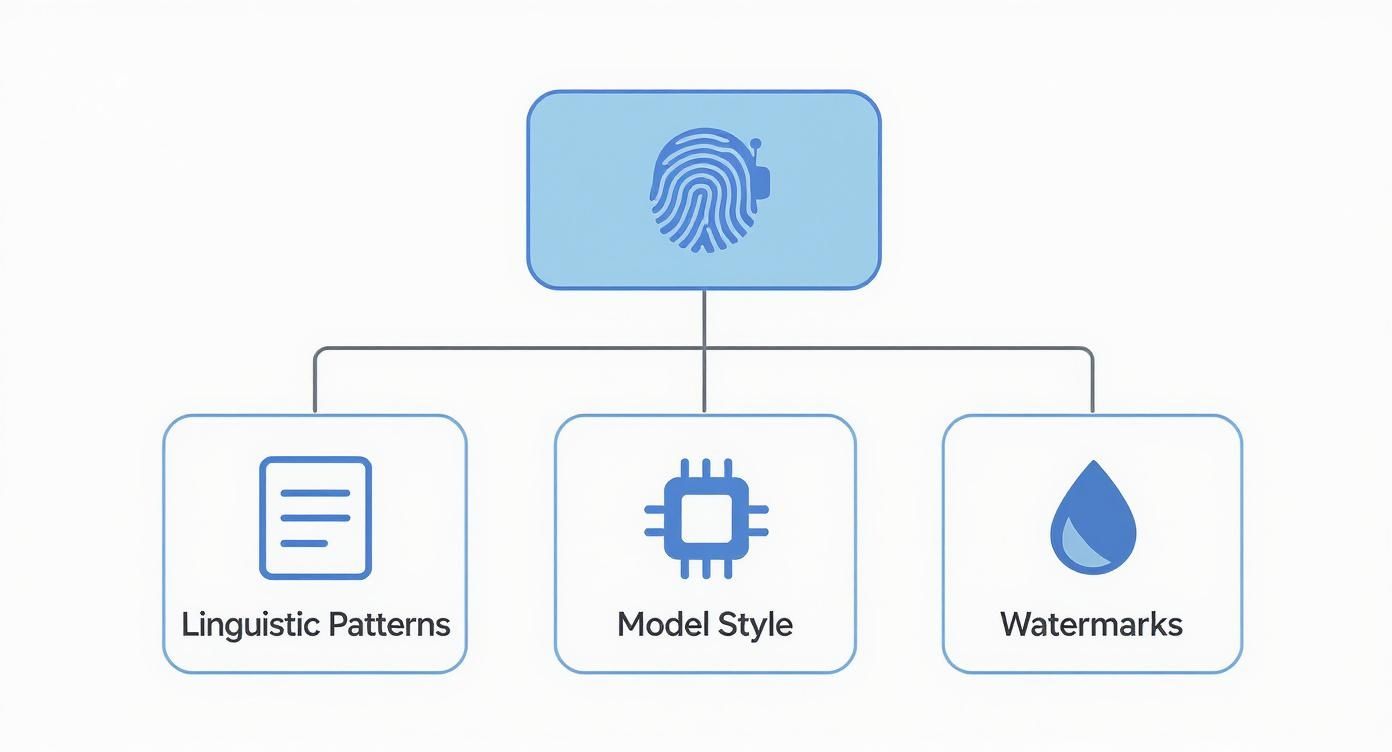

The Three Pillars of AI Detection

These tools typically rely on a combination of three primary methods to form their conclusions. Each approach examines the content from a different angle, and when used together, they create a much more accurate and nuanced picture of its origin. This infographic breaks down these core pillars.

As the diagram shows, detection is built on analyzing linguistic patterns, identifying a model's unique stylistic signature, and finding hidden watermarks.

This combination of techniques allows detectors to move beyond simple checks and perform a more holistic analysis. AI content detection tools have evolved dramatically, examining sentence structure, token patterns, and predictability metrics to estimate the likelihood of machine generation.

However, hybrid content—where AI-generated text is edited by a human—often confuses these classifiers, presenting a persistent challenge. You can find more insights about the evolution of AI detection at Wellows.com.

The Core Idea: An AI detector doesn't "read" for meaning. It scans for mathematical and structural clues left behind during the content's creation, using these clues to calculate the probability of machine involvement.

To give you a clearer picture, here’s a quick breakdown of the main detection methods these tools use.

Core AI Detection Methods at a Glance

| Detection Method | Core Principle | Example Application |
|---|---|---|
| Statistical Analysis | AI models often produce text with low "perplexity" and "burstiness," meaning it's too predictable and evenly paced compared to human writing. | A tool flags a paragraph because every sentence is roughly the same length and uses very common word pairings. |
| Model Fingerprinting | Each AI model has a unique "style" or signature based on its training data and architecture, which can be identified. | An image detector recognizes the specific way a certain version of Midjourney renders hands or textures. |
| Watermarking | Developers embed an invisible, statistically detectable signal or pattern into the model's output. | A text generator subtly encodes a pattern in its choice of punctuation or synonyms that is invisible to a human but readable by a machine. |

Understanding these fundamental approaches is the first step. Next, we'll dive deeper into each one. You'll learn exactly what linguistic signals give AI away, how a specific model like GPT-4 can be "fingerprinted," and the clever ways developers are using invisible watermarks to make AI content easier to trace. This knowledge is key to interpreting detection scores and using these tools effectively.

Decoding the Patterns of AI Writing

The most reliable way to spot AI-generated text is to look for its fingerprints—the subtle linguistic and statistical patterns it leaves behind. Human writing is wonderfully messy and unpredictable. AI writing, for all its sophistication, often carries consistent mathematical signatures that trained detectors can pick up on.

Think of it like telling a natural forest apart from a tree farm. To the casual eye, both are just woods. But an expert immediately sees the unnaturally perfect spacing, the uniform tree heights, and the lack of biodiversity in the farm. AI detectors are that expert for text, hunting for patterns that are just a little too orderly to be human.

Two of the most telling metrics they look for are perplexity and burstiness. Getting a handle on these two concepts is the key to understanding how this all works.

The Predictability Problem: Perplexity

Imagine you’re playing a word-guessing game. If a friend starts a sentence with, "The cat sat on the…," you’d probably guess "mat" or "couch." These are predictable, low-surprise words. But what if they said, "The cat sat on the… crescendo"? That’s a high-surprise, or high-perplexity, choice.

Perplexity is really just a "surprise meter" for language.

- Low Perplexity: The text sticks to common, predictable words and phrases. The next word is almost always the most statistically likely choice.

- High Perplexity: The writing is full of unexpected vocabulary, complex sentences, and less common word pairings.

Real human writing is all over the place, which gives it a naturally higher perplexity. We use slang, metaphors, and creative phrasing that an AI, trained to predict the most probable next word, tends to avoid. AI writing often hugs that statistical middle ground, producing text that feels smooth but is ultimately very predictable, which is exactly the kind of signal a detection algorithm is built to catch.
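In practice, perplexity is commonly computed as the exponential of the average negative log-probability that a language model assigns to each token of the text. The minimal sketch below assumes you already have those per-token probabilities from some scoring model; the function name and the probability values are illustrative assumptions, not output from any real detector.

```python
import math


def perplexity(token_probs):
    """Return exp(mean negative log-probability) over the given tokens.

    token_probs: probabilities (each in (0, 1]) that a hypothetical scoring
    model assigned to the token that actually came next in the text.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    if any(not 0 < p <= 1 for p in token_probs):
        raise ValueError("probabilities must be in (0, 1]")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


# Illustrative numbers only: every word is a likely pick ("...on the mat")
predictable = [0.6, 0.5, 0.7, 0.55]
# ...versus one very surprising pick ("...on the crescendo")
surprising = [0.6, 0.5, 0.02, 0.3]

print(f"predictable: {perplexity(predictable):.2f}")
print(f"surprising:  {perplexity(surprising):.2f}")
```

The second sequence scores higher because the single low-probability token (0.02) drags the average log-probability down sharply. A perplexity of k roughly means the model was, on average, as uncertain as if it were choosing uniformly among k options.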

The Rhythmic Clue: Burstiness

Next up is the rhythm and flow of the writing, something we call burstiness. Human writing is rarely uniform. We tend to write in bursts, mixing long, flowing sentences that explore a complex idea with short, punchy ones that drive a point home. This creates a varied and dynamic rhythm.

AI models, on the other hand, really struggle with this natural cadence. They often produce text where sentences are of a similar length and complexity, which results in a monotonous, almost machine-like rhythm.

An AI detector measures this ebb and flow. A lack of variation—or low burstiness—is a strong signal that the text was built piece-by-piece with statistical consistency, not flowing from a human mind.
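There is no single standard burstiness formula; one simple proxy is the coefficient of variation of sentence length (standard deviation divided by mean). The sketch below assumes that proxy and a deliberately crude regex sentence splitter, and applies it to the two sample paragraphs from the human-vs-AI comparison in this guide. The function names are hypothetical.

```python
import re
import statistics


def sentence_lengths(text):
    """Word count per sentence, splitting crudely on runs of ., ! or ?."""
    sentences = [s for s in re.split(r"[.!?]+(?:\s+|$)", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Coefficient of variation of sentence length (population std / mean).

    0.0 means every sentence has the same length; higher values mean a more
    varied rhythm. This is an illustrative proxy, not any tool's formula.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


human = ("I went to the store. It was chaos! People were everywhere, and I "
         "couldn't even find the milk. After 20 minutes of searching, I gave up.")
ai = ("I visited the local grocery establishment to procure some dairy products. "
      "The environment was notably crowded with numerous patrons. Unfortunately, "
      "I was unable to locate the desired item after a thorough search.")

print(sentence_lengths(human), round(burstiness(human), 2))
print(sentence_lengths(ai), round(burstiness(ai), 2))
```

On these samples the human paragraph (sentence lengths 5, 3, 10, 8) scores roughly 0.41 while the AI paragraph (11, 8, 13) scores roughly 0.19. A splitter this simple will stumble on abbreviations such as "e.g.", so treat it as a teaching aid only.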

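These patterns are a direct result of how large language models (LLMs) generate text: they produce one token (a word or word fragment) at a time, each drawn from a probability distribution over possible next tokens, and common decoding settings favor the most probable options.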
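Under the hood, a language model assigns a raw score (a logit) to every candidate next token, turns those scores into probabilities with a softmax function, and then picks from that distribution. Many LLM APIs expose a "temperature" setting that rescales the scores first: values below 1 concentrate probability on the likeliest words, while values above 1 spread it out. The sketch below uses made-up logits for the guide's "The cat sat on the…" example; they do not come from any real model.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw next-token scores (logits) into probabilities.

    temperature < 1 sharpens the distribution toward the top token;
    temperature > 1 flattens it. Subtracting the max keeps exp() stable.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    top = max(scaled)
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for candidate next words after "The cat sat on the..."
candidates = ["mat", "couch", "floor", "crescendo"]
logits = [3.0, 2.0, 1.5, -2.0]

for t in (0.5, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, {word: round(p, 3) for word, p in zip(candidates, probs)})
```

At lower temperatures "mat" absorbs more of the probability and "crescendo" almost disappears, which is one mechanical reason sampled AI text drifts toward predictable, low-perplexity choices.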

Comparing Human vs. AI Writing

Let's put these concepts into practice with a quick example.

| Feature | Human-Written Paragraph | AI-Generated Paragraph |
|---|---|---|
| Sentence Structure | "I went to the store. It was chaos! People were everywhere, and I couldn't even find the milk. After 20 minutes of searching, I gave up." | "I visited the local grocery establishment to procure some dairy products. The environment was notably crowded with numerous patrons. Unfortunately, I was unable to locate the desired item after a thorough search." |
| Word Choice | Uses informal, emotive words like "chaos!" and simple phrasing like "gave up." High perplexity. | Uses formal, stilted language like "procure" and "establishment." Very predictable and low perplexity. |
| Rhythm | A mix of very short and longer sentences creates a natural, varied rhythm. High burstiness. | Sentences are of a similar, uniform length, creating a flat, robotic tone. Low burstiness. |

As you can see, the AI version is grammatically flawless, but it lacks the authentic, varied texture of the human example. These are the exact clues an AI detector is built to find. They're trained on millions of examples like these, learning to associate low perplexity and low burstiness with machine generation.
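As a deliberately tiny stand-in for that training process, the sketch below fits a logistic-regression classifier with plain gradient descent on two features, perplexity and burstiness, and outputs a probability of AI authorship. The feature values are made-up toy numbers (perplexity rescaled to roughly 0 to 1 so both features share a scale), not measurements, and the function names are hypothetical; production detectors generally rely on far more data and richer models.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w, ppl, burst):
    """P(AI) = sigmoid(w0 + w1 * perplexity + w2 * burstiness)."""
    return sigmoid(w[0] + w[1] * ppl + w[2] * burst)


def train_logistic(samples, labels, lr=1.0, epochs=5000):
    """Batch gradient descent on the average log-loss."""
    w = [0.0, 0.0, 0.0]
    n = len(samples)
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0]
        for (ppl, burst), y in zip(samples, labels):
            err = predict(w, ppl, burst) - y  # derivative of log-loss w.r.t. z
            for i, x in enumerate((1.0, ppl, burst)):
                grad[i] += err * x
        w = [wi - lr * g / n for wi, g in zip(w, grad)]
    return w


# Toy data: (scaled perplexity, burstiness); label 1 = AI, 0 = human.
samples = [(0.12, 0.15), (0.15, 0.20), (0.18, 0.18),
           (0.45, 0.60), (0.60, 0.55), (0.50, 0.70)]
labels = [1, 1, 1, 0, 0, 0]

weights = train_logistic(samples, labels)
print([round(w, 2) for w in weights])
print(round(predict(weights, 0.15, 0.18), 3))  # low/low features: AI-like
print(round(predict(weights, 0.52, 0.62), 3))  # high/high features: human-like
```

The output is a probability rather than a verdict, which is also why detectors report confidence scores instead of a flat "yes" or "no."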

For a closer look at the different signals these tools track, check out our guide on what AI detectors look for.

Tracing Content Back to the AI Model

Knowing a piece of text was AI-generated is one thing, but the really interesting question is: which specific AI model created it? This is where detection gets sophisticated. Instead of just looking for general AI traits, advanced tools are starting to pinpoint the source, kind of like how a forensic expert can trace a letter back to a specific typewriter by its unique key impressions.

Two powerful techniques make this possible: model fingerprinting and watermarking. They both leave clues about where the content came from, but they operate in completely different ways. Getting to know them shows just how deep the rabbit hole of AI detection goes.

Identifying a Model's Unique "Accent"

Think of every AI model as having its own unique digital fingerprint. It’s like a subconscious accent or a stylistic tic. Just as a person might consistently use a certain phrase, an AI model develops subtle but ingrained habits based on its architecture, its training data, and the precise math it uses to string words together.

These fingerprints aren't put there on purpose; they're just organic byproducts of how the model was built. A detector that’s been trained on enough examples can start to pick up on these tiny differences and learn to distinguish the stylistic quirks of, say, Google's Gemini from those of OpenAI's GPT-4.

This is a passive detection method, meaning the tool is looking for clues that are already there, such as:

- Vocabulary Preferences: One model might just have a habit of choosing certain synonyms more often than others.

- Structural Tendencies: You might find a model has a knack for starting paragraphs in a very particular way.

- Punctuation Habits: Even something as small as how an AI uses commas or semicolons can act as a signature.

By piecing together these almost invisible traits, a detector can make a highly educated guess about who—or what—the author is. It’s a bit like digital forensics, where the evidence left behind in the text helps reconstruct the writer's identity.
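A very reduced version of this passive approach is stylometry: turn each text into a vector of marker-feature rates (punctuation marks, favorite words), then check which known model's reference vector it most resembles. Everything model-specific below is hypothetical: the marker list, the model names, and the short reference texts are invented for illustration. A real system would build references from large samples of each model's output and would typically use a trained classifier rather than a single similarity score.

```python
import math
import re
from collections import Counter

# Hypothetical marker features: a few punctuation marks and "habit" words.
MARKERS = [",", ";", ":", "!", "however", "moreover", "additionally", "really", "just"]


def profile(text):
    """Rate of each marker per 100 tokens (words and the punctuation above)."""
    tokens = re.findall(r"[a-z']+|[,;:!]", text.lower())
    counts = Counter(tokens)
    n = max(len(tokens), 1)
    return [100.0 * counts[m] / n for m in MARKERS]


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def closest_model(text, reference_profiles):
    """Return (model_name, similarity) for the best-matching reference profile."""
    p = profile(text)
    return max(((name, cosine(p, ref)) for name, ref in reference_profiles.items()),
               key=lambda pair: pair[1])


# Invented reference samples for two hypothetical models.
references = {
    "model_a": profile("Moreover, the results are clear; additionally, the method "
                       "is robust; however, limits remain."),
    "model_b": profile("It's just great! Really, it just works! Just try it!"),
}

query = ("Additionally, the data is noisy; moreover, the model is small; "
         "however, it works.")
print(closest_model(query, references))
```

The query shares model_a's habits (semicolons plus "moreover," "additionally," "however"), so it matches model_a far more closely than the exclamation-heavy model_b.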

Embedding a Hidden "Serial Number"

If fingerprinting is about passive observation, watermarking is an active, intentional act. This is where the AI developer purposely embeds an invisible signal right into the generated content. It functions like a hidden serial number, completely undetectable to a human reader but easy for a corresponding scanner to find.

Think of it like writing a message with invisible ink. The paper looks blank to the naked eye, but when you shine a special light on it, the hidden words appear. AI watermarking is based on the same idea, only it uses statistical patterns instead of ink.

A developer can program a model to subtly favor a specific, pre-determined set of words or punctuation in a sequence that seems totally random to a person. A detection algorithm, however, knows the "secret rule" and can scan the text for that exact pattern to confirm its origin.

This approach is generally more reliable than fingerprinting because it's a deliberate and verifiable signal. Researchers keep developing watermarking techniques designed to survive paraphrasing or light editing, although heavy rewriting can still weaken the signal. Where it holds up, it creates a much stronger chain of custody for anything an AI produces.
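One published family of text watermarks, often called "green list" watermarking (Kirchenbauer et al., 2023), works roughly like this: a secret key plus the previous token pseudo-randomly splits the vocabulary into "green" and "red" words, generation favors green words, and the detector counts green words and asks how unlikely that count would be by chance. The sketch below is a toy approximation under several assumptions: each (previous word, word) pair gets a keyed hash "coin flip," so the green list holds roughly (not exactly) half the vocabulary; the "generator" is random choice rather than a real model; and it uses the strict variant that samples only green words, whereas the published scheme also describes a softer variant that merely boosts them. All names and the key are hypothetical.

```python
import hashlib
import math
import random

GAMMA = 0.5  # target fraction of the vocabulary that is "green" at each step


def is_green(prev_token, token, key):
    """Keyed pseudo-random green/red assignment, reseeded by the previous token."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    return digest[0] < 256 * GAMMA


def toy_generate(vocab, length, key, watermark, seed=0):
    """Stand-in for an LLM: picks random words; if watermarking, only green ones."""
    rng = random.Random(seed)
    tokens = [rng.choice(vocab)]
    for _ in range(length):
        options = vocab
        if watermark:
            options = [w for w in vocab if is_green(tokens[-1], w, key)] or vocab
        tokens.append(rng.choice(options))
    return tokens


def watermark_z_score(tokens, key):
    """How far the green-token count sits above chance, in standard deviations."""
    n = len(tokens) - 1  # number of scored (previous, current) pairs
    if n <= 0:
        return 0.0
    green = sum(is_green(a, b, key) for a, b in zip(tokens, tokens[1:]))
    return (green - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))


vocab = [f"word{i}" for i in range(50)]
secret = "demo-key"  # hypothetical; only the developer and detector know it
marked = toy_generate(vocab, 200, secret, watermark=True)
plain = toy_generate(vocab, 200, secret, watermark=False)

print(round(watermark_z_score(marked, secret), 1))  # expected: far above chance
print(round(watermark_z_score(plain, secret), 1))   # expected: small, like chance
```

Without the right key, the watermarked text looks like ordinary random word choice, which is what makes the signal invisible to readers but checkable by the developer's scanner.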

The main difference really comes down to intent:

| Detection Method | Core Principle | Analogy |
|---|---|---|
| Fingerprinting | Passively identifying a model's unintentional stylistic habits. | Recognizing a friend's handwriting by their unique loops and slants. |
| Watermarking | Actively embedding a secret, verifiable signal into the output. | Holding a genuine banknote up to the light to reveal its watermark. |

Both of these techniques are playing a huge part in the push for greater transparency. By moving beyond a simple "human vs. AI" verdict, they make it possible to attribute content to a specific source. That’s a critical step for accountability, sorting out copyright, and fighting misinformation. As these methods improve, identifying not just if AI was used but which AI it was will likely become standard practice.

How AI Image Detectors Spot Fake Visuals

Detecting AI-generated text is one thing, but spotting a fake image is a whole different ballgame. The goal is the same—find the digital fingerprints a machine leaves behind—but the clues are hidden in pixels and frequencies, not words. AI image detectors are designed to hunt for these giveaways, most of which are completely invisible to the human eye.

Think of an AI image detector as a digital art authenticator, but one with superhuman vision. A human expert might spot weird brushstrokes or inconsistencies in how a canvas has aged. An AI detector, on the other hand, dives down to the pixel level, searching for the tell-tale signs of digital creation that even the most advanced generative models can't quite hide.

These tools don’t just "look" at an image; they break it down into raw data to find the statistical anomalies that give away its artificial origins.

Uncovering Statistical Artifacts

The most common approach is to look for statistical artifacts. These are the subtle, almost imperceptible imperfections and patterns that creep in because of how generative models are built. They’re the visual equivalent of an AI writer overusing the same sentence structure.

We all remember the early days of AI images—people with six fingers or teeth that blurred into a single, horrifying strip. Modern models have gotten much better, but they still struggle to get complex details perfectly right.

Detectors are now trained to spot much finer errors, like:

- Unnatural Textures: Look closely at surfaces like skin, wood, or fabric. They might seem real at first glance, but they often lack the tiny, random variations you’d find in the real world.

- Flawless Geometry: AI has a habit of creating unnaturally perfect circles, perfectly straight lines, or repeating patterns that are just a little too clean to be authentic.

- Inconsistent Lighting: This is a big one. Shadows might fall in the wrong direction, or reflections in a mirror or a window might not accurately match their surroundings.

For example, an AI image might show a person with beautifully rendered hair, but if you look close enough, you might see that individual strands repeat in a subtle, unnatural pattern. A detector can spot that repetition instantly, even if a human would never notice.
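Repetition like this can be hunted with autocorrelation: compare a row of pixel brightness values with shifted copies of itself, and a texture that repeats every k pixels produces a strong peak at shift k. The sketch below is a simplified, single-row illustration on synthetic data (a random 8-pixel "strand" tiled across the row versus plain random noise); the function names are hypothetical, and real image detectors generally analyze full 2-D images, often with trained models, rather than relying on one hand-set check.

```python
import random
import statistics


def autocorrelation(signal, lag):
    """Normalized autocorrelation of a 1-D signal at a given lag."""
    mean = statistics.fmean(signal)
    centered = [x - mean for x in signal]
    energy = sum(c * c for c in centered)
    if energy == 0:
        return 0.0
    overlap = len(signal) - lag
    return sum(centered[i] * centered[i + lag] for i in range(overlap)) / energy


def strongest_repeat(signal, min_lag=2, max_lag=None):
    """(lag, score) of the highest autocorrelation peak: a crude repetition hint."""
    max_lag = max_lag or len(signal) // 2
    return max(
        ((lag, autocorrelation(signal, lag)) for lag in range(min_lag, max_lag + 1)),
        key=lambda pair: pair[1],
    )


rng = random.Random(42)
tile = [rng.randint(0, 255) for _ in range(8)]  # one synthetic "strand" pattern
tiled_row = tile * 16                           # 128 pixels, repeats every 8
noisy_row = [rng.randint(0, 255) for _ in range(128)]

print(strongest_repeat(tiled_row))  # strong peak at lag 8
print(strongest_repeat(noisy_row))  # weak peak, no clear period
```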

By piecing together these tiny imperfections, an AI image detector builds a case for whether the visual was cooked up by a machine.

Listening for a Digital Hum

Another fascinating technique involves analyzing an image's frequency-domain signals. It sounds complicated, but the idea is pretty simple. Every image has a unique "sound" or frequency signature based on the arrangement of its pixels.

Here's an analogy: a photo from a real camera has a bit of natural noise and texture—think of it as a faint, organic "hiss." An AI-generated image, built from the ground up by an algorithm, often has a different kind of digital hum. Detectors can convert an image into its frequency components to "listen" for this machine-made signal, which usually looks smoother and more uniform than what a real camera sensor captures.
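The "listening" step is usually a Fourier transform, which re-expresses an image as a sum of waves, from slow brightness changes (low frequencies) to fine, pixel-to-pixel grain (high frequencies). The sketch below measures what share of an image's spectral energy sits at high frequencies, using a naive pure-Python 2-D DFT that is only practical for tiny images. It compares two synthetic 16×16 "images": a smooth gradient standing in for the more uniform output described above, and random noise standing in for sensor grain. The cutoff and function names are assumptions, and real detectors typically feed spectra into trained classifiers rather than applying a single threshold.

```python
import cmath
import math
import random


def dft2(image):
    """Naive 2-D discrete Fourier transform; O(N^4), fine for a 16x16 demo."""
    h, w = len(image), len(image[0])
    return [[sum(image[y][x] * cmath.exp(-2j * math.pi * (u * y / h + v * x / w))
                 for y in range(h) for x in range(w))
             for v in range(w)]
            for u in range(h)]


def high_freq_ratio(image, cutoff=0.25):
    """Share of non-DC spectral energy whose radial frequency exceeds `cutoff`
    (in cycles per pixel; the maximum along each axis is 0.5)."""
    h, w = len(image), len(image[0])
    spectrum = dft2(image)
    high = total = 0.0
    for u in range(h):
        for v in range(w):
            if u == 0 and v == 0:
                continue  # DC term = overall brightness, not texture
            fu, fv = min(u, h - u) / h, min(v, w - v) / w
            energy = abs(spectrum[u][v]) ** 2
            total += energy
            if math.hypot(fu, fv) > cutoff:
                high += energy
    return high / total if total else 0.0


N = 16
smooth = [[8 * x + 4 * y for x in range(N)] for y in range(N)]
rng = random.Random(7)
noisy = [[rng.uniform(0, 255) for _ in range(N)] for _ in range(N)]

print(f"smooth gradient: {high_freq_ratio(smooth):.2f}")
print(f"random noise:    {high_freq_ratio(noisy):.2f}")
```

The gradient keeps most of its energy at low frequencies, while the noise spreads energy across the whole spectrum, so the noisy image scores much higher.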

It's worth noting that accuracy can really vary. While text detectors can be incredibly precise, spotting AI images is much harder. GPTGuard currently leads the pack in this area, hitting 94.3% accuracy with its visual anomaly detection. In contrast, tools designed primarily for text see their accuracy drop to around 85% when trying to analyze images. You can find more details in the latest AI detection accuracy benchmarks on Hastewire.com.

Checking the Digital Birth Certificate

Finally, there’s a growing field focused on provenance and metadata. This method doesn't just analyze the pixels in the image; it looks for external proof of where it came from. It's like checking a painting’s certificate of authenticity instead of just staring at the canvas.

Initiatives like the Coalition for Content Provenance and Authenticity (C2PA) are pushing for a secure "digital birth certificate" for all kinds of media. When a C2PA-compliant camera or AI model creates an image, it cryptographically signs the file with information about its origin and any edits made afterward.

This creates a verifiable digital trail that confirms:

- Creator: Was it a specific camera model or an AI service?

- Creation Date: When was the file first made?

- Edit History: Has the image been altered since it was created?

An AI image detector can look for this C2PA manifest. If it’s there and signed by a known AI generator, the case is closed. If it’s missing, the detector has to fall back on other methods, like artifact analysis, to make a call.
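The decision flow described here can be sketched as follows. This is a heavily simplified, hypothetical illustration: real C2PA manifests are signed with certificate-based (X.509) digital signatures and embedded in the file in a standardized format, whereas here a shared-key HMAC and a plain dictionary stand in for them, and the signer names, keys, and manifest fields are invented.

```python
import hashlib
import hmac
import json

# Hypothetical trust list: signer name -> (demo key, is the signer an AI generator?)
TRUSTED_SIGNERS = {
    "ExampleCamera": (b"camera-demo-key", False),
    "ExampleAIService": (b"ai-demo-key", True),
}


def sign_manifest(manifest, key):
    """HMAC over a canonical JSON encoding (stand-in for a real signature)."""
    payload = json.dumps(manifest, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def check_provenance(image_bytes, manifest, signature):
    """Return 'ai', 'camera', 'tampered', or 'unknown' (fall back to artifact analysis)."""
    if manifest is None:
        return "unknown"  # no manifest: provenance can't settle it
    signer = TRUSTED_SIGNERS.get(manifest.get("signer"))
    if signer is None:
        return "unknown"  # signed by someone we don't recognize
    key, is_ai = signer
    if not hmac.compare_digest(sign_manifest(manifest, key), signature or ""):
        return "tampered"  # manifest altered or signature forged
    if manifest.get("content_sha256") != hashlib.sha256(image_bytes).hexdigest():
        return "tampered"  # pixels changed after signing without a new manifest
    return "ai" if is_ai else "camera"


image = b"demo image bytes"
manifest = {
    "signer": "ExampleAIService",
    "created": "2024-05-01T12:00:00Z",
    "content_sha256": hashlib.sha256(image).hexdigest(),
    "edits": [],
}
signature = sign_manifest(manifest, b"ai-demo-key")

print(check_provenance(image, manifest, signature))            # ai
print(check_provenance(image + b"edit", manifest, signature))  # tampered
print(check_provenance(image, None, None))                     # unknown
```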

Making Sense of AI Detection Scores

When an AI detector gives you a result, it’s almost never a simple "yes" or "no." What you get is a probability score—something like "98% confidence of AI generation." It's critical to understand what this number really means. It's not proof; it's an educated guess.

Think of it as the tool saying, "Based on all the patterns, statistical quirks, and digital fingerprints I can see, here’s how likely I think it is that a machine created this." A 98% score is a very strong signal, while a 60% score suggests the tool found a few AI-like traits but is far from certain.

Treating these scores as probabilities is the key. It helps you use the tool effectively without jumping to conclusions based on a single number.

The Problem of False Positives and Negatives

Since this is a game of probabilities, mistakes are going to happen. They come in two flavors, and you need to be aware of both: false positives and false negatives.

A false positive is when the detector flags human work as AI-generated. This is a serious issue because it can lead to false accusations. We’ve seen studies showing this can happen more often with text from non-native English speakers, whose writing style might unintentionally mimic the more predictable patterns an AI produces.

Then you have the false negative, where AI-generated content slips by completely unnoticed. This is a constant game of cat and mouse. It often happens when AI content is heavily edited by a person or when a brand-new, more sophisticated model is used that the detector hasn't been trained on yet.
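Both error types are usually reported as rates measured on a labeled test set: the false positive rate is the share of human-written documents that get flagged, and the false negative rate is the share of AI-generated documents that slip through. A minimal sketch, using a hypothetical function name and hypothetical evaluation counts:

```python
def error_rates(results):
    """results: iterable of (is_actually_ai, was_flagged_as_ai) pairs.

    Returns (false_positive_rate, false_negative_rate).
    """
    false_pos = false_neg = humans = ais = 0
    for actually_ai, flagged in results:
        if actually_ai:
            ais += 1
            false_neg += not flagged  # AI text the detector missed
        else:
            humans += 1
            false_pos += flagged      # human text wrongly flagged
    fpr = false_pos / humans if humans else 0.0
    fnr = false_neg / ais if ais else 0.0
    return fpr, fnr


# Hypothetical test set: 100 human essays (5 wrongly flagged)
# and 100 AI essays (12 missed).
results = ([(False, True)] * 5 + [(False, False)] * 95
           + [(True, False)] * 12 + [(True, True)] * 88)
print(error_rates(results))  # (0.05, 0.12)
```

Which rate matters more depends on context: where a single false positive can mean a false accusation, a low false positive rate usually comes first.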

Key Takeaway: No detector is perfect. False positives and negatives are real-world problems that remind us why human judgment and critical thinking are still essential when interpreting the results.

For a deeper dive into how these error rates play out, you can learn more about how accurate AI detectors really are.

How to Interpret Your AI Detection Score

A good detection report gives you more than just one number. When you get a result, your job is to look at the whole picture, not just that final percentage. It’s the starting point for your investigation, not the final word.

To help you get started, we've put together a quick guide on how to read these scores with the right amount of scrutiny.


| Confidence Score Range | What It Means | Recommended Action |
|---|---|---|
| 0-49% (Likely Human) | The content shows natural variation and complexity, the hallmarks of human writing. | You can generally trust this result. Just keep in mind that heavily human-edited AI content can sometimes fall into this range. |
| 50-89% (Mixed/Uncertain) | The tool is on the fence. It found some AI-like patterns but not enough to make a confident call. This often happens with AI-assisted writing. | Time to dig deeper. Compare the writing style to other known works, check the sources, and maybe even talk to the author. |
| 90-100% (Likely AI) | The text has all the tell-tale signs of machine generation, like overly consistent sentence structures and low randomness. | Treat this as a strong signal. This score justifies a much closer review, always factoring in the broader context of the situation. |
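If you process many reports, the bands in this table can be encoded directly. The sketch assumes a score reported on a 0 to 100 scale and uses this guide's cutoffs; the function name is hypothetical, individual tools use their own scales and thresholds, and scores that fall between the table's integer bands (such as 49.5) are assigned to the lower band here.

```python
def interpret_score(score):
    """Map a 0-100 AI-likelihood score to this guide's bands and actions."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score < 50:
        return ("Likely Human",
                "Generally trust it; heavily edited AI text can still land here.")
    if score < 90:
        return ("Mixed/Uncertain",
                "Dig deeper: compare with known work, check sources, talk to the author.")
    return ("Likely AI",
            "Strong signal: review closely, always in context.")


for s in (12, 49.5, 75, 90, 98):
    band, action = interpret_score(s)
    print(f"{s:>5}: {band} - {action}")
```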

Ultimately, AI detection comes down to recognizing patterns and calculating probabilities. By remembering that these scores are informed judgments, not absolute truths, you can use these tools responsibly. Always back up their digital analysis with your own human intelligence.

The Constant Cat-and-Mouse Game of AI Detection

The relationship between AI content generators and the tools built to spot them is a never-ending technological tug-of-war. For every new detection method that comes out, a new way to get around it quickly follows. It's a high-stakes game that defines the entire field.

On one side, you have users deploying adversarial techniques—clever methods designed to fool AI detectors. These tactics intentionally reintroduce the subtle imperfections and human-like randomness that detection algorithms are trained to flag as "not AI." Think of it as digital camouflage for machine-generated content.

On the other side, detector developers are in a constant state of catch-up, updating their models to see through these new tricks. This cycle of adaptation and counter-adaptation is what makes AI detection so challenging.

Common Ways to Fool an AI Detector

To make AI content fly under the radar, people have come up with some go-to strategies. The core idea is to disrupt the clean, statistical patterns of AI-generated text, making it much harder for a detector to find a clear signal.

- Paraphrasing & "Humanizer" Tools: These tools are built specifically to rewrite AI text. They swap out words, restructure sentences, and even add a few intentional grammatical quirks to break up the predictable rhythms that most detectors look for.

- Good Ol' Manual Editing: This is often the most effective method. A human editor can take an AI-generated draft and weave in personal stories, fix clunky phrases, and adjust the flow. This blending of human and machine input can effectively erase the most obvious AI fingerprints.

- "Jailbreaking" the Prompt: You can also trick the AI itself. By giving it prompts like, "Write this in the style of a non-native English speaker" or "Use overly academic vocabulary," you can force it to produce output that doesn't fit the standard patterns detectors are trained on.

These tactics expose a fundamental problem: as AI models get better and better at mimicking all the different ways humans write, the line between what's human and what's machine gets incredibly blurry.

The Codebreaker Analogy: Think of AI generators as codebreakers and detectors as codemakers. Every time the codemaker creates a new, more sophisticated code (a better detection model), the codebreakers work around the clock to crack it (an evasion technique). This forces the codemaker to go back to the drawing board and invent an even stronger code.

How Detectors Are Fighting Back

Developers aren't just sitting back and watching this happen. They're actively working to stay one step ahead, making their own models more resilient and capable of spotting the very tricks designed to fool them.

One of the most powerful countermeasures is using ensemble methods. Instead of betting everything on a single detection algorithm, this approach combines several different models into one. It’s like having a panel of experts review a case; each one brings a unique perspective, and by combining their insights, they arrive at a much more reliable conclusion.
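The simplest form of ensemble is a weighted average of the probabilities produced by several independent detectors. In the sketch below, the function name, the three detector names, their scores, and the weights are all hypothetical; real systems may instead learn how to combine their members (a technique often called stacking).

```python
def ensemble_score(scores, weights=None):
    """Weighted average of several detectors' AI probabilities (each 0-1).

    scores:  {detector_name: probability}
    weights: {detector_name: weight}; defaults to equal weights.
    """
    if not scores:
        raise ValueError("need at least one detector score")
    if weights is None:
        weights = {name: 1.0 for name in scores}
    total_weight = sum(weights[name] for name in scores)
    return sum(scores[name] * weights[name] for name in scores) / total_weight


# Hypothetical panel of three detectors looking at the same document.
scores = {"perplexity_model": 0.92, "stylometry_model": 0.40, "watermark_check": 0.85}
print(round(ensemble_score(scores), 3))
print(round(ensemble_score(scores, {"perplexity_model": 1.0,
                                    "stylometry_model": 0.5,
                                    "watermark_check": 2.0}), 3))
```

Because one member disagrees, the combined score lands below the two confident detectors, which is the moderating effect the panel-of-experts analogy describes; the weights decide how much each expert's opinion counts.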

Developers are also constantly retraining their models on new data, specifically feeding them examples of content that has been run through "humanizer" tools or manually edited. By showing the detector what these evasive tactics look like, they teach it to spot even the most subtle signs of AI generation. The challenges aren't limited to text, either; in fields like software development, learning about detecting and fixing AI-generated code issues is essential for keeping projects secure and functional.

Your Questions About AI Detection, Answered

As more people start using AI detectors, a lot of good questions are popping up. Let's break down some of the most common ones about how these tools really perform day-to-day.

Can AI Detectors Ever Be 100 Percent Accurate?

The short answer is no. You'll never find a detector that's 100% perfect, and it's important to understand why. These tools work on probability, not certainty. They're looking for tell-tale patterns and statistical clues, which means they're essentially making a highly educated guess.

This reality leads to two big challenges:

- False positives: This is when a tool mistakenly flags human work as being AI-generated.

- False negatives: This happens when AI-generated content slips through completely undetected.

A detector's performance hinges on a few things, like how advanced the AI that created the content was, how much a human edited it, and the quality of the data used to train the detector itself.
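One more reason a score is not proof is the base rate. Bayes' rule gives the probability that a flagged document really is AI-generated, given how common AI text is in the pool being checked and the detector's true and false positive rates. All input rates below are made-up assumptions chosen to show the effect, and the function name is hypothetical.

```python
def prob_ai_given_flag(prior_ai, true_positive_rate, false_positive_rate):
    """Bayes' rule: P(AI | flagged) = P(flag | AI) * P(AI) / P(flag)."""
    flagged_ai = true_positive_rate * prior_ai
    flagged_human = false_positive_rate * (1 - prior_ai)
    return flagged_ai / (flagged_ai + flagged_human)


# Same hypothetical detector (catches 95% of AI text, flags 2% of human text)
for prior in (0.50, 0.10, 0.02):
    print(f"{prior:.0%} AI in pool -> P(AI | flagged) = "
          f"{prob_ai_given_flag(prior, 0.95, 0.02):.2f}")
```

With these assumed rates, a flag is right about 98% of the time when half the pool is AI text, about 84% of the time when 10% is, and only about 49% of the time when 2% is.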

Do AI Detectors Work for Languages Other Than English?

Their effectiveness really takes a nosedive once you move away from English. The top-tier detection models have been trained on mountains of English text, so that's where they're sharpest. While many are adding other languages, their accuracy is almost always lower.

Languages have unique grammar, idioms, and sentence structures that can completely throw off a model trained on English. Because of this, you should expect a much higher error rate when you're checking content in other languages. Always check to see if the tool you're using was specifically built for the language you need.

One of the most critical findings from recent studies is that AI detectors often show a bias against non-native English writers. Their writing can sometimes have patterns—like simpler sentence structures or a more formal tone—that algorithms mistake for AI, leading to unfair false positives.

Are AI Detection Tools Biased?

Unfortunately, yes. Bias is a real and well-documented problem in this space. As noted above, the most glaring example is with non-native English speakers. When the model’s idea of "human writing" is based on a narrow, native-English standard, it can easily misinterpret writing styles from different cultural or linguistic backgrounds.

Developers are working hard to fix this. The main strategy is to train their models on much more diverse datasets that reflect a global range of human writing. The aim is to build a more nuanced understanding of what people actually sound like, but it’s still very much a work in progress.

Ready to verify your images with confidence? AI Image Detector offers a free, privacy-first tool to check for AI generation in seconds. Get a clear verdict without uploading your files. Try it now at aiimagedetector.com.