A Guide to User-Generated Content Moderation

At its heart, user-generated content moderation is simply the process of reviewing what people post on your platform—comments, reviews, photos, you name it—to make sure it aligns with your community rules and the law. Think of it as the essential gatekeeper that keeps your online space welcoming and constructive, rather than letting it spiral into chaos.

Why Content Moderation Is Now Essential

Picture your online community as a lively city park. When it's well-cared for, it’s a wonderful place where people connect, share ideas, and build relationships. But if left untended, it quickly gets overrun with litter, arguments break out, and it becomes a place people avoid. That’s the exact challenge brands face online every single day.

User-generated content (UGC) is the fuel for authentic engagement. But letting that content run wild is a risk no one can afford anymore. An unmoderated platform can rapidly become a dumping ground for spam, hate speech, and illegal content, which can wreck your brand's reputation and obliterate user trust.

From Afterthought to Front-and-Center

What used to be a side task handled by an intern is now a critical business function. The sheer volume of content being uploaded every second is mind-boggling, making a proactive moderation plan a matter of survival. The trick is to strike a delicate balance: you want to encourage genuine interaction while shielding your community—and your business—from harm.

Effective content moderation isn't about censorship. It’s about cultivation. Think of it like tending a garden; you have to pull the weeds so the flowers can thrive.

This need has turned moderation into a massive industry. The global market for content moderation services was valued at USD 12.48 billion in 2025 and is expected to balloon to USD 42.36 billion by 2035. This explosive growth, detailed in recent market trend reports, is a direct result of our increasingly digital lives.

A solid moderation strategy isn't just one thing; it rests on several core pillars that work together to turn UGC from a potential risk into a genuine asset.

Why UGC Moderation Is a Business Imperative

Here’s a breakdown of the key drivers that make effective content moderation an absolute necessity for any modern business.

| Pillar | Core Function | Business Impact |
|---|---|---|
| Brand Protection | Shields your brand from being associated with harmful or inappropriate content. | Prevents reputational damage that can alienate customers, investors, and partners. |
| Community Safety | Creates a secure, welcoming space where users feel comfortable sharing and interacting. | Boosts user engagement, builds loyalty, and encourages positive contributions. |
| Legal Compliance | Ensures adherence to complex local and international laws regarding online content. | Mitigates the risk of steep fines, lawsuits, and regulatory penalties. |
| User Experience | Keeps the platform clean and focused on high-quality, relevant content. | Encourages repeat visits, deeper participation, and a stronger community bond. |

Ultimately, these pillars show that investing in robust moderation is a direct investment in your brand's future. It's about building a sustainable, credible, and thriving online presence.

Balancing the Power and Peril of UGC

User-generated content is a genuine game-changer. It can be an incredible source of authentic marketing that builds trust and drives growth. But there's a flip side. If left unchecked, it also holds the potential for serious brand damage, legal headaches, and a toxic community.

The solution isn't to shy away from UGC. It's to manage it with a smart, proactive strategy.

Think of your brand's online presence as a community garden. The most vibrant gardens don't just spring up on their own; they're carefully tended. The gardener's job is to cultivate the healthy plants while diligently pulling the weeds that would otherwise choke them out. User-generated content moderation is that essential gardening work for your digital community.

The Immense Power of Authentic Content

The upside of UGC is impossible to ignore. When a customer posts a glowing review, shares a photo of themselves loving your product, or writes a helpful comment, it’s a powerful, third-party endorsement. This kind of authentic content connects with people in a way that polished, brand-created advertising just can't.

And the numbers prove it. Nearly 84% of consumers say they trust UGC far more than traditional branded content. This trust translates directly into sales; brands that embed customer photos on their product pages see a 29% higher conversion rate. This data clearly shows how real peer content is a major factor in purchasing decisions, as highlighted in reports on UGC campaign effectiveness.

But the power of UGC goes beyond the bottom line. It creates a sense of community, making customers feel heard and valued. This engagement builds a loyal following that is far more resilient than one built on ad spend alone.

Uncovering the Hidden Perils

Without a moderation plan, that beautiful garden can quickly get overrun with weeds. The dangers of unmoderated UGC are severe and can pop up in several damaging ways. Failing to manage this content isn't just a minor oversight; it's a major business risk that can undo years of hard work.

Here are the primary dangers you face:

- Brand Reputation Damage: A single hateful comment, spammy post, or piece of misinformation can tarnish your brand’s image, tying it to toxicity and a lack of control.

- Community Decay: When harmful content is allowed to fester, your best contributors leave. The space becomes dominated by negativity, driving away the very users you want to attract.

- Legal and Compliance Risks: Platforms can be held liable for illegal content, such as defamation, copyright infringement, or harassment. Ignoring these issues can lead to costly lawsuits and fines.

- Erosion of Trust: A platform that feels unsafe or unreliable will quickly lose its users' trust. And once trust is broken, it’s incredibly difficult to win back.

Moderation isn't about silencing voices; it's about setting the stage for productive conversations. It ensures that the most valuable, positive, and authentic content can be seen and heard above the noise.

By understanding both the incredible benefits and the serious risks, it becomes clear why a thoughtful user-generated content moderation strategy isn't just a defensive move. It's an active cultivation of your brand's most valuable asset: its community. This is especially true for visual content, where maintaining trust often involves ensuring the authenticity of user-submitted images. You can learn more about using images for authenticity in our detailed guide.

Navigating Today's Legal and Ethical Maze

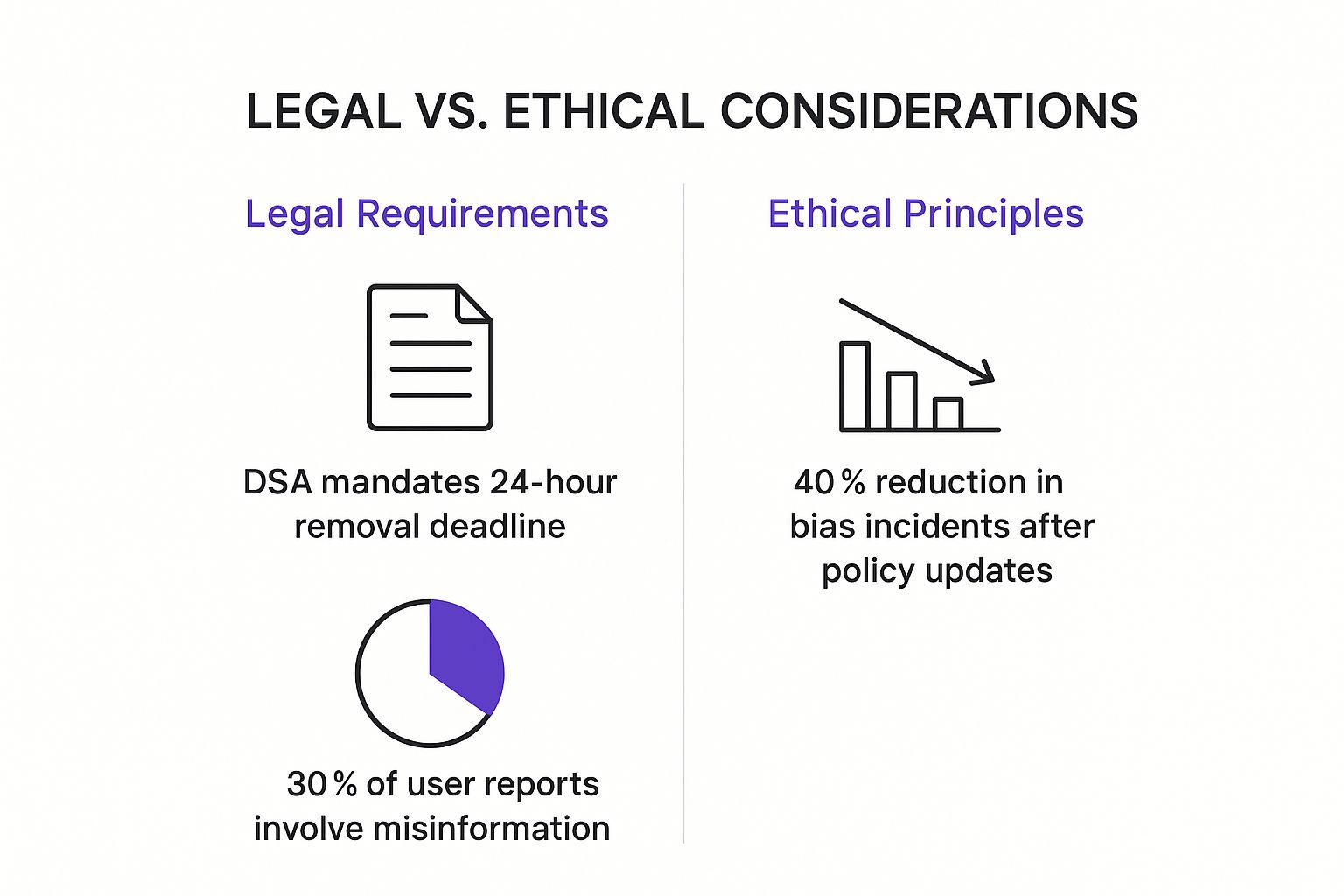

Handling user-generated content moderation is no longer just about cleaning up a few comments. It’s about finding your way through a dense and ever-changing landscape of legal obligations and ethical minefields. As online platforms have become the new public square, governments are stepping in with stricter rules to keep users safe.

This shift has turned content moderation from a simple brand safety task into a critical matter of legal compliance.

Major regulations are already changing the game. The EU's Digital Services Act (DSA) and the UK's Online Safety Act now require platforms to assess risks and report on them in detail, with the largest services also facing annual audits, and both laws carry serious financial penalties. In the UK, fines for getting it wrong can reach £18 million or 10% of global turnover, whichever is greater.

Add to that the explosion of AI-generated deepfakes and research suggesting that false news can spread about six times faster than the truth, and it's clear: effective moderation isn't just good practice, it's a survival strategy.

What Is Your "Duty of Care"?

At the core of these new laws is a concept called "duty of care." It’s a simple but powerful idea: platforms are now legally required to think ahead about potential harm to their users and take reasonable steps to prevent it. It's not enough to just clean up a mess after someone reports it. You have to proactively build safety into the very design of your platform.

The European Commission's overview of the Digital Services Act sums up the principles behind it: the DSA is about creating a safer digital world where people's rights are protected and businesses can compete fairly.

So what does this mean for you in practical terms? Here are some of the new ground rules:

- Risk Assessments: You have to regularly look for and analyze systemic risks. This could be anything from the spread of illegal content to coordinated disinformation campaigns targeting your users.

- Transparency Reports: Platforms must now publicly report on their moderation efforts. This includes how much content they're removing and how accurate their automated tools are.

- User Empowerment: Your users need simple, clear ways to report illegal content. They also need to be able to appeal your decisions and understand exactly why their post was taken down.
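To make the transparency-report item more concrete, here is a minimal Python sketch of how removal counts and automated-tool accuracy might be tallied from a decision log. The `Decision` record and its fields are hypothetical, and "accuracy" is assumed here to mean the share of automated removals that were not overturned on appeal; a real report would follow whatever definitions the applicable regulation prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    """One moderation decision (hypothetical log record)."""
    removed: bool     # was the content taken down?
    automated: bool   # was the decision made by an automated tool?
    overturned: bool  # was the decision reversed on appeal?


def transparency_summary(log: list[Decision]) -> dict[str, float]:
    """Tally removals and estimate how often automated removals held up."""
    removals = [d for d in log if d.removed]
    auto_removals = [d for d in removals if d.automated]
    upheld = [d for d in auto_removals if not d.overturned]
    # Avoid dividing by zero when no automated removals were logged.
    accuracy = len(upheld) / len(auto_removals) if auto_removals else 0.0
    return {
        "total_removals": len(removals),
        "automated_removals": len(auto_removals),
        "automated_accuracy": accuracy,
    }


# Illustrative data only; not real platform figures.
log = [
    Decision(removed=True, automated=True, overturned=False),
    Decision(removed=True, automated=True, overturned=True),
    Decision(removed=True, automated=False, overturned=False),
    Decision(removed=False, automated=True, overturned=False),
]
summary = transparency_summary(log)
print(summary)
```

Keeping the appeal outcome on the same record as the original decision is one simple way to let the same log serve both the transparency report and the appeals workflow.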

The Ethical Tightrope Walk

Once you've checked all the legal boxes, you're still left with a huge gray area of ethical challenges. Staying compliant is the bare minimum. Building a community people actually trust means wrestling with tough questions that don't have easy answers.

This is where your community guidelines stop being a legal document and start becoming a statement of your company's values.

One of the trickiest ethical hurdles is deciding what to do with content that's "harmful" but not technically illegal. A comment might be deeply offensive or upsetting, but it doesn't break any laws. Finding the right balance here is everything. Moderate too heavily, and you'll be accused of censorship and stifling free speech. Too little, and your platform can quickly become a toxic swamp that drives good users away.

Another huge ethical blind spot is cultural bias. If your moderation policies are written from a single cultural viewpoint, you're going to make mistakes. Slang, satire, and cultural in-jokes can be completely lost on AI models and even human moderators who don't have the right context. This can lead to unfairly penalizing users from different backgrounds.

Ultimately, getting through this maze means being diligent about the law while also being willing to do some serious ethical soul-searching. It’s about creating a system that isn't just compliant, but also fair, transparent, and genuinely dedicated to fostering a healthy community.

Designing Your Moderation Workflow

Figuring out the right system for user-generated content moderation is a lot like planning the traffic flow for a new city. A quiet suburban street doesn't need a complex system of traffic lights, but a major downtown intersection would be chaos with just a single stop sign.

It's all about matching the solution to the situation. The ideal moderation workflow for your platform depends entirely on factors like your community's size, the kind of content people share, and how much risk you're willing to accept. There’s no single blueprint that works for everyone. Instead, you'll need to pick from a few core models—or more likely, blend them together—to build a system that truly fits.

https://www.youtube.com/embed/JLDqvOYi-GY

Understanding the Four Core Moderation Models

Every moderation model strikes a different balance between speed, community safety, and scalability. Getting a handle on how each one works is the first step toward building a workflow that protects your users without shutting down the conversation.

Let's break down the four main approaches you can take.

Pre-Moderation (The Gatekeeper): Think of this as a security checkpoint. Every single piece of content a user submits gets reviewed by a moderator before it's allowed to go live. Nothing sees the light of day until it gets a green light, offering the absolute highest level of control.

Post-Moderation (The Lifeguard): In this model, content is published instantly for everyone to see, and moderators review it afterward to catch anything that breaks the rules. This approach is great for encouraging fast-paced, real-time interaction, but it comes with the risk that someone might see harmful content before it’s taken down.

Reactive Moderation (The Neighborhood Watch): This approach leans on your community to be the first line of defense. Users can flag or report content they find inappropriate. Once a post hits a certain number of reports, it gets bumped into a queue for a professional moderator to review. It’s a great way to empower your community, but it shouldn't be your only strategy.

Automated Moderation (The Robot Sentry): This method uses AI to automatically scan and filter content the moment it's submitted. It's incredibly effective at blocking obvious violations like spam, profanity, or banned links in real-time. While it's lightning-fast and scales beautifully, it can sometimes miss the nuance of human conversation, like sarcasm or satire.
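The report-threshold idea behind reactive moderation can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a production design: the `ReportTracker` class, the threshold of three reports, and the choice to count each reporting user only once are all hypothetical.

```python
from collections import defaultdict

REPORT_THRESHOLD = 3  # assumed value; tune for your community


class ReportTracker:
    """Counts distinct reporters per post and queues posts for human review."""

    def __init__(self, threshold: int = REPORT_THRESHOLD) -> None:
        self.threshold = threshold
        self.reporters: dict[str, set[str]] = defaultdict(set)
        self.review_queue: list[str] = []

    def report(self, post_id: str, user_id: str) -> bool:
        """Record a report; return True if the post is queued for review."""
        # A set ignores repeat reports from the same user.
        self.reporters[post_id].add(user_id)
        reached = len(self.reporters[post_id]) >= self.threshold
        if reached and post_id not in self.review_queue:
            self.review_queue.append(post_id)
        return post_id in self.review_queue


tracker = ReportTracker()
tracker.report("post-1", "alice")
tracker.report("post-1", "alice")  # duplicate, not counted twice
tracker.report("post-1", "bob")
queued = tracker.report("post-1", "carol")
print(queued, tracker.review_queue)
```

Counting distinct reporters rather than raw reports is one way to make it harder for a single user to push a post into the queue alone.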

Choosing the Right Model for Your Platform

In the real world, the most effective workflows are almost always a hybrid of these models. For example, a platform might use automated moderation to instantly zap spam, lean on post-moderation for general discussion forums, and switch to strict pre-moderation for more sensitive areas, like user profiles or content sections for children.
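A hybrid setup like the one described above might be wired together roughly as follows. This is a minimal sketch: the section names, the `SECTION_MODES` mapping, and the phrase-based `looks_like_spam` check are all hypothetical stand-ins, and a real platform would call an actual classifier instead.

```python
from enum import Enum


class Mode(Enum):
    PRE = "pre-moderation"    # hold until a moderator approves
    POST = "post-moderation"  # publish now, review afterward


# Hypothetical mapping of platform sections to review modes.
SECTION_MODES = {
    "forum": Mode.POST,
    "profile": Mode.PRE,
    "kids": Mode.PRE,
}

# Stand-in for a real spam classifier; these phrases are illustrative only.
SPAM_MARKERS = ("buy now", "free money")


def looks_like_spam(text: str) -> bool:
    lowered = text.lower()
    return any(marker in lowered for marker in SPAM_MARKERS)


def route(section: str, text: str) -> str:
    """Return 'blocked', 'held', or 'published' for a new submission."""
    if looks_like_spam(text):
        return "blocked"  # the automated layer runs first
    # Unknown sections default to the stricter mode.
    mode = SECTION_MODES.get(section, Mode.PRE)
    return "held" if mode is Mode.PRE else "published"


print(route("forum", "Great thread, thanks!"))
print(route("profile", "Hi, I'm new here"))
print(route("forum", "FREE MONEY, click here"))
```

Defaulting unknown sections to pre-moderation is a deliberately cautious choice in this sketch; a platform that prioritizes speed might default the other way.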

The goal isn't just to catch bad content; it's to create a predictable, fair, and transparent environment. Your workflow is the engine that drives this, working behind the scenes to maintain community health at scale.

To help you figure out the best fit, let's look at how these models stack up against each other.

Comparison of Content Moderation Models

This table breaks down the four main strategies, highlighting their ideal use cases and the key trade-offs you'll need to consider.

| Moderation Model | How It Works | Best For | Pros | Cons |
|---|---|---|---|---|
| Pre-Moderation | Content is reviewed before publishing. | High-risk communities (e.g., kids' sites), legal or medical forums. | Maximum safety; prevents harmful content from ever appearing. | Slow; can kill real-time conversation and requires significant resources. |
| Post-Moderation | Content is published first, then reviewed. | Large, fast-moving communities like social media feeds. | Fast and engaging; encourages spontaneous interaction. | Risk of users seeing harmful content before removal. |
| Reactive Moderation | Relies on users reporting bad content. | Platforms with highly engaged and trustworthy user bases. | Low cost; empowers the community to self-police. | Ineffective on its own; harmful content can be missed entirely. |
| Automated Moderation | AI filters content based on rules. | High-volume platforms needing to manage spam and clear violations at scale. | Instant, 24/7 coverage; extremely scalable and cost-effective. | Lacks human nuance; prone to errors with context-heavy content. |

Ultimately, designing your workflow comes down to making strategic trade-offs. It's a balancing act. By clearly defining your community guidelines, establishing a solid process for handling tricky cases, and finding the right mix of human insight and AI efficiency, you can build a robust user-generated content moderation process that grows right alongside your platform.

Putting AI to Work in Content Moderation

How can any platform possibly keep up with millions of daily posts, comments, and images without an impossibly large army of people? The short answer is artificial intelligence. AI has become the essential first line of defense in modern user-generated content moderation, acting as a tireless digital sentry that works 24/7.

Think of AI as a high-speed filter for your platform's content. It can instantly analyze text for obvious policy violations like profanity or spam and scan images for graphic material before a human ever has to see it. This automation is what makes moderation at scale even possible.

This whole process is built on powerful tech that learns to recognize the patterns of harmful content. Two of the most important tools in the box are Natural Language Processing (NLP) for text and computer vision for images.

How AI Learns to Moderate Content

AI models aren't just given a simple list of "bad words" and sent on their way. Instead, they’re trained on enormous datasets packed with millions of examples of both acceptable and unacceptable content.

- Natural Language Processing (NLP): This is the technology that lets machines understand the meaning and feeling behind human language. A good NLP model can be trained to spot not just specific keywords, but the actual context of hate speech, bullying, or harassment.

- Computer Vision: This is the field of AI that trains computers to interpret and understand the visual world. For moderation, computer vision models can identify nudity, violence, or other banned imagery by analyzing the pixels, shapes, and objects within a photo or video.

Through this kind of training, the AI learns to flag content that breaks community guidelines with incredible speed, handling the bulk of the moderation workload on its own. This frees up human experts to focus on the tricky stuff.
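To see why a simple list of "bad words" falls short, consider this small Python sketch of a naive blocklist filter. The blocked term and the sample messages are made up for illustration; the point is only that exact word matching both over-flags and under-flags.

```python
BLOCKED_TERMS = {"scam"}  # hypothetical blocklist


def naive_filter(text: str) -> bool:
    """Return True if any blocked term appears as a whole word."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    return not BLOCKED_TERMS.isdisjoint(words)


# False positive: a helpful warning gets flagged.
warning_flagged = naive_filter("Careful, that link is a scam!")

# False negative: a lightly obfuscated pitch slips through.
pitch_flagged = naive_filter("Totally legit sc4m-free offer, click now")

print(warning_flagged, pitch_flagged)
```

A trained model can, in principle, weigh the surrounding context in both cases, which is what the keyword approach cannot do.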

The Human-in-the-Loop Imperative

For all its power, AI is not a silver bullet. It’s an amazing tool, but one with clear limitations. Automated systems often get tripped up by the very things that make human communication so rich and complex.

AI can easily miss sarcasm, fail to grasp evolving cultural nuances, or completely misinterpret satire. A comment like, "That's just great," could be genuine praise or dripping with sarcasm—a distinction that often needs a human's judgment. This is exactly why the most effective moderation strategies always involve a human-in-the-loop.

The best moderation systems use both: AI for speed and scale, and trained human reviewers for the gray areas. AI becomes the partner that handles the heavy lifting, not a replacement for human oversight.

This hybrid model creates a powerful partnership. The AI tackles the high-volume, clear-cut violations, while human moderators manage appeals, review flagged content that the AI is unsure about, and make the final call on sensitive edge cases.
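One common way to implement this split is confidence-based triage: the model's score decides whether content is handled automatically or sent to a person. The sketch below assumes a model that returns a violation score between 0.0 and 1.0; the function name and both thresholds are hypothetical and would need tuning against real data.

```python
AUTO_REMOVE_AT = 0.95   # assumed: at or above this, remove automatically
AUTO_APPROVE_AT = 0.10  # assumed: at or below this, publish automatically


def triage(violation_score: float) -> str:
    """Map a model's violation score (0.0 to 1.0) to an action."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("score must be between 0.0 and 1.0")
    if violation_score >= AUTO_REMOVE_AT:
        return "remove"
    if violation_score <= AUTO_APPROVE_AT:
        return "approve"
    # Everything in between is a gray area for a person to judge.
    return "human_review"


for score in (0.99, 0.50, 0.02):
    print(score, triage(score))
```

Widening the gap between the two thresholds sends more content to human reviewers, trading cost for fewer automated mistakes.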

AI in Visual Content Moderation

Visual moderation is one of the toughest challenges, but it's also where AI delivers some of its biggest wins. Automated systems can pre-screen uploads to stop graphic content from ever appearing on a platform, protecting both users and human moderators from exposure.

For instance, advanced models can even analyze images for subtle artifacts that might indicate they were created by AI, a critical step in the fight against misinformation. You can get a much deeper look into what AI detectors look for in images to really understand how this technology works.

Ultimately, bringing AI into your user-generated content moderation workflow isn't about getting rid of people. It's about making them more effective. By letting AI handle the repetitive, high-volume tasks, you allow your human team to apply their unique skills where they matter most: understanding context, showing empathy, and practicing the complex art of building a safe and thriving community.

Actionable Best Practices for a Healthy Community

A solid user-generated content moderation strategy can turn your community from a potential minefield into your greatest asset. It all comes down to a foundation of clear rules, consistent enforcement, and a real commitment to the well-being of your users and moderators.

By putting a few core principles into action, you’ll build a system that doesn't just manage risk—it actively grows a positive, vibrant, and loyal online space. Here’s how you get it right.

Establish Crystal-Clear Community Guidelines

Think of your content guidelines as the constitution for your community. They can't be buried in pages of dense legalese. Write them in plain, simple language and provide concrete examples of what's okay and what's not.

Above all, make these rules easy to find. When users don't have to guess, they feel empowered to participate positively. This transparency gives your moderators a solid framework to work from and builds a massive amount of trust with your user base.

Adopt a Multi-Layered Moderation Strategy

Putting all your eggs in one basket is a recipe for disaster. The best moderation systems blend the brute-force speed of AI with the irreplaceable nuance of human judgment.

Let AI do the heavy lifting—filtering out obvious spam, hate speech, and graphic content in the blink of an eye. This frees up your human team to tackle the tricky gray areas that demand cultural context and critical thought.

This hybrid approach really gives you the best of both worlds:

- AI-First Filtering: Catches the low-hanging fruit and clear violations at a scale no human team could match.

- Human Review: Steps in for complex cases, appeals, and content that needs a real person to understand the context.

- Community Reporting: Turns your user base into an extra set of eyes, empowering them to flag content that automated systems might miss.

Invest in Your Moderation Team

Your human moderators are on the front lines, and they often see the worst of what your platform has to offer. Looking after their mental health isn't just the right thing to do; it's a business imperative. A supported team is an effective team.

Providing robust mental health resources, ongoing training, and clear paths for escalating difficult content is non-negotiable. A burned-out team can't protect your community.

Create a Fair and Transparent Appeals Process

Mistakes are going to happen. It's inevitable. Both AI and human moderators will get it wrong sometimes. A simple, easy-to-find appeals process demonstrates a commitment to fairness that users deeply appreciate.

When people feel like they have a voice and can challenge a decision, it builds faith in your platform, even if they don't agree with every call. This kind of transparency is vital for long-term community health and for validating content authenticity, a topic we dive into in our guide on how to check if a photo is real.

Answering Your Content Moderation Questions

As you start to think seriously about user-generated content moderation, a few big questions always pop up. It's totally normal. From figuring out a budget to writing the rules of the road for your community, getting these answers straight is the first step to building a system that actually works without becoming a massive headache.

Let's walk through some of the most common questions teams have when they first dive into moderating their platforms. Nailing these fundamentals from the start will save you a ton of time and money later.

How Much Does Content Moderation Cost?

This is the big one, and the honest answer is: it depends. The price tag for moderation can swing wildly based on how much content you're dealing with, what kind of content it is, and the moderation model you put in place.

For a small business just getting started, a few hundred dollars a month might be enough for a basic AI tool to do some initial filtering. On the other end of the spectrum, a massive social network could easily spend millions on round-the-clock human moderation teams spread across the globe. Your main costs will break down into AI software subscriptions, salaries and training for human moderators, and—crucially—mental wellness programs to support those on the front lines.

The sweet spot for most businesses is a hybrid model. Let AI handle the first pass to catch the low-hanging fruit (obvious spam, hate speech, etc.), and then escalate the tricky, nuanced cases to your human team. This approach gives you the best bang for your buck, balancing cost with quality.

How Do I Create Great Community Guidelines?

Think of your community guidelines as the constitution for your platform. To be effective, they need to be clear, direct, and impossible for users to miss.

Start by thinking about the vibe you want for your community. What's its purpose? Then, write your rules in plain English. Ditch the corporate jargon and use real-world examples to show what you mean by things like harassment, spam, or hate speech. It’s also vital to be upfront about the consequences—what happens when someone breaks the rules? It could be anything from a simple warning to a permanent ban.
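The range of consequences, from a simple warning to a permanent ban, is often implemented as an escalation ladder. Here is a minimal Python sketch; the specific rungs in `LADDER` and the rule of moving up one step per prior violation are assumptions chosen for illustration, not a recommended policy.

```python
# Hypothetical ladder of consequences, mildest first.
LADDER = ["warning", "24-hour mute", "7-day suspension", "permanent ban"]


def next_action(prior_violations: int) -> str:
    """Pick the consequence for a new violation given the user's history."""
    if prior_violations < 0:
        raise ValueError("prior_violations cannot be negative")
    # Clamp so repeat offenders stay on the final rung.
    step = min(prior_violations, len(LADDER) - 1)
    return LADDER[step]


for count in range(5):
    print(count, next_action(count))
```

Publishing a ladder like this alongside your guidelines tells users in advance what each violation will cost them, and gives moderators a consistent rule to apply.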

The three pillars of good guidelines are:

- Clarity: Write in simple, direct language that leaves no room for confusion.

- Visibility: Put them everywhere. A link in the footer, a step in the sign-up process—make them easy to find.

- Consistency: This is about building trust. Your team has to apply the rules fairly to everyone, every time.

Can a Small Business Manage Moderation In-House?

Yes, absolutely. For many small businesses, handling user-generated content moderation in-house is not only possible but often the best way to start. A reactive moderation model is a great entry point; you can rely on your platform's built-in filters and, most importantly, on your users to report content that crosses the line.

As your community gets bigger, you might want to introduce pre-moderation for specific areas, like customer reviews or user profiles, where the stakes are a bit higher. The real key is making sure someone (or a small, dedicated team) officially owns moderation and has a clear policy to follow so they can make consistent decisions. When you reach a point where the volume is just too much to handle, that's the perfect time to look into affordable AI tools or specialized moderation services to help you scale up.
