Your Guide to Using a Fake News Detector

So, what exactly is a fake news detector? It's not one single magic button. Think of it more like a digital detective's toolkit, packed with specialized gadgets designed to analyze everything from text and images to video and audio for signs of manipulation.

This toolkit uses technologies like AI and data analysis to hunt for clues—emotional language, tell-tale signs of image forgery, and questionable sources. At its core, it’s built to help you separate credible information from the noise.

Understanding the Digital Detective Kit

Let's stick with that detective kit analogy. Inside, you don't just have one tool; you have a team of experts. One specializes in spotting emotional triggers in headlines. Another is a photo forensics expert, checking for subtle digital edits. A third is a meticulous researcher, cross-referencing claims against a library of trusted sources. Together, they automate the heavy lifting of content verification.

In a world where we’re all swimming in information, these tools have become essential. They’re no longer just for journalists or researchers; they're for anyone trying to make sense of their social media feed. Manually fact-checking every single post is simply impossible, which is where automated help becomes a necessity.

Why Automated Detection Is Crucial

False stories move at the speed of a share. A single fabricated image or a sensationalized headline can circle the globe in hours, long before a human fact-checker has even had their morning coffee. A fake news detector is our first line of defense, offering a near-instant analysis to flag suspicious content before it gains unstoppable momentum.

This technology gives us a way to tackle a global problem at scale. While a human expert's final say is still the gold standard, these detectors empower everyone by offering:

- Speed: Getting an initial assessment in seconds, not days.

- Scale: Handling thousands of articles, images, or posts at once.

- Consistency: Applying the same rigorous rules to every piece of content.

Modern detectors are surprisingly sophisticated, examining content from multiple angles to catch deception. The table below breaks down some of the most common methods they use.

Key Functions of a Modern Fake News Detector

| Detection Method | What It Analyzes | Common Use Case |
|---|---|---|
| Image Forensics | Pixel patterns, EXIF data, compression artifacts, and AI signatures. | Detecting a photoshopped image or a deepfake video. |
| Metadata Analysis | File creation dates, author information, and location data. | Flagging an old photo being passed off as a recent event. |
| Natural Language Processing | Emotional tone, sensational language, grammar, and unusual phrasing. | Identifying clickbait headlines and emotionally manipulative text. |
| Cross-Referencing | Claims, names, and statistics against trusted news archives and databases. | Verifying if a shocking "quote" was ever actually said. |

Each of these functions provides a different piece of the puzzle, giving users a much clearer picture of whether the content they're looking at is trustworthy.

A Rapidly Expanding Market

The demand for reliable verification tools is booming. The industry for detecting AI-generated and manipulated content is on track to become a multi-billion dollar sector by the early 2030s. Some analysts are forecasting incredible growth rates between 19% and 42%, pushing the market's value to somewhere between $4.9 billion and $7.3 billion by 2032. You can read more about this explosive growth in market analysis reports.

This massive investment is fueling a new generation of fast, privacy-first tools built for media outlets, social media platforms, and corporate compliance teams who need answers now.

A modern fake news detector is more than a simple fact-checker; it's a multi-faceted analytical engine. It evaluates content through various lenses, from linguistic patterns and source reputation to the technical artifacts left behind by digital editing tools.

By piecing together clues that are often invisible to the naked eye, a good detector provides the evidence needed to make an informed call on a piece of content's authenticity. It strengthens our collective ability to push back against the tide of misinformation.

How AI Deciphers Misinformation in Text

While a doctored image might be what catches your eye, it's the text wrapped around it that often does the heavy lifting in a misinformation campaign. A truly effective fake news detector doesn't just skim for keywords; it digs deep into the language itself, using a field of AI known as Natural Language Processing (NLP).

Think of an NLP model as a linguist that has read the entire internet. It’s been trained on billions of sentences, so it understands the subtle dance of language—context, sarcasm, tone, and the emotional weight of words. It doesn't just see words on a page; it understands the complex relationships between them.

This is huge because it allows the detector to analyze the style of the writing, which is often the biggest giveaway. Fake stories are almost always engineered to trigger an emotional reaction, hoping you'll feel before you think.

Spotting the Tell-Tale Signs in Language

An AI text analyzer is trained to recognize the fingerprints of manipulative content. It’s not about policing opinions, but about flagging objective, measurable characteristics that are far more common in propaganda than in real journalism.

Here are a few key red flags an AI is looking for:

- Sensational and Emotional Language: Words intended to provoke anger, fear, or excitement are classic tells. An AI can literally score the emotional intensity of an article.

- Overly Simplistic or Absolute Claims: You’ll often see phrases like "everyone knows" or "it's just a fact." These are shortcuts used to assert an opinion as an undisputed truth without needing evidence.

- Vague Sourcing: Misinformation loves to attribute claims to "top experts" or "a recent study" without ever naming them. An AI picks up on this lack of specificity immediately.

- Grammar and Spelling Mistakes: While not a dealbreaker on its own, a high number of errors often suggests a lack of professional editing, which is standard practice for any credible news source.
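To make the red flags above a little more concrete, here is a minimal sketch of how some of them could be counted in plain Python. The word lists and the `red_flag_report` function are hypothetical and chosen only for illustration; a production detector would rely on trained language models, not a handful of keywords, and grammar checking is left out entirely.

```python
import re

# Hypothetical word and phrase lists, for illustration only.
EMOTIONAL_WORDS = {"shocking", "outrage", "terrifying", "disaster", "unbelievable", "destroyed"}
ABSOLUTE_PHRASES = ("everyone knows", "it's just a fact", "no one can deny")
VAGUE_SOURCES = ("top experts", "a recent study", "experts say", "a source revealed")


def red_flag_report(text: str) -> dict:
    """Count a few simple, measurable red flags in a piece of text."""
    # Lowercase and normalize curly apostrophes so phrase matching is consistent.
    lowered = text.lower().replace("\u2019", "'")
    words = re.findall(r"[a-z']+", lowered)
    emotional_hits = sum(1 for word in words if word in EMOTIONAL_WORDS)
    return {
        # Share of words that come from the emotional word list.
        "emotional_ratio": emotional_hits / len(words) if words else 0.0,
        # Phrases that assert an opinion as undisputed truth.
        "absolute_claims": sum(lowered.count(p) for p in ABSOLUTE_PHRASES),
        # Attributions that never name an actual source.
        "vague_sources": sum(lowered.count(p) for p in VAGUE_SOURCES),
        "exclamation_marks": text.count("!"),
    }


sample = "SHOCKING! Everyone knows the city was destroyed, top experts agree."
report = red_flag_report(sample)
print(report)
```

None of these counts proves anything on its own. They are signals to weigh alongside the source and the claims themselves.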

Going Deeper with Sentiment and Stance

Beyond these basics, a sophisticated fake news detector relies on two powerful NLP techniques: sentiment analysis and stance detection.

Sentiment analysis is all about gauging the emotional tone. Is the article objective and balanced, or does it read like an angry tirade? A strongly one-sided tone in something presented as a straight news report is a warning sign of bias, though it is not proof that the story is made up.

Stance detection takes this a step further. It figures out the author’s position—their stance—on a specific topic or claim. The AI can tell if the writer is supporting an idea, refuting it, or just reporting on it. This is how it can distinguish a factual news story from an opinion piece masquerading as one.

For instance, an article could mention a verifiable fact but surround it with language that completely twists its meaning. Stance detection uncovers this by checking for consistency. If a headline screams a sensational claim but the article itself fails to back it up, the detector flags that contradiction.
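The headline-versus-body check can be approximated with a very crude stand-in: measure how many of the headline's key words ever show up in the article. This is a word-overlap heuristic, not real stance detection (which uses trained models), and the stopword list and function names below are assumptions made for the example.

```python
import re

# A tiny, hypothetical stopword list; real systems use far larger ones.
STOPWORDS = {"a", "an", "the", "of", "in", "on", "to", "is", "are", "and", "for", "by", "with"}


def content_words(text: str) -> set:
    """Lowercased words from the text, minus common filler words."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def headline_support(headline: str, body: str) -> float:
    """Fraction of the headline's content words that also appear in the body."""
    head = content_words(headline)
    if not head:
        return 0.0
    return len(head & content_words(body)) / len(head)


headline = "Mayor bans all cars downtown"
body = "The mayor proposed a pilot program to limit cars on two downtown streets."
support = headline_support(headline, body)
print(f"{support:.2f}")  # the words "bans" and "all" never appear in the body
```

A low score does not mean the story is false. It simply tells a reviewer that the headline promises something the text may not deliver.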

If you're curious about the mechanics behind this, you can learn more from our guide on what AI detectors look for when scanning different kinds of content.

Unmasking Deception in a Visual World

A fake news story can warp public opinion, but a single doctored image can set it on fire. We process visuals almost instantly, often before our critical thinking kicks in, which makes them a powerful weapon for anyone looking to spread a false narrative. This is exactly why a specialized fake news detector for images is no longer a nice-to-have—it's an essential part of the verification toolkit.

Think of this technology as a digital forensics lab for pictures. It doesn't just glance at an image; it gets down to the pixel level, hunting for the tell-tale fingerprints of forgery that are completely invisible to the naked eye. It’s like having a superpower that lets you see the hidden language of pixels.

This kind of analysis goes way beyond spotting clumsy Photoshop jobs. The best tools can uncover tiny, almost imperceptible inconsistencies in lighting, shadows, and textures that give away an AI-generated or manipulated image.

Digital Forensics for Images

Two of the classic methods in image verification are Error Level Analysis (ELA) and a deep dive into an image's metadata. ELA is a fascinating technique that works by spotting differences in an image's compression levels. When someone edits a photo, the altered parts often have a different compression signature than the original sections, and ELA makes these differences pop.
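As a rough sketch of the ELA idea, the snippet below re-saves an image as a JPEG at a known quality and measures how much each pixel changes. It assumes the Pillow library is installed; the function names and the quality setting of 90 are illustrative choices, not the settings of any particular detector.

```python
import io

from PIL import Image, ImageChops


def error_level_analysis(image: Image.Image, quality: int = 90) -> Image.Image:
    """Return the per-pixel difference between an image and a JPEG re-save of it."""
    rgb = image.convert("RGB")
    buffer = io.BytesIO()
    # Re-compress in memory at a known quality level.
    rgb.save(buffer, format="JPEG", quality=quality)
    buffer.seek(0)
    resaved = Image.open(buffer).convert("RGB")
    # Bright areas in the result changed the most during re-compression.
    return ImageChops.difference(rgb, resaved)


def max_error_level(image: Image.Image, quality: int = 90) -> int:
    """Largest per-channel difference (0 to 255) found anywhere in the image."""
    diff = error_level_analysis(image, quality)
    # getextrema() returns one (min, max) pair per colour band.
    return max(high for _low, high in diff.getextrema())
```

In practice the difference image is usually brightened and inspected by eye, because what matters is whether one region stands out from its surroundings, not a single number for the whole picture.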

Metadata, most commonly stored in the EXIF format, is like an image's digital birth certificate. It stores details like the camera model used to take the shot, the exact date and time it was taken, and sometimes even GPS coordinates. A fake news detector sifts through this data to see if the story being told actually lines up with the image's history. For instance, if a photo claims to show a protest from yesterday but its metadata reveals it was taken five years ago, the whole narrative crumbles.
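Here is a minimal sketch of that date check. It assumes the metadata has already been extracted into a dictionary and that the capture time sits under the EXIF `DateTimeOriginal` tag, which uses the `YYYY:MM:DD HH:MM:SS` layout. The function name and the one-day tolerance are hypothetical.

```python
from datetime import date, datetime
from typing import Optional

# EXIF timestamps use colons in the date part, e.g. "2019:06:01 14:30:00".
EXIF_DATETIME_FORMAT = "%Y:%m:%d %H:%M:%S"


def capture_date_conflict(metadata: dict, claimed_date: date,
                          tolerance_days: int = 1) -> Optional[bool]:
    """True if the capture date contradicts the claim, None if no date is stored."""
    raw = metadata.get("DateTimeOriginal")
    if raw is None:
        return None  # Missing metadata is not evidence either way.
    captured = datetime.strptime(raw, EXIF_DATETIME_FORMAT).date()
    return abs((claimed_date - captured).days) > tolerance_days


old_photo = {"DateTimeOriginal": "2019:06:01 14:30:00"}
print(capture_date_conflict(old_photo, date(2024, 6, 1)))  # True
```

Keep in mind that metadata can be edited, and many platforms strip it on upload, so a matching date supports a claim without proving it.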

Visual misinformation is a major driver of trust and safety incidents online. Market analysis shows that threats combining text and images are a high priority for detection vendors. This has led to a sharp increase in demand for image verification tools in newsrooms and a notable rise in enterprise spending on this technology across North America, Europe, and Asia. Scalable, cloud-based detectors are growing fastest as organizations need to process millions of images without retaining user data. You can explore more data on the growth of the fake image detection market.

These foundational techniques are still incredibly useful, but they’re now being supercharged by artificial intelligence, paving the way for a new generation of powerful verification tools.

The Rise of AI-Powered Image Verification

Modern tools like the AI Image Detector bring sophisticated AI models to the fight, performing a much deeper and more nuanced analysis. Instead of just checking compression levels or metadata, these AI systems have been trained on massive datasets containing millions of real and AI-generated images. Through this training, they learn to recognize the subtle artifacts and digital fingerprints that different AI models leave behind.

These digital clues often include:

- Unnatural Textures: AI can still stumble when trying to render perfectly realistic skin, hair, or the texture of fabric.

- Inconsistent Lighting: You might see shadows that don't quite match the light sources in the scene.

- Geometric Flaws: Keep an eye out for strange, repeating patterns in backgrounds or slight asymmetries in facial features.

- Pixel-Level Artifacts: These are unique patterns left behind by the generative process itself—invisible to us, but clear as day to a trained AI.

For a more detailed look at these visual giveaways, check out our guide on how to spot a deepfake.

The AI Image Detector cuts through the noise and provides a clear, actionable verdict, helping you quickly determine whether the visual content in front of you is authentic.

The interface is built for simplicity—just drag and drop an image for an immediate analysis. The tool then returns a straightforward verdict, classifying the image on a spectrum from "Likely Human" to "Likely AI-Generated," completely removing the guesswork.

Any truly effective fake news detector for images has to be fast, clear, and private. The AI Image Detector was designed around these core principles. It delivers results in seconds, gives you a simple confidence score, and—most importantly—does not store any images you upload, ensuring your verification process is both secure and private. This makes it an indispensable tool for journalists on a deadline, content moderators protecting their communities, and anyone committed to stopping the spread of visual misinformation.

Building a Practical Information Verification Workflow

Relying on your gut or a single tool to call out misinformation is a recipe for disaster. Think of it like trying to build a house with just a hammer—you're going to miss some critical steps. A truly solid verification workflow is more like a structured investigation, where you layer different techniques to build a strong, defensible case for whether a piece of content is authentic or not.

For journalists, moderators, and fact-checkers who have to make accurate calls under pressure, this isn't just theory. It's about turning abstract ideas into a concrete, repeatable action plan. Let’s walk through a practical, four-step workflow you can use to methodically pick apart any claim, story, or piece of media that crosses your desk.

Step 1: Scrutinize the Source

Before you even touch the content, look at where it came from. The vast majority of misinformation is peddled by sources pretending to be something they're not. This is like running a background check on the messenger before you even start to listen to their message.

Start with the basics. Look at the website's domain. Is it legitimate, or is it a lookalike of a well-known site (like "cbs-news.com.co", which adds a hyphen and an extra ".co" to a familiar address)? Dig around for an "About Us" page and real contact information. A lack of transparency is a huge red flag. While you're at it, check the author's credentials. Do they have a track record of credible work, or did they pop up out of nowhere?
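The domain check lends itself to a quick automated first pass. The sketch below compares a URL's hostname against a short list of known domains using Python's standard `difflib` module. The trusted list, the 0.8 similarity threshold, and the `classify_domain` function are all assumptions made for illustration.

```python
from difflib import SequenceMatcher
from urllib.parse import urlparse

# A tiny illustrative list; a real workflow would maintain a much larger one.
TRUSTED_DOMAINS = {"cbsnews.com", "bbc.co.uk", "reuters.com"}


def classify_domain(url: str, threshold: float = 0.8) -> str:
    """Label a URL's host as trusted, a lookalike of a trusted domain, or unknown."""
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    if host in TRUSTED_DOMAINS:
        return "trusted"
    # Sorted so the result does not depend on set iteration order.
    for trusted in sorted(TRUSTED_DOMAINS):
        if SequenceMatcher(None, host, trusted).ratio() >= threshold:
            return f"lookalike of {trusted}"
    return "unknown"


print(classify_domain("https://cbs-news.com.co/story"))
print(classify_domain("https://www.reuters.com/world"))
```

An "unknown" result is not a verdict. It just means the source needs the manual background check described above.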

Step 2: Corroborate the Core Claims

Here's the golden rule of verification: never, ever trust a single source. Your next move is to cross-reference the story's main claims with multiple independent, reputable outlets. If a truly shocking story is only being reported by one obscure blog, your alarm bells should be ringing.

Search for the same story from established news organizations known for their editorial standards. Real news, especially major events, gets picked up by lots of different outlets. If you can’t find anyone else reporting on it, treat the claim as unverified; for a supposedly major event, that silence is a strong sign the story is unsubstantiated or false. This simple act of getting a second and third opinion is one of the most powerful things you can do.

Step 3: Analyze the Textual Content

Okay, now it’s time to look at the words themselves. Misinformation often relies on specific linguistic tricks to bypass your rational brain and go straight for your emotions. You need to put on your critical thinking hat and look for the tell-tale signs.

Is the headline overly emotional or designed to make you angry? Is the language inflammatory, using absolute statements that frame opinions as unshakeable facts?

A key tactic in propaganda is to trigger an emotional response before a logical one. Language designed to provoke outrage or fear short-circuits critical thinking, making readers more likely to share without questioning the content's validity.

Pay attention to what isn't there, too. Does the article cite any sources? Are quotes attributed to real, verifiable people? An absence of evidence and a heavy reliance on vague phrases like "experts say" or "a source revealed" should make you immediately suspicious.

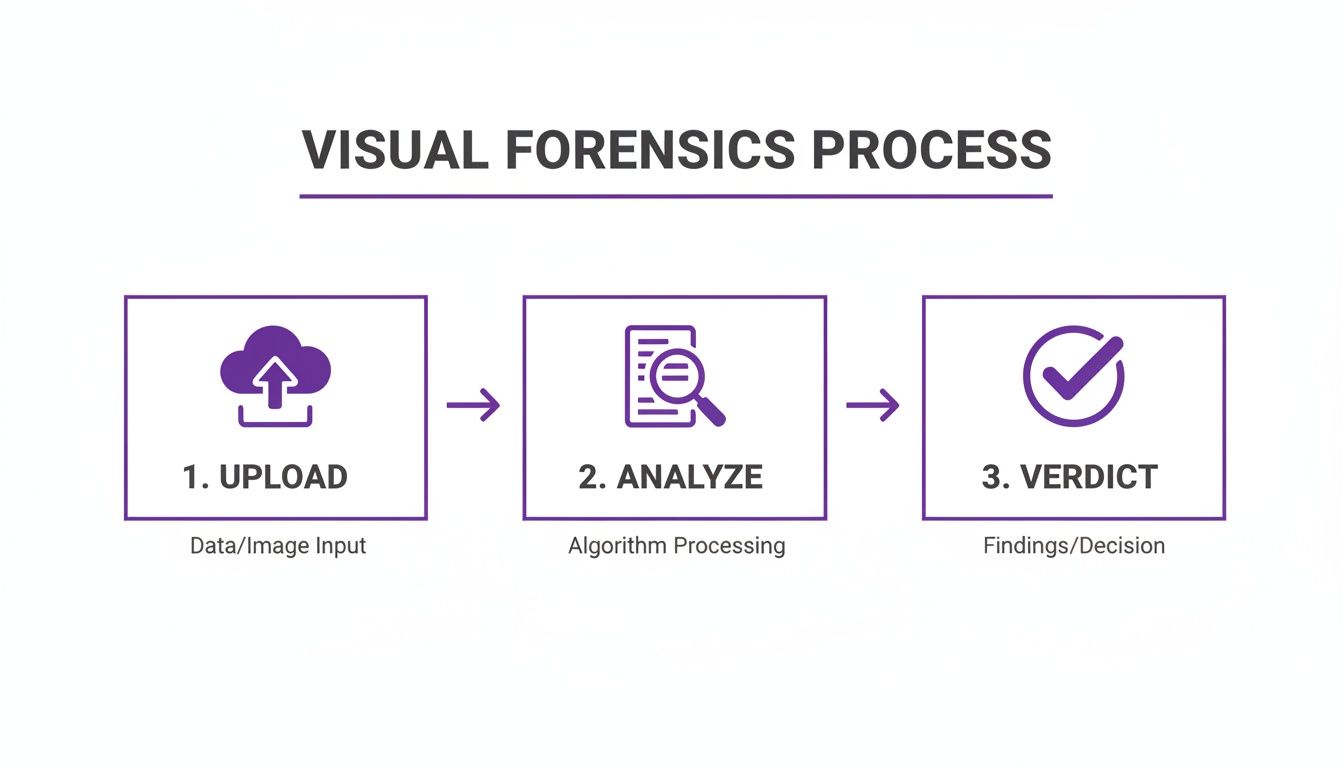

Step 4: Conduct Visual Forensics

Finally, if there’s an image or video involved, a dedicated forensic analysis is non-negotiable. Visuals are incredibly persuasive, and confirming their authenticity is the last critical piece of the puzzle. This is where a specialized fake news detector built for images becomes essential.

The process is often as simple as it looks here: upload, analyze, and get a verdict. This gives you a quick, actionable insight into an image's origins.

Tools like AI Image Detector are specifically designed to spot the subtle artifacts, pixel inconsistencies, and digital fingerprints that AI generation or digital manipulation leaves behind. To get a better feel for what these tools are looking for, our guide explains in detail how to detect AI in images and videos: https://www.aiimagedetector.com/blog/how-to-detect-ai

Ultimately, combining human expertise with powerful technology is the most effective strategy. While human fact-checkers bring nuance and critical context, AI tools offer speed and scale that would be impossible to achieve manually.

Manual vs Automated Detection Methods

| Aspect | Manual Verification (Human Fact-Checker) | Automated Detector (AI Tool) |
|---|---|---|
| Speed | Slow, meticulous, and time-consuming. Can take hours for one deep investigation. | Extremely fast, providing analysis in seconds. Ideal for high-volume content. |
| Scale | Limited. Impossible for a human to review thousands of pieces of content daily. | Highly scalable. Can process massive datasets and real-time feeds with ease. |
| Nuance | Excellent. Understands sarcasm, satire, cultural context, and complex narratives. | Limited. Can misinterpret context or satire, leading to false positives/negatives. |
| Consistency | Can be subjective and prone to individual bias or fatigue. | Highly consistent. Applies the same algorithmic criteria to every piece of content. |
| Cost | High. Requires skilled professionals, leading to significant labor costs. | Lower operational cost once deployed, reducing manual labor expenses. |
| Adaptability | Can adapt to new misinformation tactics based on intuition and experience. | Requires retraining with new data to keep up with evolving adversarial techniques. |

Integrating AI-powered verification tools can dramatically boost a team's efficiency and accuracy. By pulling together all four steps—source scrutiny, corroboration, textual analysis, and visual forensics—you create a powerful, comprehensive verification strategy that's tough to beat.
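One way to picture the four steps working together is as a checklist that collects open concerns for a human reviewer. The sketch below is only an illustration: the `Evidence` fields, the thresholds, and the wording of each concern are assumptions, not a description of how any specific tool combines its signals.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Evidence:
    source_is_known: bool            # Step 1: source scrutiny
    independent_reports: int         # Step 2: corroboration
    text_red_flags: int              # Step 3: textual analysis
    image_ai_score: Optional[float]  # Step 4: visual forensics (None if no image)


def open_concerns(evidence: Evidence) -> List[str]:
    """List the checks that failed, using hypothetical thresholds."""
    concerns = []
    if not evidence.source_is_known:
        concerns.append("source could not be verified")
    if evidence.independent_reports < 2:
        concerns.append("fewer than two independent reports")
    if evidence.text_red_flags >= 3:
        concerns.append("multiple language red flags")
    if evidence.image_ai_score is not None and evidence.image_ai_score >= 0.5:
        concerns.append("image may be AI-generated")
    return concerns


example = Evidence(source_is_known=False, independent_reports=0,
                   text_red_flags=4, image_ai_score=0.92)
concerns = open_concerns(example)
print(concerns)
```

An empty list does not certify a story as true. It only means none of the automated checks raised a flag, and the final call still belongs to a person.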

The Limitations of Detection Technology

While a powerful fake news detector gives us a serious edge in the fight against misinformation, we have to be realistic. These tools aren't magic bullets. Think of it as a constant cat-and-mouse game: as our detection algorithms get smarter, the tools used to create deceptive content evolve right alongside them.

This ongoing arms race means no detector will ever be 100% accurate. The people creating this stuff are always finding new ways to slip past the guards, like adding subtle digital "noise" to an image or tweaking text to mimic authentic writing patterns. A method that works perfectly today might be obsolete tomorrow.

That’s why these tools are best seen as powerful assistants, not infallible judges. They bring crucial evidence to the table and slash analysis time, but the final call still often needs a human eye.

The Challenge of Adversarial Attacks

One of the biggest headaches for any AI system is something called an adversarial attack. These are tiny, often invisible changes made to content with the specific goal of fooling a machine. For instance, someone could alter just a few pixels in an AI-generated image—a change no human would ever notice—that causes a detector to confidently label it as authentic.

These attacks essentially turn the AI's own logic against itself. Staying ahead requires developers to constantly update their models and train them on new adversarial examples, making it a resource-intensive and never-ending battle.
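A toy example shows why such small changes can matter. The "detector" below is just a weighted sum over four pixel values, which is far simpler than any real model, and the weights, bias, and pixel values are invented for the demonstration. The nudge moves each pixel slightly against the sign of its weight, which is the basic idea behind gradient-sign attacks.

```python
def detector_score(pixels, weights, bias):
    """Toy linear detector: a positive score means 'looks AI-generated'."""
    return sum(p * w for p, w in zip(pixels, weights)) + bias


def adversarial_nudge(pixels, weights, epsilon):
    """Shift each pixel by at most epsilon in the direction that lowers the score."""
    def sign(x):
        return (x > 0) - (x < 0)
    # Clamp to the valid 0.0 to 1.0 brightness range after the shift.
    return [min(1.0, max(0.0, p - epsilon * sign(w))) for p, w in zip(pixels, weights)]


# Invented numbers for illustration: pixel brightness on a 0.0 to 1.0 scale.
weights = [0.9, -0.7, 0.8, -0.6]
bias = -0.2
pixels = [0.52, 0.48, 0.51, 0.49]

nudged = adversarial_nudge(pixels, weights, epsilon=0.02)
print(detector_score(pixels, weights, bias) > 0)  # True: flagged as AI-generated
print(detector_score(nudged, weights, bias) > 0)  # False: the same image now passes
```

A shift of 0.02 is roughly five brightness levels out of 255, far too small to notice by eye. Real detectors are much more complex, but the same principle is what makes adversarial examples possible.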

When Nuance Is Lost in Translation

Context is king, and this is where automated systems can really stumble. A fake news detector might struggle to tell the difference between actual misinformation and content that’s more complex.

- Satire and Parody: An AI could easily flag an article from a satirical site like The Onion as "fake news," completely missing the comedic intent.

- Opinion vs. Fact: A detector might see emotionally charged language in an opinion piece and flag it, even if the author is just making a passionate argument, not presenting false facts.

- Cultural Context: Things like sarcasm and irony don't translate well for algorithms. What’s obviously a joke in one culture can be misinterpreted by an AI trained on a different dataset.

This is where human critical thinking becomes irreplaceable. A person understands the context of a joke or the purpose of satire in a way an AI, for now, simply can't.

Another major hurdle is the "black box" problem. If a detector flags your content but can't explain why, it's hard to trust the verdict or even appeal it.

This push for transparency is one of the most important frontiers in AI development right now, and it’s why understanding what explainable AI is has become so critical. Without it, we risk building automated systems that operate without any real accountability.

At the end of the day, a fake news detector is a vital part of a modern verification workflow, but it’s just one part. These tools give us speed and scale, but they have to be paired with human judgment and good old-fashioned critical thinking to work. Knowing their limits is the first step to using them wisely.

How AI Image Detector Strengthens Your Workflow

Knowing the theory behind misinformation is one thing, but actually putting that knowledge to work is what really counts. This is where a specialized tool for images, like AI Image Detector, can make a world of difference. It’s designed to get the right information to the right people, exactly when they need it, turning a slow manual chore into a fast, effective part of your workflow.

For a journalist on a tight deadline, every second matters. When a dramatic image surfaces with a breaking story, there's simply no time for a drawn-out forensic investigation. A tool that delivers a clear answer in under 10 seconds can mean the difference between publishing a credible scoop and accidentally spreading a lie.

It's also incredibly useful for educators teaching digital literacy. Instead of just talking about deepfakes, students can upload images themselves. They can see the analysis in real-time and learn firsthand what digital artifacts actually look like, making abstract concepts feel tangible and easier to remember.

Built for Professional and Educational Needs

Different jobs come with different pressures, so a one-size-fits-all approach to verification just doesn't cut it. AI Image Detector was built with this in mind, focusing on speed, clarity, and the ability to handle volume.

For Platform Moderators: Protecting an online community is a high-stakes job. An API lets moderators integrate automated image analysis directly into their systems, flagging potentially manipulated content at scale before it can go viral and cause real harm.

For Legal and Compliance Teams: When you're authenticating visual evidence, you need more than a gut feeling. A clear confidence score and detailed reasoning help build a much stronger case when verifying the true origin of a digital photo or document.

For Cautious Consumers: For the rest of us, it’s a simple first line of defense. It gives you a quick and easy way to check suspicious images you come across in emails or on social media, helping you avoid scams and misleading posts.

The core idea is to provide an immediate, understandable, and private analysis. The tool never stores uploaded images, which guarantees that sensitive investigations or personal photos remain completely secure—a non-negotiable feature for professionals.

The process itself is straightforward, built around a simple drag-and-drop interface.

The interface is designed for pure efficiency. It returns a clear verdict, classifying an image on a spectrum from "Likely Human" to "Likely AI-Generated," which removes a lot of the guesswork from the process.

Features That Empower Your Workflow

A truly useful fake news detector does more than just give a "real or fake" verdict. It should offer insights that help you make a final, informed judgment. The goal isn't to replace your critical thinking, but to give it a serious technological boost.

AI Image Detector supports multiple file types, including JPEG, PNG, and WebP, so it works with almost any image you’ll find online. Crucially, every verdict comes with detailed reasoning that explains why the tool came to its conclusion, pointing out the specific digital artifacts or patterns it found. That kind of transparency is vital for building trust and even helps you sharpen your own detection skills over time.

By bringing all these features together in an easy-to-use platform, AI Image Detector is more than just another utility. It's an essential part of any modern verification toolkit, giving you the confidence to navigate an increasingly complex visual world.

Frequently Asked Questions

Got questions? You're not alone. When you're trying to figure out what's real online, it's natural to wonder about the tools designed to help. Here are some straightforward answers to the things people ask most.

Can a Fake News Detector Be 100 Percent Accurate?

The short answer is no. No tool is infallible. It's best to think of a fake news detector as an expert partner, not a magic eight ball. It’s incredibly good at spotting the subtle red flags and technical fingerprints that give away fakes, but it's not perfect.

Some extremely well-made fakes can fool the tech, and every so often, a real piece of content gets flagged by mistake (what we call a false positive). That’s why these tools are just one part of the puzzle. The smartest approach is to blend the raw power of AI analysis with good old-fashioned human critical thinking—like checking sources and seeing what other reputable outlets are saying.
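A little arithmetic shows why false positives deserve attention. The counts below are made up purely for illustration (they are not measurements of any real detector), and the function name is hypothetical.

```python
def detection_rates(true_pos: int, false_pos: int, true_neg: int, false_neg: int) -> dict:
    """Basic error rates from a confusion matrix of detector verdicts."""
    def ratio(numerator: int, denominator: int) -> float:
        return numerator / denominator if denominator else 0.0

    return {
        # Share of authentic items that were wrongly flagged.
        "false_positive_rate": ratio(false_pos, false_pos + true_neg),
        # Share of fakes that slipped through.
        "false_negative_rate": ratio(false_neg, false_neg + true_pos),
        # Share of flagged items that really were fake.
        "precision": ratio(true_pos, true_pos + false_pos),
    }


# Hypothetical batch: 100 fakes and 1,000 authentic items.
rates = detection_rates(true_pos=90, false_pos=50, true_neg=950, false_neg=10)
print(rates)
```

In this invented batch, only 5% of authentic items are flagged by mistake, yet more than a third of all flags (50 out of 140) land on real content, simply because authentic items far outnumber fakes. That is one reason a flag should prompt a closer look, not an automatic verdict.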

How Do I Choose the Right Detector for My Needs?

The right tool really boils down to what you’re trying to check. If you’re dealing with images, you need a detector built for that job, like AI Image Detector. If you're analyzing a news article or a block of text, you'll want something that specializes in Natural Language Processing (NLP).

A key piece of advice: Look for tools that explain their reasoning. A simple "real" or "fake" verdict doesn't teach you much. The best detectors show you why they came to a conclusion, which helps you build your own expertise and make the final call.

Here’s what to look for:

- Focus: Is it built for images, text, video, or all of the above?

- Speed: How fast can you get an answer when time is critical?

- Privacy: Does the tool keep a copy of what you upload? (A big deal for sensitive content.)

- Ease of Use: Is it simple to understand and use right away?

Are Free Fake News Detectors Reliable?

Absolutely. Many free tools are surprisingly powerful and can be very reliable for everyday use. They often run on the same core technology as paid versions but might have limits, like how many times you can use them per day. For a student, a curious individual, or even a professional doing a quick spot-check, a free tool is often more than enough.

For example, the main AI Image Detector is free and gives you a solid, in-depth analysis of any single image. The paid plans are really for organizations that need to check images constantly or want to plug the detection technology directly into their own systems using an API.

Ready to see for yourself how AI can cut through the noise of visual misinformation? Give AI Image Detector a try. You'll get a fast, private, and clear analysis of any image. Get your free image analysis now.