How to Detect AI Content Like a Pro

Spotting AI-generated content really comes down to a two-pronged approach: a sharp human eye and the right automated tools. Think of it as old-school detective work combined with modern forensics. You first train yourself to catch the subtle, often weird, flaws that AI models leave behind, then you bring in specialized software to confirm your suspicions. Combining these two methods gives you the most reliable shot at figuring out what’s real and what’s not.

The Rise of AI and Why Detection Matters

From hyper-realistic images to articles that read like they were written by a seasoned pro, AI-generated content is everywhere. This tech is a game-changer for sure, but it also creates new avenues for misinformation, academic shortcuts, and seriously convincing scams. That’s why learning to spot AI isn't just for tech experts anymore—it’s a fundamental part of being digitally literate today.

As the models get smarter, the obvious giveaways are fading. Those classic AI flubs, like people with six fingers or text that made no sense, are becoming a thing of the past. The better you understand how people prompt AI tools to get high-quality output, the better you’ll get at recognizing that output’s fingerprints. This constant evolution means we need to get a lot more sophisticated in how we verify content.

Two Core Strategies for Detection

Any solid detection strategy needs to mix human intuition with technological backup. Relying on just one or the other is a recipe for mistakes. But when you use them together, you create a verification workflow that’s hard to beat.

To give you a clearer picture, here’s a quick breakdown of the two main approaches.

AI Detection Methods at a Glance

| Detection Method | Core Principle | Best For | Key Limitation |
|---|---|---|---|
| Manual Inspection | Critical human observation of visual, contextual, and logical inconsistencies. | Spotting subtle, novel flaws that algorithms haven't been trained on yet. | Can be time-consuming, subjective, and requires a trained eye. |
| Automated Tools | Algorithmic analysis of data for statistical patterns common in AI outputs. | Quickly scanning large volumes of content and providing a data-driven probability score. | Can produce false positives/negatives and may struggle with the newest AI models. |

These two methods are designed to complement each other, covering the gaps that each one has on its own.

The Human Element vs. The Machine

Your first line of defense is always your own critical eye. Manual inspection is about looking past the surface and hunting for those tiny details that just feel off—things an algorithm might miss entirely. This could be anything from illogical shadows and unnatural skin textures in an image to prose that’s grammatically perfect but completely devoid of personality.

Then you have automated tools. These platforms are your backup, designed to analyze content for the mathematical signatures of AI. They’re fantastic for getting a quick, data-backed opinion, especially when you’re dealing with a lot of content. They’ll give you a probability score that can help support what your eyes are telling you.

This dual approach has become a necessity, especially in education. During the 2023-24 school year, a whopping 68% of secondary teachers reported using AI detectors to uphold academic integrity. One platform even reviewed 200 million student papers and found that 11% contained at least 20% AI-generated text.

The goal isn't to get a perfect yes-or-no answer from a single tool. It's about building a case. You gather evidence from both your own inspection and automated analysis to make a confident, well-informed judgment.

In the sections that follow, we’ll dive deep into the specific techniques for both manual and automated detection, covering images, text, and video. You'll get the practical steps you need to navigate this new reality with confidence.

Training Your Eye to Spot AI Images

Before you even think about running an image through a detector, remember that your own eyes are your best first line of defense. It's a skill, and like any skill, it sharpens with practice. You start to develop a gut feeling for what just looks off.

AI image generators have gotten scarily good, fast. We're past the days of laughing at six-fingered hands in every image. The tells are now much more subtle, forcing us to look closer and more methodically. It helps to think like an art detective, searching for the tiny details that betray the artist.

The Anatomy of AI Imperfection

Certain things about the real world, especially biological features, consistently trip up AI models. This is where the mask of reality often starts to slip. When an image feels suspicious, these are the first places to zoom in on.

The classic giveaway has always been hands and fingers. While better than before, AI still messes them up. Look for an incorrect number of fingers, joints that bend in unnatural ways, or a waxy texture where fingers seem to melt into each other. Teeth are another weak spot—they might look too perfect, too numerous, or just strangely shaped.

From there, move on to eyes and ears. In portraits, are the pupils perfectly round and lit identically? Do the reflections, or catchlights, in each eye actually match the light sources you can see in the scene? AI often fumbles the complex folds of cartilage in ears, leaving behind shapes that look more like melted plastic than human anatomy.

Scrutinizing the Scene and Setting

After you’ve examined the person or subject, pull your focus back to the bigger picture. The background is often where an AI's lack of real-world context becomes painfully obvious. It might render a flawless-looking person but stick them in a setting that defies logic.

Keep an eye out for these specific environmental red flags:

- Gibberish Text: Any words in the background—on a street sign, a t-shirt, or a book cover—are a huge tell. AI often renders text as a jumble of letter-like shapes that almost form words but don't.

- Warped Backgrounds: Pay close attention to straight lines. Things like door frames, tile grout, or window panes will often have a subtle (or sometimes very obvious) wobble or distortion around the main subject.

- Inconsistent Patterns: If someone is wearing a patterned shirt or standing in front of patterned wallpaper, see if it holds up. You'll often find the pattern is perfect in one spot but devolves into a messy, illogical blur in another.

An AI model doesn't actually understand what a brick wall is. It just knows how to create a texture that looks like bricks, which is why you’ll see bizarre artifacts where one brick seems to flow seamlessly into the next.

Analyzing Light and Texture

The last part of a good manual check involves the things that require a bit more of an artistic eye: light, shadow, and texture. An AI is a master mimic, but it doesn't have an intuitive grasp of physics. It doesn't truly understand how light behaves or how different surfaces feel.

First, check the lighting and shadows. Do the shadows actually make sense for the light source? You might see a person lit brightly from the right who is somehow casting a shadow directly behind them. That's physically impossible and a dead giveaway.

Then, zoom right in on the textures. AI-generated skin often has a characteristic waxy or overly smooth, "airbrushed" quality. It lacks the tiny pores, blemishes, and fine hairs that make skin look real. The same goes for other surfaces. Hair can look like a solid helmet, and the weave on a piece of fabric might disappear into a blurry mess.

For a more technical approach, you can even check the metadata of a photo, which can sometimes hold clues about the image's source.
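Here is a concrete metadata check. Some image generators and front ends write their settings into the file itself. The sketch below is a minimal, standard-library-only Python reader for PNG `tEXt` chunks. The keyword list (`parameters`, `prompt`, `workflow`) is an assumption based on what some Stable Diffusion front ends are known to write. It is not an official or complete list, and the function names are hypothetical.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

# Assumption: keywords some Stable Diffusion front ends are known to write.
# Treat a match as a lead to follow up, not as proof.
GENERATOR_KEYWORDS = {"parameters", "prompt", "workflow"}


def png_text_chunks(data: bytes) -> dict:
    """Return {keyword: text} for every tEXt chunk in a PNG file's bytes."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    chunks = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Chunk layout: 4-byte big-endian length, 4-byte type, payload, 4-byte CRC.
        # The CRC is skipped here; this is a quick inspection, not a validator.
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        payload = data[pos + 8:pos + 8 + length]
        if chunk_type == b"tEXt":
            # tEXt payload: Latin-1 keyword, a NUL separator, then Latin-1 text.
            keyword, _, text = payload.partition(b"\x00")
            chunks[keyword.decode("latin-1")] = text.decode("latin-1")
        elif chunk_type == b"IEND":
            break
        pos += 12 + length
    return chunks


def generator_hints(data: bytes) -> dict:
    """Keep only the text chunks whose keyword is in GENERATOR_KEYWORDS."""
    return {k: v for k, v in png_text_chunks(data).items() if k.lower() in GENERATOR_KEYWORDS}
```

To try it on a file, pass the raw bytes, for example `generator_hints(open("suspect.png", "rb").read())`. An empty result proves nothing, because screenshots, re-saving, and many social platforms strip this metadata.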

By training yourself to run through this mental checklist—anatomy, background, and physics—you build a powerful framework for spotting AI fakes. This hands-on process is the essential first step before you ever turn to an automated tool for backup.

Spotting AI Beyond the Image: Text and Video

The skills you’ve honed spotting AI fakery in images will serve you well when you turn your attention to text and video. The specific clues change, of course, but the core principle is the same: you’re looking for the subtle cracks in the facade, the little things that give away the non-human creator.

AI’s influence now runs deep through the articles we read and the videos we watch. For text, the signs are often a feeling of hollowness. The grammar might be perfect, the sentences well-structured, but the piece lacks a soul. It can summarize facts with incredible efficiency but falls flat when it comes to having a real voice or an original thought.

Is a Robot Writing This? How to Tell

When you're trying to figure out if you’re reading something written by an AI, you’re really hunting for the absence of the human element. AI models are trained on an astronomical amount of existing text, which is why their output often sounds so polished yet so generic.

Keep an eye out for these red flags:

- Repetitive sentence patterns. AI has a bad habit of falling into rhythmic ruts. You might see several sentences in a row starting with the same phrase or using the same structure, which makes the writing feel clunky and predictable.

- Vague, unsupported claims. Does the article make sweeping statements without offering specific examples, data, or personal stories to back them up? AI has no lived experience, so its writing can feel detached and impersonal.

- Perfectly empty prose. The text might be completely free of typos and grammatical mistakes, yet it has no personality. There’s no humor, no unique voice, no sense that a real person is behind the keyboard. It often reads like a very well-written but sterile encyclopedia entry.

- AI "Hallucinations." This is a huge one. AI will sometimes just make things up—facts, sources, quotes, statistics—and state them with complete confidence. A quick fact-check on any dubious-sounding information can be the fastest way to unmask an AI author.
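The first red flag, repetitive sentence openings, is easy to check roughly by machine. Here is a minimal Python sketch that assumes a naive sentence split on `.`, `!`, and `?`. The function name and the default thresholds (`n_words=2`, `min_count=3`) are illustrative choices, not a standard. Human writers repeat themselves too, so treat any hit as a reason to reread, not a verdict.

```python
import re
from collections import Counter


def repeated_openers(text: str, n_words: int = 2, min_count: int = 3) -> dict:
    """Return sentence openings (first n_words words) that recur at least min_count times."""
    # Naive split: end punctuation followed by whitespace. Good enough for a rough check.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    counts = Counter()
    for sentence in sentences:
        # Lowercase words only, so "It is" and "it is," count as the same opener.
        words = re.findall(r"[a-z']+", sentence.lower())
        if len(words) >= n_words:
            counts[" ".join(words[:n_words])] += 1
    return {opener: n for opener, n in counts.items() if n >= min_count}
```

For example, `repeated_openers("It is fast. It is cheap. It is easy. We like it.")` returns `{"it is": 3}`.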

It’s also helpful to have a sense of the capabilities and limitations of AI models like ChatGPT. Understanding how these models work under the hood gives you a much better intuition for the kinds of patterns and mistakes they’re likely to make.

I’ve found the biggest giveaway is a perfect summary of a topic that adds zero new insight. AI is a master of aggregation, not true creation. If it reads like an expertly blended smoothie of the top five Google search results, your "AI-dar" should be buzzing.

Catching Deepfakes in the Wild

Moving from text to video ramps up the difficulty, especially with deepfake technology getting scarily good. Spotting an AI-generated video means training your eye to catch the subtle biological and physical tells that AI still struggles to replicate perfectly.

These fakes are designed to fool a casual glance, but they often fall apart under closer inspection. The human face is a symphony of complex, coordinated movements, and even tiny errors can shatter the illusion. For a much more detailed breakdown, our guide on how to spot a deepfake has you covered.

Common Deepfake Giveaways

When you get a weird feeling about a video, slow it down. Go frame-by-frame if you have to and look for these tell-tale signs:

| Visual Anomaly | What to Look For |
|---|---|
| Unnatural Eye Movement | Is the person blinking too much, not enough, or not at all? Sometimes their gaze seems locked and disconnected from their head movements. |
| Awkward Lip-Syncing | The audio just doesn't quite match the lip movements. This is one of the hardest things for AI to nail down consistently. |
| Digital Artifacts | Look closely at the edges of the person's face, where it meets their hair or neck. You might spot weird blurring, flickering, or warping. |
| Emotionally Flat | The person might be smiling, but their eyes look lifeless. The face lacks the tiny muscle twitches that convey genuine emotion. |
| Mismatched Skin | The skin tone or texture on the face might be just slightly different from the neck or hands, a classic sign that the face was digitally pasted on. |
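You can make the blink check in the first row a little more systematic. This sketch assumes you already have a per-frame eye aspect ratio (EAR), a common measure of how open the eye is, from a face-landmark tool of your choice (not shown). The 0.2 closed-eye threshold and the two-frame minimum are assumed starting values that you would need to tune. The function names are hypothetical.

```python
def count_blinks(ear_values, threshold=0.2, min_closed_frames=2):
    """Count blinks in a per-frame eye-aspect-ratio series.

    A blink is a run of at least `min_closed_frames` frames below `threshold`;
    shorter dips are treated as landmark noise.
    """
    blinks = 0
    closed_run = 0
    for ear in ear_values:
        if ear < threshold:
            closed_run += 1
            continue
        if closed_run >= min_closed_frames:
            blinks += 1
        closed_run = 0
    if closed_run >= min_closed_frames:  # the clip ended mid-blink
        blinks += 1
    return blinks


def blinks_per_minute(ear_values, fps, **kwargs):
    """Blink rate over the whole clip; kwargs are passed to count_blinks."""
    minutes = len(ear_values) / fps / 60
    return count_blinks(ear_values, **kwargs) / minutes if minutes else 0.0
```

Blink rates vary a lot between people and situations. Compare the result against footage of the same person that you know is genuine, rather than against a fixed norm.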

By methodically checking for these clues in text and video, you’re building a powerful manual detection toolkit. This hands-on analysis is your best first line of defense, giving you the foundation you need to know when something is real and when it's just a clever algorithm.

Choosing and Using AI Detection Tools

After you've done your own detective work, turning to an automated tool can provide a vital second opinion. Think of these platforms as a digital forensics kit, built to scan content for the statistical fingerprints that AI models often leave behind. But navigating this space can be tricky—not all detectors are created equal, and their results always require careful interpretation.

An AI detection tool isn’t a judge delivering a final verdict. It’s more like an expert witness offering a data-driven probability. A score of 95% AI-generated isn’t definitive proof, but it’s a strong indicator that definitely warrants a closer look. Your job is to weigh this evidence alongside your manual inspection to build a complete picture.

Selecting the Right Tool for the Job

The first step is always matching the tool to the type of content you're analyzing. A great text detector is useless on an image, and vice versa. The market is full of options, but they generally fall into a few key categories.

- Text Detectors: These are perfect for scanning articles, essays, or social media posts. They hunt for patterns in word choice and sentence complexity, often using what experts call "perplexity" (how predictable each word is to a language model) and "burstiness" (how much sentence length and structure vary across the piece).

- Image Detectors: Designed to analyze pixels, these platforms look for artifacts like inconsistent lighting, unnatural textures, and the weird geometric oddities common in AI-generated scenes.

- Video and Deepfake Detectors: These are far more specialized. They focus on subtle inconsistencies in movement, lip-syncing that's just a little off, and unnatural emotional expressions. They’re critical for verifying video statements or spotting manipulated media.
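Commercial detectors don't publish their exact formulas, but you can approximate burstiness. The sketch below uses one simple proxy, assumed for illustration: the coefficient of variation of sentence lengths (standard deviation divided by mean). Perplexity is left out because it needs a trained language model to score each word.

```python
import re
from statistics import mean, pstdev


def sentence_lengths(text: str) -> list:
    """Word count per sentence, using a naive split on end punctuation."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(re.findall(r"\b\w+\b", s)) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length; 0.0 means every sentence is the same length."""
    lengths = [n for n in sentence_lengths(text) if n > 0]
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)
```

Low values mean uniformly sized sentences. That is a weak signal on its own, which is why real detectors combine many features.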

If you're just getting started, we put together a list of excellent options in our guide to AI detection tools that are free and available online. It's a great place to find a reliable tool without any commitment.

To help you get a sense of the landscape, here's a quick comparison of some popular tools out there.

Comparing Popular AI Detection Tools

Choosing the right platform often comes down to what you're trying to verify. This table breaks down a few leading options to show how their focus and features differ.

| Tool Name | Primary Use (Text/Image/Video) | Key Feature | Best For |
|---|---|---|---|
| Winston AI | Text | High accuracy with plagiarism and readability checks. | Educators and publishers needing to verify originality in written content. |
| Sensity AI | Video, Image | Real-time deepfake detection and visual threat intelligence. | Organizations focused on preventing fraud and online impersonation. |
| AI or Not | Image | Simple, fast, and free drag-and-drop image analysis. | Individuals and small teams needing quick spot-checks on images. |
| Originality.ai | Text | Combines AI detection with a full plagiarism checker and fact-checking. | SEO professionals and content marketers concerned with content integrity. |
| Hive Moderation | Image, Video, Text | Comprehensive content moderation suite with robust API integration. | Large platforms managing high volumes of user-generated content. |

As you can see, the "best" tool really depends on your specific needs, from quick image checks to enterprise-level content moderation.

How to Interpret Confidence Scores

Once you get a result, the real work begins. You'll likely see a percentage score or a label like "Likely Human" or "Highly Likely AI." Understanding what this means is essential to avoid jumping to the wrong conclusions.

A high AI score, say 80% or more, is a big red flag. It suggests the content's patterns closely match what the detector's algorithm expects from a machine. On the flip side, a very low score (under 20%) is a good sign the content is probably human, showing the natural variation and quirks we expect from human creators.

Anything between those two extremes calls for caution, and a score between 40% and 70% is genuinely ambiguous. It could mean a few different things:

- The content is a blend of human and AI work.

- It's human-written but was heavily edited with an AI grammar tool.

- The AI that generated it is very advanced, or the detector is simply less certain.
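If you log many results, it helps to write these bands down as a small helper. This is a sketch that assumes a 0 to 100 score. The text doesn't name the 20 to 40% and 70 to 80% ranges, so labeling them "inconclusive" is our assumption. The function name is hypothetical.

```python
def interpret_score(ai_score: float) -> str:
    """Map a 0-100 AI score to the bands discussed above."""
    if not 0 <= ai_score <= 100:
        raise ValueError("expected a score between 0 and 100")
    if ai_score >= 80:
        return "red flag: patterns closely match machine output"
    if ai_score < 20:
        return "probably human"
    if 40 <= ai_score <= 70:
        return "gray area: blended, AI-edited, or an uncertain detector"
    # 20-40 and 70-80 are not named in the text; treated as inconclusive (assumption).
    return "inconclusive: lean on manual inspection"
```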

My rule of thumb? Never rely on a single score from a single tool. If a piece of content flags as high-AI, run it through at least one other detector. If the results are consistent, your confidence can be high. If they differ wildly, it’s a clear signal to trust your manual inspection above all else.
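The cross-verification rule of thumb fits in a few lines of Python. This is a minimal sketch that assumes every tool reports a 0 to 100 AI score. The 80-point "high" cut-off follows the text. The 30-point spread that counts as "differ wildly" is an assumed value you would tune. Detector names are placeholders.

```python
def cross_check(scores: dict, high: float = 80.0, max_spread: float = 30.0) -> str:
    """Compare the 0-100 AI scores that several detectors gave the same content."""
    if len(scores) < 2:
        return "need at least two detectors"
    values = list(scores.values())
    spread = max(values) - min(values)
    if spread > max_spread:
        # The tools disagree wildly, so the manual inspection should carry the decision.
        return "conflicting: trust your manual inspection"
    if min(values) >= high:
        return "consistent high-AI: confidence is high"
    return "consistent but not high: keep investigating"
```

For example, `cross_check({"tool_a": 92, "tool_b": 88})` returns `"consistent high-AI: confidence is high"`, while `{"tool_a": 92, "tool_b": 35}` comes back as conflicting.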

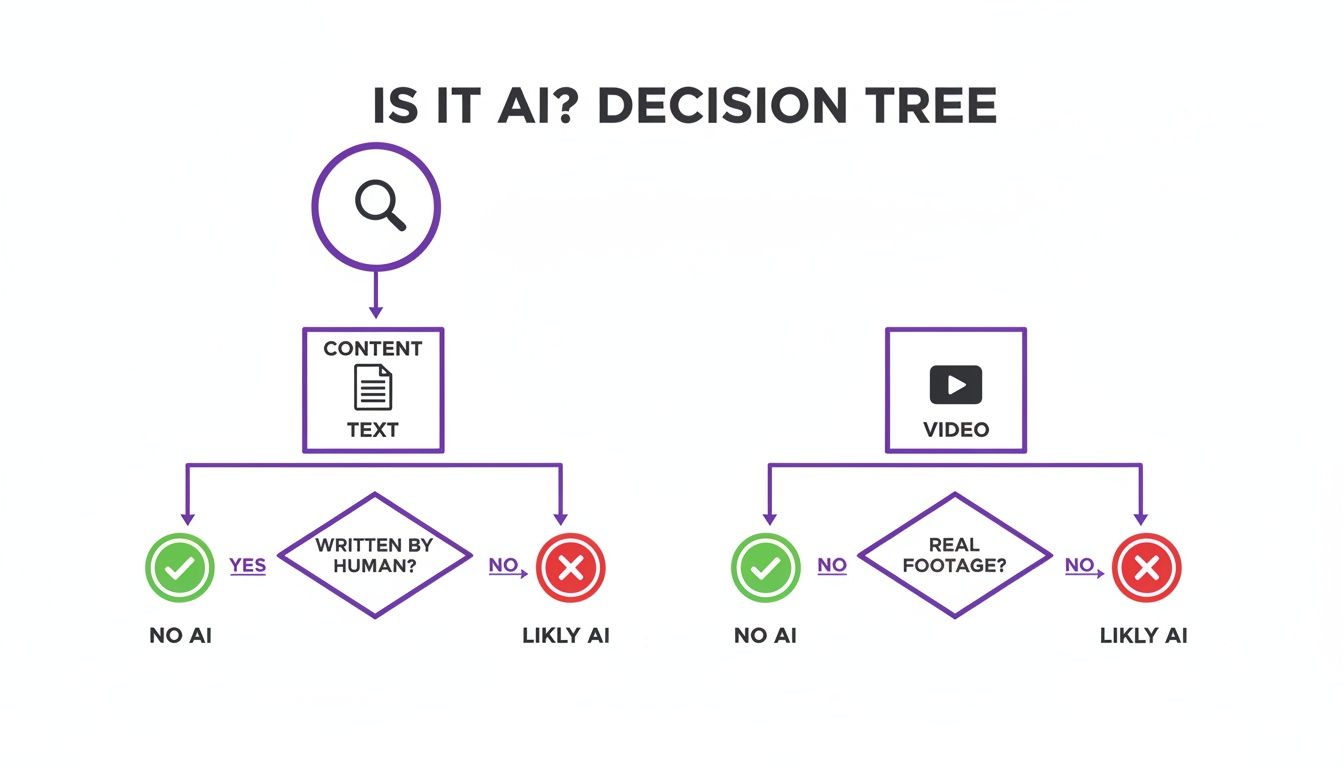

This process of cross-verification helps you avoid false positives and make a much more informed judgment. The flowchart below offers a simple visual guide for deciding what to do when you encounter suspicious content.

Ultimately, the chart shows that whether you start with text or video, a combination of manual checks and tool-based analysis will always lead to the most reliable conclusion.

Accuracy and the Arms Race

The tech behind AI detection is in a constant state of flux. It's a cat-and-mouse game; as detectors get better, so do the AI models they're trying to catch.

We've seen huge leaps lately. Vendors report that modern ensemble methods now reach around 96% accuracy while cutting false positives to under 3%. Some of the top platforms even claim 98.7% accuracy using advanced models, with detection times under 100 milliseconds.
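A little arithmetic makes headline accuracy figures easier to interpret. The sketch below computes what share of flagged items are actually AI-generated, also called the positive predictive value. The inputs are hypothetical: a 10% share of AI content, with the 96% and 3% figures above plugged in as the detection rate and false-positive rate. An overall "accuracy" number is not the same thing as a detection rate, so this is an illustration only.

```python
def share_of_flags_that_are_ai(prevalence: float, sensitivity: float,
                               false_positive_rate: float) -> float:
    """Positive predictive value: of everything flagged, what fraction is really AI?"""
    true_flags = prevalence * sensitivity                   # AI items correctly flagged
    false_flags = (1 - prevalence) * false_positive_rate    # human items wrongly flagged
    total = true_flags + false_flags
    return true_flags / total if total else 0.0


# Hypothetical inputs: 10% AI content, 96% detection rate, 3% false-positive rate.
ppv = share_of_flags_that_are_ai(0.10, 0.96, 0.03)
```

With those inputs, roughly 78% of flags are correct. That means a bit more than one flag in five lands on human work. A 3% false-positive rate also means around 300 wrongly flagged pieces for every 10,000 human-written ones.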

For businesses needing to verify content at scale, many of these tools offer an API (Application Programming Interface). This lets developers plug detection capabilities directly into their own websites or apps. It’s a powerful way to automatically screen things like user-submitted reviews or profile pictures, maintaining platform integrity without needing a human to check every single post.
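Here is an outline of what that kind of integration might look like. This Python sketch deliberately doesn't call any real vendor. `detect` is a hypothetical function you would wire to your provider's API, assumed to return a 0 to 100 AI score, and the 80-point review threshold is also an assumption.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable


@dataclass
class ScreeningResult:
    approved: list = field(default_factory=list)
    needs_review: list = field(default_factory=list)


def screen_submissions(
    items: Iterable[str],
    detect: Callable[[str], float],
    review_threshold: float = 80.0,
) -> ScreeningResult:
    """Route each submission by its AI score; nothing is rejected automatically."""
    result = ScreeningResult()
    for item in items:
        score = detect(item)  # hypothetical call to your vendor's detection API (0-100)
        if score >= review_threshold:
            result.needs_review.append(item)  # a person makes the final call
        else:
            result.approved.append(item)
    return result
```

The design choice worth copying is that flagged items go to a human review queue instead of being rejected automatically. That keeps false positives from turning into unfair penalties. In testing you can pass a stub such as `lambda item: 91.0 if item == "b" else 12.0` as `detect`.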


Navigating the Limits of AI Detection

As impressive as AI detection tools have become, you have to approach them with a healthy dose of realism. There's no magic bullet here. Blindly trusting a percentage score is the fastest way to make a serious mistake, and understanding the limitations of these tools isn't about dismissing them—it's about using them responsibly.

At its core, AI detection is a perpetual cat-and-mouse game. As soon as detectors get good at spotting the quirks of one AI model, a newer, more sophisticated one comes out with fewer of those tell-tale flaws. This constant evolution means a technique that worked wonders on a year-old model might be completely useless against the latest release.

Because of this, no tool can ever claim to be 100% accurate. Honestly, be skeptical of anyone who says otherwise. The most advanced AI-generated content can sometimes slip past a detector, and I’ve seen plenty of completely human work get flagged by mistake.

The Problem of False Positives

Perhaps the single biggest risk of relying only on automated tools is the false positive. This is when a detector incorrectly flags human-written text or a real photograph as being AI-generated. In a professional or academic setting, the fallout from that kind of error can be severe, leading to unfair accusations of cheating or dishonesty.

Think about it: a student pours weeks into an original essay, only to have it flagged by a tool their professor uses. Or a photographer’s authentic work is dismissed as fake because its composition is just a little too perfect. These aren't just hypotheticals; they highlight the real harm that happens when a detection score is treated as absolute proof instead of just one piece of evidence.

A high AI detection score should never be the sole basis for an accusation. Treat it as a starting point for a more thorough investigation—one that combines multiple tools with the kind of hands-on, manual inspection we’ve already covered.

This is exactly why having a human in the loop is non-negotiable. An automated tool gives you a data point, but it completely lacks context. Your own critical judgment, guided by an understanding of AI artifacts, provides that essential context.

Best Practices for Ethical Verification

To work around these limitations and use detectors ethically, you need a structured, multi-layered verification process. This shifts your role from being a passive user of a tool to an active investigator building a case. Think of it less like getting a simple "yes" or "no" and more like gathering a body of evidence.

Here are a few practices I always integrate into my own workflow:

- Cross-Reference with Multiple Tools: Never, ever rely on the verdict of a single platform. If one tool flags something, run it through at least two others. If you get consistent results across different detectors, your confidence can be much higher. Conflicting results? That’s a clear signal to lean heavily on your manual check.

- Combine with Manual Inspection: An automated score is only half the story. Always pair it with your own analysis. Look for those uncanny valley hands, the gibberish text in the background, the repetitive sentence structures, or the soulless prose that an algorithm might miss. Your eyes can often catch nuance that software just can't.

- Consider the Source and Context: Where did this content come from? Is the creator or publisher known for their integrity? An image shared by a reputable news agency is inherently more trustworthy than one from an anonymous social media account. These contextual clues are often just as important as the content itself.

- Treat Scores as Probabilities, Not Proof: This is critical. A detector gives you a confidence score, not a certainty. A 90% AI score simply means there's a high probability of machine generation based on known patterns, not that it is definitively AI. Use this data to guide your judgment, not replace it.
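One way to hold yourself to these practices is to record evidence in a structure that won't reach a conclusion until every layer is filled in. This is a minimal Python sketch. The class, the field names, and the 80-point threshold are hypothetical. Even the strongest outcome is worded as a case to review, never as proof.

```python
from dataclasses import dataclass, field


@dataclass
class Evidence:
    # Hypothetical structure: tool name -> AI score on a 0-100 scale.
    detector_scores: dict = field(default_factory=dict)
    manual_review_done: bool = False
    # Artifacts you found yourself, e.g. "gibberish sign text", "warped door frame".
    manual_red_flags: list = field(default_factory=list)
    # Provenance notes, e.g. who published it and where it first appeared.
    source_notes: list = field(default_factory=list)


def assess(ev: Evidence, high: float = 80.0) -> str:
    """Summarize the case; deliberately never returns a definitive verdict."""
    if len(ev.detector_scores) < 2:
        return "incomplete: cross-reference with more tools"
    if not ev.manual_review_done:
        return "incomplete: do a manual inspection"
    if not ev.source_notes:
        return "incomplete: check the source and context"
    all_high = all(score >= high for score in ev.detector_scores.values())
    if all_high and ev.manual_red_flags:
        return "strong case for AI generation: review before acting"
    return "mixed evidence: treat scores as probabilities and keep investigating"
```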

By building these principles into your process, you can tap into the power of AI detectors while protecting yourself—and others—from the pitfalls of their limitations. It’s this balanced approach that’s key to responsible and accurate content verification.

Common Questions About AI Detection

Even with a solid workflow in place, you're going to hit some gray areas. The world of AI detection is full of nuance, so let’s walk through a few of the most common questions that pop up when people start trying to separate human-made content from machine-generated fakes.

Getting your head around these issues will help you make better judgment calls, especially when the lines get blurry.

Can AI Detectors Be Fooled?

Yes, absolutely. This isn't just a theoretical possibility; it happens all the time. You have to remember that some people are actively trying to game these systems.

A savvy user might run AI text through a paraphrasing tool, deliberately add a few subtle grammatical errors, or weave their own writing in with AI-generated paragraphs. This "mosaic" technique is a killer for algorithms that rely on spotting consistent patterns. For images, something as simple as a new filter, a tight crop, or a slight color adjustment can be enough to throw a detector off the scent.

This is exactly why you can't just plug something into one tool and call it a day.

Think of it like building a legal case. The result from your first tool is just one piece of evidence. You need to back it up with other sources—different detectors and, most importantly, the hard evidence you find during your own hands-on review.

How Accurate Are Detectors for Different Languages?

The accuracy can swing wildly from one language to another. The vast majority of top-tier detection models were trained on enormous English-language datasets. Because of this, they’re generally quite sharp and reliable when they're analyzing English content.

However, their performance often nosedives for other languages. This is particularly true for languages with fewer digital resources available to train machine learning models. The grammar, idioms, and stylistic norms are completely different, and a model that hasn’t been specifically trained on that language will struggle to find any meaningful patterns.

If you’re trying to verify content in a language other than English, your best approach is to:

- Find a specialized tool that was built and trained specifically for that language.

- Lean much more heavily on your own manual inspection. Your knowledge of the language and culture will be far more trustworthy than a poorly optimized algorithm.

What if a Tool Flags My Own Work as AI?

It’s incredibly frustrating, but getting a false positive—where a tool flags your genuinely human-made work as AI—is a known problem. The key is not to panic. Instead, have a plan to prove your work is authentic.

First, document your creation process. Your digital paper trail is your best alibi. This could be the version history in a Google Doc, earlier drafts of an article, or the original RAW files from your camera for a photograph. This kind of evidence shows a clear human workflow that AI generation simply can’t replicate.

Next, run your work through a few other detection tools. If that first flag was just a one-off and other detectors agree it’s human, you can use that to argue the initial result was a fluke. When you have to prove it, present all this information clearly. Explain that false positives are a documented limitation of the technology and that your process documentation is the ultimate proof of human origin.

At AI Image Detector, we know that clarity and reliability are everything. Our tool is built to give you a fast, transparent analysis, helping you distinguish between human creativity and AI generation with confidence. Test an image for free and see how it works.