How to Improve Media Literacy in the AI Era

Improving your media literacy isn't a passive activity; it's about actively interrogating what you see. You have to learn to question sources, spot emotional manipulation, and get comfortable using tools to verify authenticity, especially now that AI-generated content is everywhere. These skills are no longer just nice to have; they're critical for making sense of the world.

Why Media Literacy Is More Critical Than Ever

We've gone way past simple "fake news." The real challenge today is sophisticated, AI-driven content that can look and feel completely real. We’re talking about everything from synthetic images popping up in news reports to automated propaganda campaigns flooding social media. The speed and scale of misinformation have created a whole new reality for everyone.

Old-school verification skills are still a good foundation, but they often don't cut it on their own. This new environment requires a modern toolkit and a more resilient mindset. Learning to be media literate isn't just an academic exercise—it’s a fundamental skill for anyone who wants to maintain their professional integrity, build public trust, and support a healthy democracy. This guide will give you practical strategies you can start using right away.

The New Information Battlefield

The core issue is that technology has made it incredibly easy for almost anyone to create highly convincing fakes. What used to take a Hollywood budget and a team of CGI artists can now be done with a simple app on your phone. This changes the game for a lot of people:

- Journalists are constantly challenged to verify photos and videos from sources that could be synthetically altered.

- Educators have to teach students how to navigate a world where essays, images, and even video presentations can be generated by AI.

- Corporate teams must stay vigilant against deepfake scams that target employees with fake, urgent requests from their "boss."

The goal isn't to make you cynical about everything you see online. It's to foster a healthy, evidence-based skepticism. It's about empowering yourself to ask the right questions and knowing how to find the answers.

A huge part of building this new skillset is understanding what synthetic media is. These AI-powered creations are quickly becoming a dominant force in our online world.

A Global Imperative With Proven Solutions

The need for strong media literacy education isn't just a theory; its real-world impact shows up on a national scale. Over the last decade, countries that make media literacy a core part of their education system have shown far greater resilience to disinformation, and Finland is the standout example.

The 2023 Media Literacy Index puts Finland, Denmark, and Norway at the very top. What do they have in common? They all have long-standing national strategies for teaching these skills from a young age. The pattern is consistent: structured, widespread education is one of the best defenses we have for creating a more informed and less vulnerable society. You can dive into the full country rankings and their methodologies to see the evidence for yourself.

Your Foundational Toolkit for Digital Verification

Before you can spot the sophisticated fakes, you need a solid, repeatable workflow for that first-glance analysis. This isn't about getting lost in theory; it's about knowing exactly what to do the moment you encounter a piece of questionable content.

Mastering these initial checks will catch most of the low-effort misinformation you bump into every day.

It all starts by looking past the surface. A professional-looking website or a plausible-sounding URL isn't a green light anymore. You have to dig into the source itself—who's the author, what's their background, and what are the publication's known biases? Getting this right sets the stage for everything else.

Go Beyond the Source With Lateral Reading

One of the most powerful habits you can build is lateral reading. Instead of staying on a single webpage and trying to figure it out in a vacuum, you immediately open new browser tabs. The goal is simple: find out what other trusted, independent sources are saying about the claim, the author, or the website itself.

Let's say a shocking headline from a site you've never heard of pops into your feed. Before you even read the article, you should:

- Search for the publication’s name alongside words like "bias," "credibility," or "fact-check."

- Look up the author. Do they have a real professional history? What else have they written?

- Search for the core claim of the article. Are major news outlets or fact-checking organizations like Snopes or the Associated Press reporting the same thing?
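If you do this often, the tab-opening step is easy to script. Below is a minimal Python sketch, not a tool this guide depends on: the function names and example inputs are hypothetical, and the URL format is simply Google's standard search address. It turns the three checks above into search queries; judging the results is still your job.

```python
from urllib.parse import quote_plus

# Modifier words from the lateral-reading checklist above.
CREDIBILITY_TERMS = ("bias", "credibility", "fact-check")


def lateral_reading_queries(publication: str, author: str, claim: str) -> list[str]:
    """Build the searches to open in new tabs before reading an article (hypothetical helper)."""
    # Quoting keeps the search engine from splitting a name into unrelated words.
    queries = [f'"{publication}" {term}' for term in CREDIBILITY_TERMS]
    queries.append(f'"{author}"')          # does the author have a real professional history?
    queries.append(f"{claim} fact check")  # is anyone else reporting or debunking the claim?
    return queries


def to_search_urls(queries: list[str]) -> list[str]:
    # URL-encode each query for Google's search endpoint; swap in any engine you prefer.
    return ["https://www.google.com/search?q=" + quote_plus(q) for q in queries]


if __name__ == "__main__":
    for url in to_search_urls(
        lateral_reading_queries("Daily Example Times", "Jane Doe", "city bans bicycles")
    ):
        print(url)
```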

This technique gives you context in seconds. If nobody else is reporting a massive story, or if the source has a documented history of pushing garbage, you have your answer without ever getting sucked into the manipulative details of the original piece.

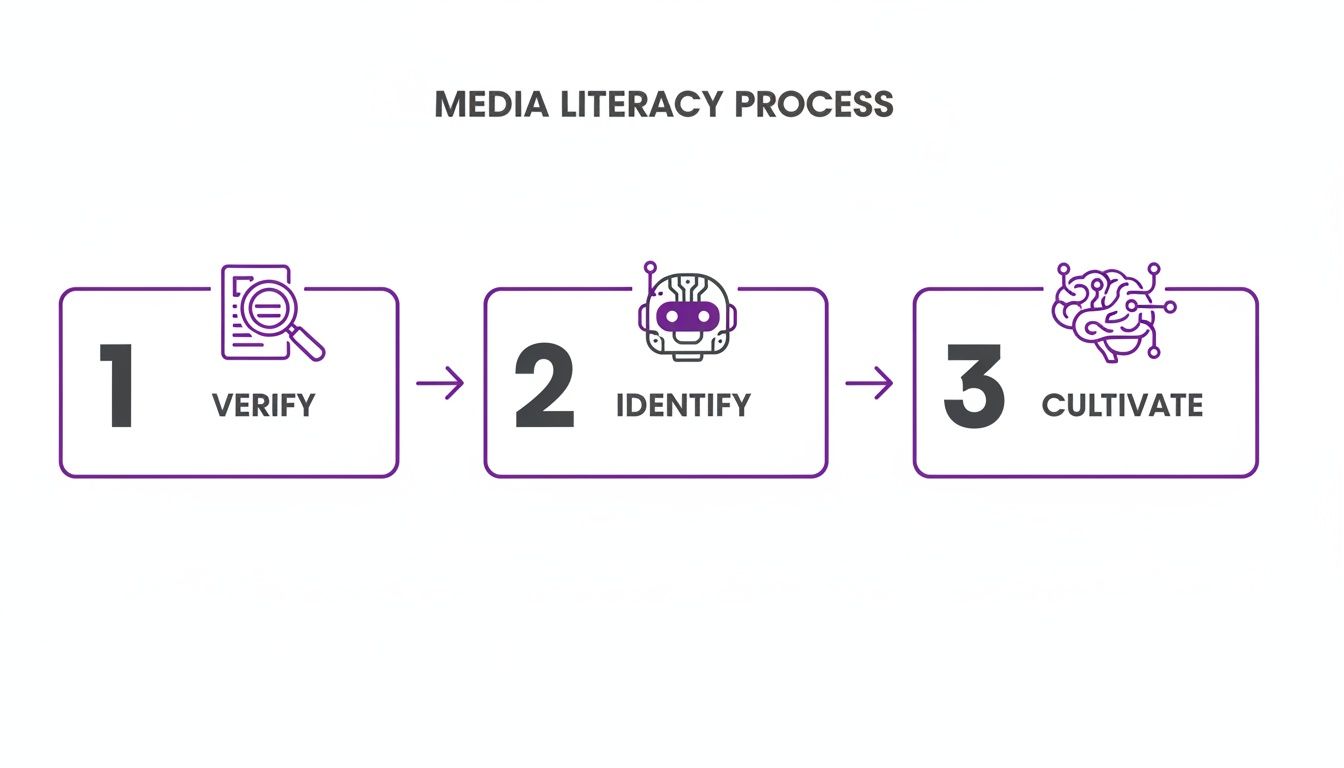

This simple flowchart breaks down the process: you start by verifying the basics, then you can move on to identifying specific threats, and finally, you cultivate a long-term critical mindset.

Verification is always the essential first step. It’s the foundation that allows you to confidently identify modern fakes and build those critical thinking muscles for the long haul.

Decode the Emotional Cues

Misinformation thrives on emotion. It’s often designed to make you feel a strong, immediate reaction—anger, fear, excitement—to shut down your critical thinking. Before you do anything else, just pause and analyze how the content is presented.

Keep an eye out for tells like:

- Emotionally loaded language in headlines (“SHOCKING,” “OUTRAGEOUS,” “Secret Exposed!”).

- Leading questions that frame a narrative before showing any evidence.

- "Us-vs-them" framing that pits one group of people against another.

A Quick Tip from the Trenches: If a piece of content gives you an intense emotional spike, that should trigger your skepticism, not your share button. That feeling is a deliberate tactic meant to bypass your rational brain.

To put this into practice, here's a simple checklist to run through when you first encounter a piece of digital content.

Digital Content Verification Checklist

This table provides a quick, step-by-step workflow for the initial verification of any digital media you encounter.

| Verification Step | Key Question to Ask | Example Action |
|---|---|---|
| Check the Source | Have I heard of this publication? Is it reputable? | Open a new tab and search for the source's name + "fact check" or "bias." |
| Investigate the Author | Is the author a real person with expertise on this topic? | Look up the author's name to find their professional bio, other articles, or social media profiles. |
| Analyze the Tone | Does the headline or language seem designed to make me angry or afraid? | Look for all-caps, excessive punctuation (!!!), or loaded words like "scandal" or "cover-up." |
| Cross-Reference Claims | Are other reliable news outlets reporting this same information? | Search for the main claim of the article on a search engine and filter for "News." |
| Examine the Evidence | Does the article link to its original sources? Are they credible? | Click on the links provided. Do they go to primary sources like studies or official reports? |

Running through these questions takes less than a minute, but it can stop the vast majority of low-quality misinformation in its tracks.
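The "Analyze the Tone" row is the most mechanical of these checks, so it lends itself to a quick heuristic. This Python sketch flags all-caps words, runs of exclamation marks, and words from a small loaded-word list. The word list and the three-letter threshold for all-caps are illustrative assumptions, not an established standard.

```python
import re

# Illustrative loaded-word list, drawn from the examples in this guide (an assumption, not a standard).
LOADED_WORDS = {"shocking", "outrageous", "scandal", "cover-up", "exposed", "secret"}


def tone_red_flags(headline: str) -> list[str]:
    """Return the emotional-manipulation tells found in a headline."""
    flags = []
    # Any word of three or more capital letters, e.g. "SHOCKING".
    if re.search(r"\b[A-Z]{3,}\b", headline):
        flags.append("all-caps words")
    # Two or more exclamation marks in a row, e.g. "!!" or "!!!".
    if re.search(r"!{2,}", headline):
        flags.append("excessive punctuation")
    # Lowercase words, keeping hyphenated compounds such as "cover-up" intact.
    words = set(re.findall(r"[a-z]+(?:-[a-z]+)*", headline.lower()))
    found = sorted(words & LOADED_WORDS)
    if found:
        flags.append("loaded words: " + ", ".join(found))
    return flags


print(tone_red_flags("SHOCKING cover-up exposed!!!"))
print(tone_red_flags("City council approves new budget"))
```

Expect false positives: an acronym like "NASA" trips the all-caps check. Treat any flag as a reason to slow down, not as a verdict.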

Master the Reverse Image Search

For any visual content, reverse image search is your most powerful ally. It lets you trace an image back to its origin, showing you where it first appeared and whether it's been altered or misrepresented.

Here’s a real-world scenario: A dramatic photo of a crowded protest goes viral, claiming to show massive support for a current cause.

Your workflow is simple:

- Save or copy the image.

- Upload it to a reverse image search engine like Google Images (now powered by Google Lens) or TinEye.

- Analyze the results. Pay close attention to the earliest indexed version of the photo.
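Search engines don't publish exactly how they match images, but one well-known technique for finding near-duplicates is perceptual hashing. The sketch below implements a simple average hash in plain Python, assuming the image has already been shrunk to a small grayscale grid (libraries such as Python's `imagehash` package handle the resizing for you). The toy grids are made up for illustration; they show why a recompressed copy of a photo can still match the original, and also where this simple method breaks.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Average hash: one bit per pixel, set when the pixel is brighter than the mean.

    `pixels` is assumed to be a small, already-downscaled grayscale grid (values 0-255).
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    # Count of differing bits; a small distance suggests the same underlying image.
    return bin(a ^ b).count("1")


# Toy 4x4 grids standing in for downscaled images (hypothetical data).
original = [[10, 200, 30, 220], [15, 210, 25, 230], [12, 205, 35, 225], [8, 215, 28, 235]]
recompressed = [[14, 196, 33, 218], [12, 214, 22, 226], [15, 201, 38, 229], [5, 219, 31, 231]]
mirrored = [row[::-1] for row in original]  # the same photo, flipped left to right

print(hamming_distance(average_hash(original), average_hash(recompressed)))  # 0
print(hamming_distance(average_hash(original), average_hash(mirrored)))      # 16
```

The recompressed copy matches exactly, but the mirrored copy scores the maximum distance even though it is the same photo. Simple hashes are easily fooled by flips and crops, so a search that turns up nothing is not proof that an image is original.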

You’ll often find the photo is from a different event, a different year, or even a different country. You might also find fact-check articles that have already debunked it. It's a five-second action that can instantly expose a lie. As AI-generated visuals become more common, it's also smart to learn how to detect AI in images, adding another crucial layer to your toolkit.

Spotting AI Fakes and Synthetic Media

We've entered a new frontier of digital verification, and AI-generated content is the primary challenge. Learning to identify synthetic media isn't just for tech experts anymore; it's a fundamental part of modern media literacy. As the quality of these fakes improves, relying on our eyes alone is becoming a risky bet.

To stay ahead, we have to understand how advances in AI software development are shaping the information we see. As the models get smarter, our detection methods must evolve right alongside them. This means pairing a sharp human eye with the right technology.

Starting With the Obvious AI Tells

Before you reach for any tool, it’s worth training your eyes to spot the classic mistakes that AI models still make. These digital artifacts are often the quickest way to flag a suspicious image without needing any special software. While these flaws are getting harder to find, they’re still common enough to be a great first line of defense.

Keep an eye out for these tell-tale signs:

- Anatomical Oddities: AI generators are notorious for messing up hands, often adding an extra finger or creating bizarre, unnatural joints. Ears can also look strange, limbs might be oddly proportioned, and teeth can appear melted or misaligned.

- Garbled Text: If you see text in the background—on a sign, a book, or a t-shirt—zoom in. AI often produces a jumbled mess of nonsensical characters because it's replicating the look of text without understanding language. It’s a dead giveaway.

- Unnatural Textures: Skin sometimes looks too smooth and waxy, almost like a digital painting. Hair can also be a clue, appearing as a solid, uniform mass rather than individual strands.

- Background Inconsistencies: The environment is often where things fall apart. Look for illogical architecture, distorted objects, or patterns that warp and bend strangely. Shadows are another big one—does the lighting source actually match the shadows being cast?

Think of these manual checks as your first gut-check. If an image has several of these "tells," you can be fairly confident it's not authentic. But when those clues aren't there, you need to dig deeper.

A Hands-On Walkthrough of an AI Image Detector

When a visual inspection isn't enough, an AI image detector is the next step. These platforms are built to analyze images for the subtle, pixel-level artifacts and patterns that AI models leave behind—clues that are invisible to the human eye.

The process is usually incredibly simple. You just drag and drop your image file or upload it directly from your computer. The tool then runs the image through its detection models to look for signs of machine generation.

Within seconds, you’ll get a result. This typically includes a clear verdict—like "Likely AI-Generated" or "Likely Human"—along with a confidence score. Pay close attention to that score. A 95% confidence level is a much stronger signal than a 55% one.

Interpreting Nuanced Results in Practice

Not every analysis will give you a clean, yes-or-no answer. The digital world is messy, and your results will sometimes reflect that. Let's walk through three different scenarios to see how you might interpret what you find.

Case Study 1: The Obvious Human Photo

You upload a standard, unedited photograph taken on your phone.

- Expected Result: "Likely Human" with a high confidence score, like 98%.

- Interpretation: This is a clear-cut case. The tool found no significant markers of AI generation, so you can be fairly confident the image isn't synthetic. That doesn't prove it's being shown in its original context, so you should still do your basic source verification.

Case Study 2: The Clear AI Creation

Next, you test an image of an astronaut riding a unicorn in the style of Van Gogh—something that obviously isn't real.

- Expected Result: "Likely AI-Generated" with a near-perfect confidence score, like 99%.

- Interpretation: The detector easily picked up on the statistical fingerprints of an image generator such as a diffusion model. The verdict is unambiguous and confirms what your eyes already told you.

Case Study 3: The Complex Mixed-Media Piece

Finally, you analyze a real photograph that has been heavily edited. Maybe a graphic designer used AI-powered features in Photoshop to remove an object or generate a new background.

- Expected Result: A mixed or low-confidence result. It might say "Likely AI-Generated" but with only 60% confidence, or the tool might return an "inconclusive" verdict.

- Interpretation: This is where your critical thinking is essential. A low-confidence score doesn't mean the tool failed; it means the content is complex. It's a signal that while the base image might be human, it has likely undergone significant digital modification, possibly with AI tools. This result should prompt you to investigate how and why the image was edited.

An AI detection tool isn't an oracle. It's a powerful data point in your verification process, meant to augment your judgment, not replace it.
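One way to turn the three case studies above into a team habit is to write the interpretation rules down. This Python sketch maps a detector's verdict and confidence to a next step. The verdict labels mirror the examples in this guide, but the 0.90 and 0.70 thresholds are hypothetical values chosen for illustration; real detectors report scores differently, so calibrate against the tool you actually use.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration; no detector publishes these values.
STRONG_SIGNAL = 0.90
WEAK_SIGNAL = 0.70


@dataclass
class DetectorResult:
    verdict: str       # e.g. "Likely AI-Generated", "Likely Human", or "Inconclusive"
    confidence: float  # 0.0 to 1.0


def next_step(result: DetectorResult) -> str:
    """Map a detector result to a follow-up action; the result is evidence, not a ruling."""
    # Case Study 3: inconclusive or low-confidence results point to editing, not failure.
    if result.verdict == "Inconclusive" or result.confidence < WEAK_SIGNAL:
        return "mixed signal: investigate how and why the image was edited"
    if result.confidence < STRONG_SIGNAL:
        return "moderate signal: corroborate with reverse image search and source checks"
    # Case Study 2: a strong AI verdict.
    if result.verdict == "Likely AI-Generated":
        return "strong AI signal: treat as synthetic unless the source proves otherwise"
    # Case Study 1: a strong human verdict still needs source verification.
    return "strong human signal: still verify the source and read laterally"


print(next_step(DetectorResult("Likely AI-Generated", 0.60)))
```

Keeping the rules in one place means everyone on the team reads a 60% score the same way, instead of each person improvising.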

Weaving Media Literacy Into Your Daily Work

Knowing how to spot fakes on your own is one thing. Building that skill into a consistent, team-wide habit is a whole different ballgame. The real win is making media literacy an operational standard, a reflexive part of your culture that protects your organization from risk.

This is about moving from personal know-how to a shared, documented process.

Whether you're in a newsroom chasing a deadline, a classroom shaping young minds, or a corporate office protecting company assets, the goal is the same. We need to shift verification from a reactive chore to a proactive, ingrained workflow. When everyone on the team uses the same language and follows the same steps, your collective defense against manipulation gets a whole lot stronger.

Protocols for Newsrooms and Content Teams

For journalists and content creators, the stakes couldn't be higher. A single piece of unverified user-generated content (UGC) can vaporize credibility built over years. This is why a formal, multi-step verification protocol isn’t just a nice-to-have—it's an absolute necessity.

Your workflow needs to be a clear, documented process that every single person follows when handling content from outside sources. It’s all about consistency and accountability.

Here’s a practical protocol you can adapt:

- First-Pass Triage: The moment content comes in, run the image or video through a reverse image search and an AI Image Detector. Document what you find right away, including confidence scores and any potential source matches.

- Vet the Source: Who sent this? If you can, contact them directly. Work to independently verify their identity and location. Look for corroborating evidence on their social media profiles or other public activity. Is their story consistent?

- Geolocation Check: Use mapping tools to confirm the visual evidence actually matches the claimed location. Hunt for unique landmarks, street signs, specific architectural details—anything that anchors the image to a real place.

- The Final Review: Before anything goes live, a second team member must review the verification steps and sign off. Think of it as a "two-key" system. It's the simplest way to prevent an individual oversight from becoming a major public error.
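If your team tracks verification in a shared system, the protocol above maps naturally onto a simple record. This is a hypothetical Python data structure, not any particular newsroom tool. Its one firm rule is the core of the "two-key" system: it refuses a sign-off from the person who did the verification.

```python
from dataclasses import dataclass, field


@dataclass
class VerificationRecord:
    """Hypothetical log entry for one piece of UGC, following the four protocol steps."""
    content_id: str
    triage_notes: str = ""           # reverse image search and detector results, incl. confidence
    source_verified: bool = False    # step 2: the sender's identity and story check out
    location_confirmed: bool = False  # step 3: geolocation matches the claimed place
    verified_by: str = ""            # who performed steps 1-3
    approvals: set[str] = field(default_factory=set)

    def sign_off(self, reviewer: str) -> None:
        # The second key must belong to someone other than the original verifier.
        if reviewer == self.verified_by:
            raise ValueError("reviewer must differ from the person who did the verification")
        self.approvals.add(reviewer)

    def ready_to_publish(self) -> bool:
        # Every step must be documented and at least one independent reviewer must approve.
        return (
            bool(self.triage_notes)
            and self.source_verified
            and self.location_confirmed
            and bool(self.verified_by)
            and len(self.approvals) >= 1
        )
```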

Transparency is your best friend here. If you use an AI-generated image for illustrative purposes, label it clearly. When you debunk a fake, show your work. Explain to your audience how you verified a piece of content. This doesn't just build trust; it educates your community, creating a ripple effect of better media literacy.

Classroom Exercises for Educators

Teaching media literacy is all about hands-on, engaging activities, not dry lectures. The challenge is immense. A 2023 survey revealed that a staggering 77% of K–12 educators feel unprepared to teach the skills students need in an AI-driven world.

At the same time, about 75% of state social studies specialists report that media literacy is now required in their standards. This highlights a critical need for practical, effective classroom resources. You can get a deeper look into the current state of media literacy policy and its impact on education.

Here are a few exercises you can use tomorrow:

- The "Spot the Synthetic" Challenge: Give students a mix of five real photos and five AI-generated images. In small groups, have them identify the fakes and—this is the important part—explain why. They should point to specific clues like mangled hands, nonsensical text, or odd lighting.

- Trace the Meme: Pick a popular meme and have students work backward to find its origin. This is a brilliant way to teach them about context collapse, where images get stripped of their original meaning as they spread. It also gets them comfortable using reverse image search tools.

- Build a "Credible Source" Rubric: Don't just hand students a list of approved websites. Challenge them to build their own assessment rubric. What makes a source trustworthy? Guide them to define criteria like author expertise, cited evidence, publication date, and potential bias.

These kinds of activities turn abstract ideas into sticky, memorable skills that empower students to navigate their digital worlds with confidence.

Mitigating Risk in a Corporate Setting

In the corporate world, the threats look different but are just as damaging. Deepfake scams, doctored documents, and brand-focused disinformation campaigns can lead to huge financial and reputational losses. This is why media literacy has to be a core part of your security awareness training.

Consider integrating these practices into your operations:

- Deepfake Fire Drills: Run a simulation of a voice phishing or deepfake video call scam. Have a "CEO" make an urgent, out-of-character request for a wire transfer. The goal is to train employees to instinctively pause, question anything unusual, and verify the request through a completely separate channel.

- Document Verification Protocol: For teams in finance, legal, or HR, make it mandatory to check any official-looking document received digitally. This might mean using a metadata viewer to look for signs of tampering or, more simply, calling the sender on a known, trusted number to confirm they actually sent it.
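The rule shared by both practices is that confirmation happens through a channel the requester doesn't control. This Python sketch encodes that rule for a single request. The directory, e-mail address, phone numbers, and list of sensitive actions are all hypothetical placeholders you would replace with your own.

```python
from dataclasses import dataclass

# Hypothetical directory, maintained independently of any incoming message.
TRUSTED_CONTACTS = {"ceo@example.com": "+1-555-0100"}

# Hypothetical list of actions that always require out-of-band confirmation.
SENSITIVE_ACTIONS = {"wire transfer", "gift cards", "change bank details"}


@dataclass
class IncomingRequest:
    claimed_sender: str
    action: str
    callback_number_in_message: str  # never trusted: whoever sent the message controls it


def callback_plan(req: IncomingRequest) -> str:
    """Decide how to confirm a sensitive request through a separate, known channel."""
    if req.action not in SENSITIVE_ACTIONS:
        return "no callback required"
    known = TRUSTED_CONTACTS.get(req.claimed_sender)
    if known is None:
        return "escalate: sender not in trusted directory"
    # Deliberately ignore req.callback_number_in_message and use the directory number.
    return f"pause and call {known} before acting"


print(callback_plan(IncomingRequest("ceo@example.com", "wire transfer", "+1-555-0199")))
```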

By making these practices routine, you build resilience right into your company's DNA. You turn every employee into a human firewall, ready to spot and stop a manipulation attempt before it does any real harm.

Building a Mindset Resilient to Misinformation

Verification tools and technical know-how are crucial, but they're only half the battle. The other, much tougher side of the fight is psychological. To truly become media literate, we need to build a mindset that’s naturally resilient to manipulation. This starts with understanding the cognitive shortcuts and emotional triggers that make every single one of us vulnerable.

The biggest culprit here is confirmation bias. It's our brain's built-in reflex to find, favor, and remember information that confirms what we already think. It just feels good to be right, and our minds are hardwired to look for evidence that keeps that feeling going. This makes us prime targets for content perfectly engineered to fit our existing worldview.

This isn't a character flaw; it's just how our brains work. Simply recognizing this fact is the first step toward building a solid defense. The single most effective tactic I’ve found is to consciously slow down, creating a critical pause between seeing something and reacting to it.

Question Your Emotional Reactions

Misinformation is designed to hit you hard and fast with a strong emotional response: outrage, fear, excitement, you name it. Those feelings are a Trojan horse, meant to bypass your rational brain and push you toward a knee-jerk share, comment, or conclusion.

A core part of building a resilient mindset is learning to treat your own emotional spike as the first red flag.

When a headline or an image gives you that intense rush, just stop. Take a breath. Ask yourself a few questions:

- Why am I feeling this so strongly? Is the language loaded or designed to create an "us-vs-them" dynamic?

- Is this feeling clouding my judgment? Intense emotions are the enemy of critical thinking; they make us less likely to sweat the details.

- What if this content supported the opposite view? This simple thought experiment helps detach the claim from your emotional investment.

This habit of self-reflection moves you from being a passive target for emotional triggers to an active, conscious observer. You start to see the manipulation for what it is. To go deeper into how these tactics are used in large-scale campaigns, our guide on effective fake news detection strategies is a great next step.

Cultivate Healthy Skepticism Without Cynicism

There’s a fine line between healthy skepticism and corrosive cynicism. Skepticism is about asking questions and demanding evidence. Cynicism is about dismissing everything out of hand. The goal is to become a thoughtful skeptic, not a reflexive cynic who trusts nothing.

Healthy skepticism is an active state of inquiry, not a passive state of disbelief. It's the mindset that says, "That's an interesting claim. Now, show me the evidence."

This approach requires a dose of intellectual humility—the willingness to admit you might be wrong. It means being open to changing your mind when you encounter credible, verifiable evidence that contradicts what you thought you knew. Part of this resilience also comes from understanding how you can be targeted in the first place. Learning some basic strategies to stay anonymous online can actually reduce your exposure to these kinds of campaigns.

The Global Skill Gap in Critical Thinking

Boosting media literacy on a global scale is about more than basic education. It requires specific, targeted digital verification skills, especially for younger generations who have grown up online.

The gap between being able to read and being able to critically dissect an AI-generated image is massive. For instance, the International Computer and Information Literacy Study (ICILS) found that 43% of 14-year-old students across the EU don't reach a basic level of digital skills.

Think about that. More than two in five of these students lack the fundamental competence to navigate online information critically, even though basic literacy is widespread: around 86.3% of people aged 15 and over can read and write worldwide. Being able to read is not the same as being able to evaluate what you read, so we have to move beyond traditional literacy and start equipping everyone with the mindset needed for digital scrutiny.

Ultimately, your mindset is your first and best line of defense. By understanding your own cognitive weak spots and actively practicing habits of slow thinking and emotional awareness, you can transform from a passive consumer into a deliberate, engaged, and resilient navigator of the information you encounter every day.

Common Questions About Media Literacy

Even with the best training and a solid game plan, you're bound to run into questions. Building media literacy isn't a one-and-done task; it's a constant process of learning and adapting as the information landscape shifts. This section is here to tackle some of the most common questions that come up along the way.

Think of this as your quick-reference guide for those moments when you're putting these skills to the test in the real world.

What’s the Single Most Important Skill for Media Literacy?

If I had to boil it all down to one thing, it's this: the habit of pausing to verify before you share. That simple act of stopping to ask, "Wait a minute, is this legit?" is the bedrock of everything else.

This intentional pause is your defense against an emotional, knee-jerk reaction to something shocking or outrageous. It gives you the mental space to switch from passive consumer to active investigator and actually apply the critical thinking skills you've learned. Without that moment of hesitation, all the advanced verification tools in the world won't do you any good.

The goal isn't just to consume information more wisely, but to share it more responsibly. That pause is the circuit breaker that stops misinformation from spreading through your network, making you part of the solution.

How Can I Teach Media Literacy to Students Without Overwhelming Them?

The trick is to make it relevant and hands-on, not some dry lecture about the dangers of the internet. You have to meet them where they are. Use examples from TikTok, YouTube creators, or even the video games they play.

Turn it into a detective game. "Alright, let's find the clues that show us this is real or fake." Focus on one core skill at a time.

- Spot the Ad: Start simple. Have them compare a sponsored post from their favorite influencer with one of their regular posts. What’s different?

- Track the Meme: Next, try a simple reverse image search on a funny meme to see where it really came from. The results are often surprising.

- Create Your Own "Fake News": This is one of my favorite exercises. Have students create their own (harmless) fake story. This forces them to think about how to use loaded language or out-of-context images to be persuasive. It’s an incredibly sticky lesson because they learn about manipulation tactics from the inside out.

When you make it an empowering, hands-on challenge, you give them skills they'll actually remember and use.

Are AI Detection Tools 100% Accurate?

No, and you should be wary of any tool that claims it is. The reality is that AI image generation and detection are locked in a constant arms race. As the generators get scarily good at creating realistic fakes, the detectors have to constantly evolve just to keep pace.

That said, good detectors offer a confidence score and often provide reasoning behind their analysis, which are incredibly valuable signals during an investigation. The key is to never treat an AI detector as the final word.

Instead, think of its output as one more piece of evidence. A "Likely AI-Generated" flag is a major red flag that tells you to dig much deeper. A "Likely Human" result doesn't give you a free pass, either—you still need to do your due diligence, check the source, and read laterally. It's expert testimony, not a final judgment.

Why Does Media Literacy Matter if I'm Not a Journalist?

Because in today's world, we're all publishers. Media literacy has become a fundamental life skill, right up there with financial literacy. It’s what protects you from sophisticated financial scams, dangerous health misinformation, and propaganda designed to sway your opinion without you even realizing it.

Every single time you share a post, forward an email, or repeat a story you saw online, you are broadcasting that information to your personal network of friends, family, and colleagues.

By improving your own media literacy, you ensure you're a trustworthy node in that network. You become the person who breaks the chain of misinformation, not just another link in it. Ultimately, it’s about taking control of your own information diet and helping to create a healthier, more fact-based online world for everyone.

Ready to add a powerful verification step to your workflow? The AI Image Detector delivers fast, reliable analysis to help you spot synthetic media in seconds. Try it for free and see for yourself.