A Guide to Social Media Content Moderation

Social media content moderation is how platforms review the stuff we all post and enforce their rules—what they often call 'community guidelines'. It's the system they use to decide what stays up and what comes down, trying to draw a line between free expression and user safety.

What Is Social Media Content Moderation

Think of a huge, bustling city square where billions of people gather every day. They're there to share news, connect with family, and debate ideas. Content moderation is the invisible work of keeping that square safe and usable for everyone. Without it, the space would quickly get overrun with spam, scams, and genuinely harmful behavior, driving most people away.

Every platform is constantly trying to live up to its own standards. With an unimaginable flood of posts, images, and videos hitting the internet every single second, it’s a Herculean task. Moderation has to deal with everything from obviously illegal content to the much grayer areas of harassment and misinformation.

Why Moderation Is Non-Negotiable

This isn't just about tidying up a website; it's a critical function for any online platform. The reasons they pour so many resources into it are straightforward.

- Protecting Users: The number one job is to keep people safe from things like graphic violence, hate speech, bullying, and financial scams. A platform that feels unsafe won't keep its users for long.

- Maintaining Brand Trust: Every platform is a brand, and that brand's reputation is built on the user experience. When moderation fails, you see major brand safety crises where advertisers yank their money, refusing to be associated with a toxic environment.

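- Complying with Regulations: Around the world, governments are stepping in. Laws like the EU's Digital Services Act now impose binding obligations on how platforms handle the content they host, from notice-and-action procedures for illegal content to regular transparency reporting. Good moderation isn't just a good idea anymore; in a growing number of jurisdictions, it's the law.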

The real challenge in social media content moderation is the tightrope walk. Platforms have to figure out how to protect free and open debate while also stopping that debate from causing real-world harm. It’s a messy, high-stakes balancing act.

The Core Conflict: A Balancing Act

At its heart, content moderation is a constant tug-of-war. How do you protect people from harm without squashing legitimate free expression?

Every time a moderator takes down a post, someone, somewhere, will probably call it censorship. But if they leave it up, others might see it as negligence. This is the fundamental tension that makes the job so incredibly difficult.

Navigating this requires more than just a "delete" button. It demands clear and public policies, transparent enforcement, and a fair way for people to appeal decisions. As we'll get into, this is exactly where the combination of human judgment and powerful AI becomes absolutely essential to manage the sheer scale and nuance of online conversation today.

The Partnership Between Humans And AI

Modern social media content moderation isn't a case of humans versus machines; it’s a powerful alliance. Think of it like a hospital's emergency room. AI is the triage nurse, rapidly sifting through millions of incoming posts every minute to spot the obvious, urgent problems. The human moderators are the specialist surgeons, stepping in to handle the complex cases that demand nuanced judgment and a steady hand.

This hybrid model is the bedrock of nearly every major trust and safety operation today. Frankly, neither side could handle the sheer scale and complexity of online content alone. AI gives platforms the speed to scan the globe, while humans provide the depth of understanding that technology just can't match yet.

The Irreplaceable Human Element

For all the talk about automation, human moderators are still the cornerstone of effective content moderation. They bring a level of contextual understanding that algorithms simply can't replicate.

A person can tell the difference between a genuine threat and dark humor, or spot the sarcasm that an AI might mistakenly flag as hate speech. Humans get cultural nuances, evolving slang, and the subtle visual cues in a meme that can completely flip its meaning. This ability to read the room is absolutely essential for making fair and accurate calls, especially in those tricky gray areas.

Of course, this critical work comes at a steep personal cost. The psychological toll on moderators repeatedly exposed to traumatic and disturbing content is immense, creating a persistent challenge for the entire industry.

AI: The First Line Of Defense

While humans handle the nuance, AI handles the scale. Technologies like machine learning and computer vision are the tireless sentinels that never sleep, acting as the first filter for all user-generated content. These systems are trained on massive datasets to recognize patterns associated with policy violations.

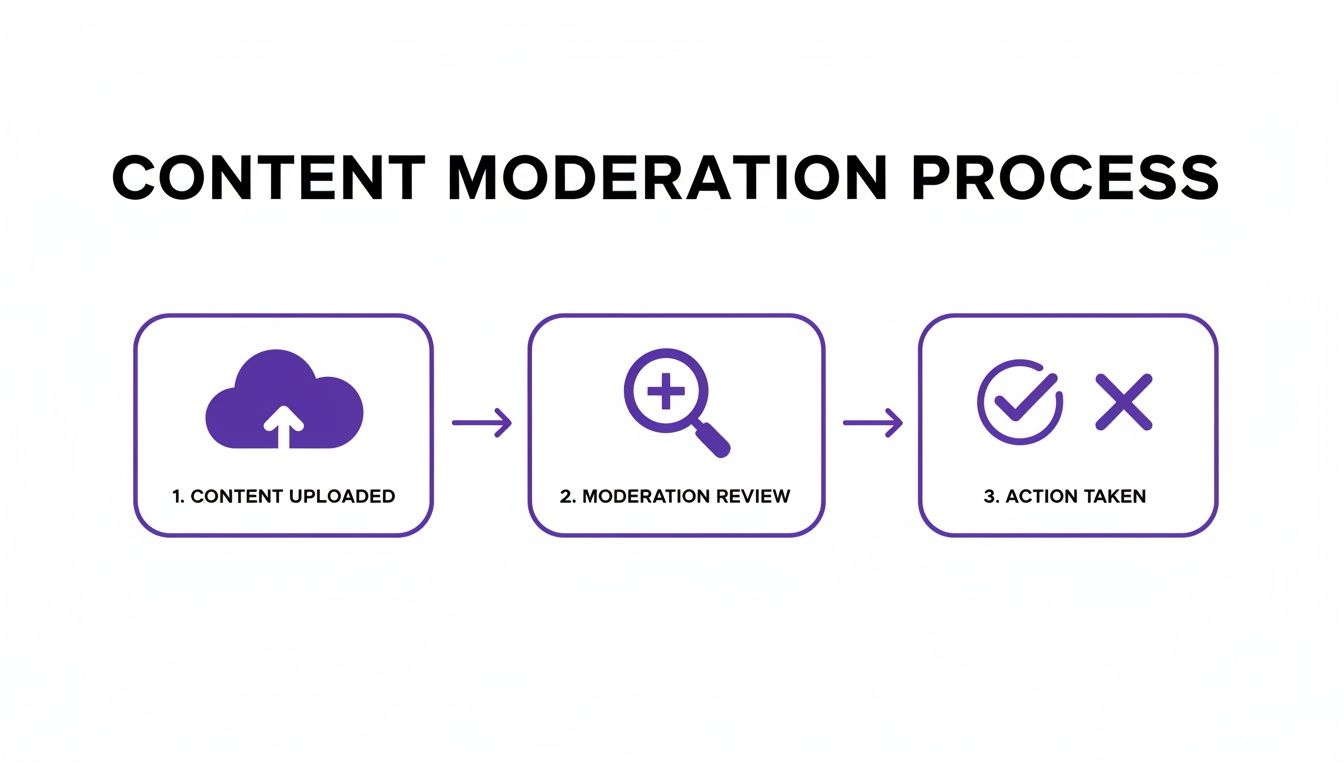

This flowchart gives a high-level look at how this process generally works.

As you can see, automation creates an essential buffer. It ensures the most obvious and harmful content is caught before a human ever has to look at it, dramatically cutting down the volume of material requiring expert review.

The results of this partnership are staggering. For example, major platforms like Meta have removed enormous volumes of violating content, acting on 15.6 million pieces on Facebook and over 5.8 million on Instagram in a single quarter. This level of enforcement is only possible with hybrid models, a trend that took off after the content explosion during the 2020 pandemic. You can find more data on the scale of modern content moderation services and their market growth.

The real goal of the human-AI partnership is to maximize both efficiency and accuracy. AI filters the noise, letting human experts focus their brainpower where it's needed most—on the ambiguous, high-stakes decisions that truly define a platform's commitment to safety.

Human vs AI Moderation: A Comparative Overview

To better understand why this hybrid approach is so dominant, it helps to see the strengths and weaknesses of each side laid out. This table breaks down what humans and AI each bring to the table.

| Feature | Human Moderation | AI Moderation |
|---|---|---|
| Scale & Speed | Slow; limited by individual capacity. Can't review millions of posts in real-time. | Massive scale; can process millions of items per minute, 24/7. |
| Context & Nuance | Excellent. Understands sarcasm, cultural context, slang, and evolving threats. | Poor. Struggles with context, satire, and complex human communication. Often makes literal errors. |
| Consistency | Can be inconsistent due to fatigue, bias, or subjective interpretation. | Highly consistent. Applies the same rules to every piece of content without tiring. |
| Cost | High. Requires salaries, training, and significant investment in wellness programs. | Lower operational cost at scale, but requires significant upfront investment in development. |
| Adaptability | Can adapt to new threats and policy changes quickly with proper training. | Requires retraining on new datasets, which can be slow and resource-intensive. |
| Psychological Impact | High risk of burnout, PTSD, and other mental health challenges for moderators. | None. Machines do not suffer from exposure to harmful content. |

Ultimately, the table shows that humans excel at quality and interpretation, while AI excels at quantity and speed. By combining them, platforms get the best of both worlds.

How The Hybrid Model Works In Practice

The synergy between humans and AI creates a workflow that is both incredibly fast and genuinely thoughtful. The process usually follows a few key steps:

- Automated Flagging: AI systems scan new content in real-time. They’re great at catching clear violations like nudity, graphic violence, or spam using predefined patterns.

- Confidence Scoring: The AI assigns a "confidence score" to each flag, an estimate of how likely it is that the content actually violates a policy. High-confidence flags might trigger an automatic removal.

- Human Review Queue: Content with lower scores, or posts flagged by users, gets routed to a queue for a human to look at. This is where moderators apply their expertise.

- Feedback Loop: The decisions moderators make are fed back into the AI models. This continuous training helps the algorithm learn from its mistakes and get smarter over time.
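To make these four steps concrete, here is a minimal Python sketch of the routing logic. It is illustrative only, not any platform's actual system: the thresholds (`AUTO_REMOVE_THRESHOLD`, `REVIEW_THRESHOLD`), the `Post` and `ModerationPipeline` classes, and the `training_labels` list standing in for the feedback loop are all hypothetical, and the classifier score is assumed to be computed elsewhere.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds; real platforms tune these per policy and per market.
AUTO_REMOVE_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60


@dataclass
class Post:
    post_id: str
    score: float               # classifier's estimated probability of a violation
    user_reported: bool = False


@dataclass
class ModerationPipeline:
    removed: list = field(default_factory=list)
    review_queue: list = field(default_factory=list)
    published: list = field(default_factory=list)
    training_labels: list = field(default_factory=list)

    def route(self, post: Post) -> str:
        """Automated flagging plus confidence scoring: decide where a post goes."""
        if post.score >= AUTO_REMOVE_THRESHOLD:
            self.removed.append(post.post_id)
            return "auto_removed"
        # Lower-confidence flags and user reports go to a human.
        if post.score >= REVIEW_THRESHOLD or post.user_reported:
            self.review_queue.append(post)
            return "human_review"
        self.published.append(post.post_id)
        return "published"

    def record_decision(self, post: Post, violates: bool) -> None:
        """Feedback loop: apply the moderator's verdict and keep it as a training label."""
        self.review_queue.remove(post)
        self.training_labels.append((post.post_id, violates))
        if violates:
            self.removed.append(post.post_id)
        else:
            self.published.append(post.post_id)


if __name__ == "__main__":
    pipeline = ModerationPipeline()
    for post in [Post("a", 0.99), Post("b", 0.72),
                 Post("c", 0.10, user_reported=True), Post("d", 0.05)]:
        print(post.post_id, pipeline.route(post))
    # A moderator confirms "b" but clears "c"; both verdicts become labels.
    b, c = pipeline.review_queue
    pipeline.record_decision(b, violates=True)
    pipeline.record_decision(c, violates=False)
    print(pipeline.training_labels)  # [('b', True), ('c', False)]
```

In a real system the labels would feed a periodic retraining job; here they simply accumulate in a list.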

Learning from other fields can also be useful. For instance, many help desks have perfected the art of blending AI automation and human expertise to provide better support. This same collaborative approach ensures that clear violations are handled swiftly by technology, while the tricky, context-heavy cases get the careful consideration only a human can provide. It's this balance that makes safer online communities possible at a global scale.

Building an Effective Moderation Policy

At the heart of any solid content moderation strategy is a clear and transparent rulebook. This policy, which you probably know as "community guidelines," is far more than just a stuffy legal document. It's the platform's handshake deal with its users—defining what’s okay, what’s not, and what happens when someone crosses the line.

Think of it as the constitution for your online community. Without it, you’re left with chaos. Enforcement feels random, users get frustrated, and trust evaporates. A well-crafted policy becomes the single source of truth for everyone, from human moderators to automated systems, ensuring decisions are fair and consistent, even at a massive scale.

Core Elements of a Robust Policy

Vague instructions like "be nice" just don't cut it. A truly effective policy gets specific. It breaks down what’s prohibited into crystal-clear categories and gives real-world examples, leaving very little room for guesswork.

You absolutely need to define categories like these:

- Hate Speech: Get granular. Specify which groups are protected (based on race, ethnicity, religion, sexual orientation, etc.). Show the difference between a legitimate critique and a targeted attack.

- Harassment and Bullying: Lay out exactly what this means on your platform. Think targeted insults, credible threats, and unwanted sexual advances.

- Graphic and Violent Content: Be explicit about your rules on depicting violence, self-harm, or nudity. It's also critical to explain the context for any exceptions, like newsworthy events.

- Misinformation and Disinformation: Detail what kind of false information you won't tolerate, especially content that could incite violence or endanger public health.

A policy is not just a list of prohibitions; it is a platform's public commitment to the health and safety of its community. Its clarity and transparency are the foundation upon which user trust is built and maintained.

This kind of detail is a game-changer for both your users and your moderation team. It turns abstract principles into concrete, enforceable standards, which is a cornerstone of effective user-generated content moderation.

The Non-Negotiable Appeals Process

Let's be honest: even the best moderation systems will make mistakes. That's why a transparent, easy-to-find appeals process isn't just a "nice-to-have"—it's a must. It gives users a way to be heard when they feel a call was wrong, proving that you're committed to fairness.

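This isn't just about good PR; it's increasingly a legal requirement. The EU's Digital Services Act, for instance, requires online platforms to let users contest moderation decisions through an internal complaint-handling system. As regulators put more pressure on platforms, companies are investing heavily in compliance. And with the volume of content still climbing fast, the only workable approach is a smart mix of AI automation and human review. A fair appeals system is central to navigating this new reality.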

Policies as Living Documents

The internet never sits still. New threats, slang, and cultural norms pop up all the time. Your policy can't be a "set it and forget it" document. It has to be a living, breathing guide that evolves with the digital world.

Regular updates are essential to stay ahead of new challenges, such as:

- The explosion of AI-generated deepfakes and synthetic media.

- The clever, coded language bad actors use to fly under the radar.

- Shifting societal standards and new legal goalposts.

The debates around these policies can get pretty intense. Just look at the recent TikTok hearing discussions to see the kinds of political and cultural heat platforms are under. By constantly reviewing and updating your policies, you show your community that you're listening and adapting. It's this proactive mindset that builds a resilient and trustworthy online space.

The Technology Powering Content Detection

To make sense of the overwhelming flood of content, platforms rely on a sophisticated suite of technologies working around the clock. Think of these tools as digital detectives, scanning billions of posts, images, and videos for policy violations before a human ever lays eyes on them. This first line of defense is absolutely critical for managing social media content moderation at scale.

At the heart of this system are two foundational technologies. First up is Natural Language Processing (NLP), the science of teaching computers to understand human language. It goes far beyond just flagging keywords, instead analyzing context, sentiment, and the subtle relationships between words to spot threats, harassment, or hate speech with surprising nuance.
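The gap between keyword flagging and genuine language understanding is easy to see with a toy example. The Python sketch below uses a deliberately naive, hypothetical blocklist (`BLOCKLIST`, `keyword_flag`); it is not how production NLP classifiers work, but it shows the two failure modes that context-aware models are meant to fix: harmless idioms get flagged, while a veiled threat containing no blocklisted word slips through.

```python
import re

# A deliberately naive, hypothetical blocklist used only for illustration.
BLOCKLIST = {"kill", "attack"}


def keyword_flag(text: str) -> bool:
    """Flag text if any blocklisted word appears, ignoring all context."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in BLOCKLIST for word in words)


examples = [
    "I'm going to kill it at my presentation tomorrow",          # harmless idiom: false positive
    "Our team will attack the problem from a new angle",         # harmless: false positive
    "You know what happens to people like you. Watch your back.",  # veiled threat: missed
]
for text in examples:
    print(keyword_flag(text), "|", text)
```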

The second pillar is computer vision, which essentially gives machines the ability to "see" and make sense of visual information. This is what allows platforms to automatically detect things like graphic violence or nudity. By breaking down images and video frames into analyzable data, computer vision systems can classify visual content against a platform’s rulebook in near real-time.

Advanced Detection for Emerging Threats

As online threats evolve, so must the tools we use to fight them. The explosion of AI-generated media, or "deepfakes," has thrown a major wrench into trust and safety efforts. Spotting this kind of synthetic content isn't easy and requires a new breed of detector that can pick up on the subtle digital breadcrumbs AI models leave behind.

These AI image detectors are trained to look for the digital fingerprints and tell-tale signs of artificial creation. They hunt for technical markers that separate a genuine photo from a fake one.

- Lighting Inconsistencies: AI can still stumble when it comes to creating perfectly consistent shadows and realistic reflections across an image.

- Unnatural Textures: Look closely at skin, hair, or backgrounds in some AI images. You might notice a slightly too smooth, almost plastic-like quality that these detection models are trained to catch.

- Digital Artifacts: The process of generating an image can leave behind tiny patterns or artifacts that are invisible to us but stand out clearly to a specialized algorithm.

These tools are quickly becoming essential for journalists, platforms, and fact-checkers working to stop the spread of visual misinformation. For a deeper dive, you might want to check out some of the best AI content detection tools on the market.

This kind of analysis often provides a confidence score, which gives a human moderator or journalist a quick, data-backed assessment to help them decide on an image's authenticity.

The Shift to Proactive Moderation

The industry is making a crucial pivot from a purely reactive model. Instead of just waiting for users to report harmful content, platforms are now using AI to get ahead of the problem and prevent harm before it even happens.

The future of social media content moderation isn't just about faster takedowns; it's about building predictive systems that can identify risky behaviors and defuse toxic interactions before they poison a community. This proactive approach is the key to creating genuinely safer online spaces.

This shift is fueled by a massive investment in AI. The AI portion of the content moderation market was valued at $1.5 billion in 2024 and is projected to reach $6.8 billion by 2033. Take a tool like Modulate's ToxMod, for example: it has already analyzed 160 million hours of voice chat in online games, resulting in 80 million enforcement actions against toxic behavior.
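The two market figures quoted above imply a compound annual growth rate. As a quick worked check, treating 2024 to 2033 as nine years of compounding:

```latex
\text{CAGR} = \left(\frac{6.8}{1.5}\right)^{1/9} - 1 \approx 4.53^{0.111} - 1 \approx 0.183
```

That is roughly 18% a year. This is simple arithmetic on the quoted figures, not an independent forecast.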

It's clear the trend is moving away from simply cleaning up messes and toward preventing them from happening in the first place.

Measuring Moderation Success

So, how do you know if your content moderation is actually working?

If you're just counting how many posts you've taken down, you're looking at the wrong number. That's a vanity metric, and it tells you almost nothing about the health of your platform. Measuring real success is a much more nuanced game, one that balances accuracy, speed, and the very real human cost of the work.

Think of it like judging a goalkeeper. You don't just count their saves. You look at their positioning, how they command their defense, and, most importantly, how few dangerous shots the other team even gets to take. That’s the kind of sophisticated thinking we need to apply here.

Moving Beyond Takedown Numbers

Focusing solely on the volume of removed content can backfire spectacularly. It often pushes teams toward overly aggressive moderation, which ends up punishing legitimate users and chilling free expression. It’s a classic case of hitting the target but missing the point.

Mature trust and safety teams know better. They focus on metrics that get to the heart of quality and impact. The two most important concepts to grasp here are precision and recall.

- Precision: Of all the stuff we took down, how much of it actually broke the rules? A high precision score means your moderators and AI systems are making the right calls, not just swinging a giant hammer.

- Recall: Of all the rule-breaking content out there, what percentage did we actually catch? High recall means your safety nets are working and you aren't letting tons of harmful material slip through the cracks.

The trick is finding the right balance. Make the system too cautious, removing content only when it's nearly certain, and you'll get great precision but your recall will plummet: you'll be accurate on the few things you catch, but you'll miss most of the bad stuff. Make it too aggressive, and your recall will go up, but your precision will suffer as you start taking down legitimate content.
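A small Python sketch makes the trade-off visible. It assumes a classifier that outputs a confidence score per item and a set of ground-truth labels (in practice these would come from human review of a sample); the eight items and their scores are made up for illustration. The rule is "remove if score >= threshold", and lowering the threshold raises recall while lowering precision.

```python
# Toy, made-up data: classifier scores and whether each item truly violates policy.
SCORES = [0.98, 0.91, 0.85, 0.70, 0.65, 0.40, 0.30, 0.10]
LABELS = [True, True, False, True, False, True, False, False]


def precision_recall(scores, labels, threshold):
    """Precision and recall for the rule 'remove if score >= threshold'."""
    tp = fp = fn = 0
    for score, violates in zip(scores, labels):
        removed = score >= threshold
        if removed and violates:
            tp += 1          # correctly removed
        elif removed:
            fp += 1          # legitimate content taken down
        elif violates:
            fn += 1          # violating content missed
    # Both ratios are undefined when their denominator is zero; report 0.0 here.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


for threshold in (0.9, 0.6, 0.2):
    p, r = precision_recall(SCORES, LABELS, threshold)
    print(f"threshold={threshold} precision={p:.2f} recall={r:.2f}")
# threshold=0.9 precision=1.00 recall=0.50
# threshold=0.6 precision=0.60 recall=0.75
# threshold=0.2 precision=0.57 recall=1.00
```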

The real goal isn't just to remove harmful content. It's to shrink its blast radius. Success is when a piece of violating content, even if it briefly exists, is seen by the fewest people possible before it's dealt with.
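One way to put a number on "blast radius" is to count views rather than takedowns. The sketch below assumes you can log how many views each violating item received before enforcement and how long enforcement took; `ViolatingItem`, `exposure_report`, and the sample numbers are hypothetical. The view-based share it computes is similar in spirit to the view-based "prevalence" figures some platforms publish in transparency reports, but it is not any platform's official formula.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class ViolatingItem:
    item_id: str
    views_before_action: int   # views accrued before removal or restriction
    hours_to_action: float     # time from posting to enforcement


def exposure_report(items, total_views):
    """Summarize how far violating content spread before it was dealt with.

    total_views: all content views on the platform over the same period.
    """
    violating_views = sum(item.views_before_action for item in items)
    never_seen = sum(1 for item in items if item.views_before_action == 0)
    return {
        # Share of all views that landed on violating content (lower is better).
        "violating_view_share": violating_views / total_views,
        # Share of violating items actioned before anyone saw them (higher is better).
        "caught_before_any_view": never_seen / len(items),
        "median_hours_to_action": median(item.hours_to_action for item in items),
    }


sample = [
    ViolatingItem("a", 0, 0.1),
    ViolatingItem("b", 120, 2.0),
    ViolatingItem("c", 5_000, 30.0),
    ViolatingItem("d", 0, 0.2),
]
print(exposure_report(sample, total_views=1_000_000))
```

Note how item "c" accounts for most of the violating views even though it is one of four items: a single slow takedown can matter more than many fast ones.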

Navigating the Ethical and Legal Minefield

This isn't just a technical challenge; it's an ethical tightrope walk. Every platform has to constantly weigh the need for safety against a user's right to privacy. That tension gets dialed up to eleven when you have automated systems scanning private messages or analyzing user behavior to get ahead of potential harm.

Then there's the ever-present danger of algorithmic bias. If your AI model is trained on a skewed dataset, it can end up disproportionately targeting marginalized communities. This doesn't just destroy user trust—it can land you in serious legal trouble and spark a public relations nightmare. A huge part of measuring success is running regular audits on these systems to make sure they're enforcing the rules fairly for everyone.
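A basic fairness audit compares error rates across groups rather than overall accuracy. The Python sketch below computes the false positive rate (legitimate posts wrongly removed) for each group in a labeled audit sample, plus the ratio between the worst- and best-treated groups. The group names, the sample, and the choice of false positive rate as the audit metric are assumptions for illustration; real audits typically examine several error metrics and depend on carefully sampled, human-labeled data.

```python
from collections import defaultdict


def false_positive_rates(decisions):
    """Per-group false positive rate: share of non-violating posts that were removed.

    decisions: iterable of (group, removed, violates) tuples from a labeled audit sample.
    """
    wrongly_removed = defaultdict(int)
    non_violating = defaultdict(int)
    for group, removed, violates in decisions:
        if not violates:
            non_violating[group] += 1
            if removed:
                wrongly_removed[group] += 1
    return {group: wrongly_removed[group] / non_violating[group] for group in non_violating}


def disparity_ratio(rates):
    """Worst group's false positive rate divided by the best group's (1.0 means parity)."""
    best, worst = min(rates.values()), max(rates.values())
    if best == 0:
        return 1.0 if worst == 0 else float("inf")
    return worst / best


# Made-up audit sample: (group, was_removed, truly_violates).
AUDIT_SAMPLE = [
    ("dialect_a", False, False), ("dialect_a", True, False),
    ("dialect_a", False, False), ("dialect_a", False, False),
    ("dialect_b", True, False), ("dialect_b", True, False),
    ("dialect_b", False, False), ("dialect_b", False, False),
    ("dialect_a", True, True), ("dialect_b", True, True),
]
rates = false_positive_rates(AUDIT_SAMPLE)
print(rates)                   # {'dialect_a': 0.25, 'dialect_b': 0.5}
print(disparity_ratio(rates))  # 2.0
```

A ratio of 2.0 here would mean legitimate posts from one group are removed twice as often as from the other, exactly the kind of pattern an audit exists to surface.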

The Overlooked Human Metric

There's one metric that’s arguably the most important, yet it’s almost always overlooked: the well-being of the human moderators.

These are the people on the digital front lines, exposed day in and day out to the absolute worst of humanity. Sky-high rates of burnout, PTSD, and turnover aren't just HR problems; they are glaring red flags that your entire moderation system is unsustainable and failing its people.

A truly successful moderation strategy invests heavily in its moderators. We're talking robust wellness programs, readily available mental health support, and fair working conditions. Tracking things like moderator attrition and conducting regular wellness checks are non-negotiable. They are core indicators of an ethical and sustainable trust and safety operation.

Because without healthy, supported moderators, the whole sophisticated system of humans and AI working together simply falls apart.
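Attrition is straightforward to track once you pick a definition. A common one is departures during a period divided by average headcount over that period. The sketch below applies it per moderation team for one quarter and flags teams above an alert threshold; the team names, the figures, and the 10% threshold are hypothetical and should be replaced with your own baseline.

```python
# Hypothetical alert threshold for quarterly attrition; calibrate against your own baseline.
QUARTERLY_ATTRITION_ALERT = 0.10


def attrition_rate(departures: int, headcount_start: int, headcount_end: int) -> float:
    """Departures in the period divided by the average headcount over the period."""
    average_headcount = (headcount_start + headcount_end) / 2
    return departures / average_headcount


def teams_needing_attention(teams: dict) -> list:
    """Return teams whose quarterly attrition exceeds the alert threshold, sorted by name.

    teams maps a team name to (departures, headcount_start, headcount_end).
    """
    return sorted(
        name
        for name, (departures, start, end) in teams.items()
        if attrition_rate(departures, start, end) > QUARTERLY_ATTRITION_ALERT
    )


# Made-up figures for one quarter.
quarter = {
    "violent_content": (12, 60, 52),
    "spam": (2, 40, 40),
    "appeals": (9, 30, 27),
}
for name, figures in quarter.items():
    print(f"{name}: {attrition_rate(*figures):.1%}")
print(teams_needing_attention(quarter))  # ['appeals', 'violent_content']
```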

Best Practices for a Safer Internet

Let's be clear: building a better, safer internet isn't a job for one group alone. It’s a shared mission. While the big platforms hold most of the cards, the rest of us—journalists, fact-checkers, and even regular users—have a real part to play in steering our online communities toward healthier conversations.

Effective social media content moderation isn't just about platforms playing whack-a-mole with bad content. It's about a collective, proactive effort. When everyone adopts smart, clear practices, we can build a more resilient digital world where harm is reduced and genuine dialogue has a chance to thrive.

For Platforms and Trust and Safety Teams

The buck really stops with the platforms hosting all this user-generated content. Their policies, their tools, and where they choose to spend their money set the stage for everyone's online experience. It's a massive responsibility.

Here’s where they need to focus:

- Build a Strong Hybrid System: The future isn’t just AI or just humans—it’s both working together. Platforms must keep investing in a hybrid moderation model where AI handles the low-hanging fruit (obvious violations at scale) while skilled human moderators tackle the tricky, nuanced cases and appeals.

- Take Care of Your Moderators: This is non-negotiable. Platforms must provide mandatory mental health support, pay fairly, and ensure workloads are manageable. A high turnover rate among moderators isn't just a staffing issue; it’s a red flag that the entire system is under stress and failing.

- Stop Hiding Behind Vague Rules: Community guidelines need to be crystal clear, written in plain language, and full of real-world examples. Platforms should also publish regular, detailed transparency reports that show exactly how much content they're taking action on and how accurate their systems are.

A platform’s real commitment to safety isn't in its press releases. It's in the budget allocated to the people and the technology doing the tough, often invisible work of moderation every single day.

For Journalists and Fact-Checkers

In a world drowning in misinformation, journalists and fact-checkers are more than just reporters; they're our digital detectives. Their work is one of the most important defenses we have against harmful narratives before they go viral.

Their key responsibilities boil down to a few critical actions:

- Verify Everything: Never assume a user-submitted photo or video is authentic. Use every tool in the book, from reverse image searches to emerging AI image detectors, to look for signs of manipulation or synthetic media before you hit publish.

- Report Without Amplifying: When you have to cover harmful content, don't give it free publicity. Avoid linking directly to the original source, which only drives traffic and rewards the bad actors. Use descriptions or carefully cropped screenshots instead.

- Show Your Work: Don't just tell your audience what's true—show them how you figured it out. Explaining your verification process helps build trust and, just as importantly, equips your readers with the media literacy skills they need to spot fakes on their own.

For Everyday Users

Don't underestimate your own influence. Every single user has the power to clean up the online spaces they frequent. Think of it as digital citizenship. Small, consistent actions, when done by millions, can create a massive ripple effect.

Here’s how you can be an active part of the solution:

- Make Your Reports Count: When you see something that breaks the rules, don't just hit the "report" button. Take a moment to select the most specific policy violation from the menu. This little step helps route your report to the right team and gives the AI better data to learn from.

- Curate Your Own Experience: You have more control than you think. Use the tools available—blocking, muting, and keyword filters—to create a feed that works for you. Proactively removing toxic accounts from your timeline is a powerful way to protect your own mental health.
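Why does picking a specific reason help? One plausible, simplified model is that the reason a user selects decides which specialist queue receives the report, while vague or missing reasons fall back to a general triage queue. The mapping, the queue names, and the `route_report` function below are hypothetical and not taken from any real platform.

```python
from collections import defaultdict, deque
from typing import Optional

# Hypothetical mapping from the reason a user selects to a specialist review queue.
QUEUE_FOR_REASON = {
    "hate_speech": "hate_and_harassment",
    "harassment": "hate_and_harassment",
    "graphic_violence": "violent_content",
    "misinformation": "misinformation",
    "spam": "spam",
}
FALLBACK_QUEUE = "general_triage"   # catch-all for vague or missing reasons


def route_report(post_id: str, reason: Optional[str], queues) -> str:
    """Append a report to the queue matching its reason and return the queue name."""
    queue_name = QUEUE_FOR_REASON.get(reason, FALLBACK_QUEUE)
    queues[queue_name].append((post_id, reason))
    return queue_name


queues = defaultdict(deque)
print(route_report("post-1", "harassment", queues))  # hate_and_harassment
print(route_report("post-2", "other", queues))       # general_triage
print(route_report("post-3", None, queues))          # general_triage
```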

Below is a quick checklist summarizing these essential actions for each group.

Actionable Best Practices Checklist

This table breaks down the core responsibilities for each stakeholder in creating a healthier online ecosystem.

| Stakeholder | Key Action 1 | Key Action 2 |
|---|---|---|
| Platforms | Invest heavily in hybrid moderation systems (AI + Human). | Prioritize moderator well-being with robust mental health support. |
| Journalists | Rigorously verify all user-generated content before publishing. | Report on harmful content without amplifying its reach. |
| Users | Submit specific, accurate reports on policy-violating content. | Actively use block, mute, and filter tools to curate a safer feed. |

By working in concert, these actions form a multi-layered defense that makes our shared digital spaces safer and more trustworthy for everyone involved.

Common Questions About Content Moderation

If you're trying to get your head around content moderation, you're not alone. It's a complicated field that's always changing. Let's break down some of the most common questions people have.

What’s the Point of Social Media Content Moderation?

At its core, content moderation is all about making online platforms safer and more welcoming. Every platform has its own rulebook—often called community guidelines—and moderation is the process of enforcing those rules.

This means finding and dealing with content that's illegal, harmful, or just plain against the rules. We're talking about things like hate speech, bullying, graphic violence, and the endless flood of misinformation. Good moderation protects users, upholds the platform's reputation, and helps it stay on the right side of the law. It’s the bedrock of user trust.

How Does AI Actually Change Moderation?

Think of AI as the first line of defense. The sheer volume of content uploaded every second is simply too much for any team of humans to handle. AI systems can scan millions of posts, images, and videos in real-time, automatically flagging or removing content that clearly breaks the rules.

This is incredibly effective for obvious violations like spam or graphic images. But AI isn't a silver bullet. The future—and the present, really—is a partnership. AI handles the massive scale of clear-cut cases, which frees up human moderators to focus on the tricky stuff. The gray areas that require nuanced understanding of context, culture, and intent still need a human touch. This hybrid approach gives you both speed and accuracy.

What Are the Biggest Challenges in Content Moderation?

The biggest challenges in content moderation today are threefold: the immense scale of content creation, the increasing sophistication of harmful media like AI-generated fakes, and the difficulty of applying policies consistently across different languages and cultures.

On top of all that, there’s the constant tug-of-war between protecting free speech and preventing real-world harm. And we can't forget the very real mental toll this work takes on human moderators. Supporting their well-being is one of the industry's most critical and ongoing responsibilities.

These hurdles make it clear that there's no single solution. Effective moderation demands a mix of smart technology, fair policies, and dedicated human oversight. It's a job that’s never truly done.

At AI Image Detector, we build sophisticated tools for journalists, fact-checkers, and platforms who need to know if an image is real or AI-generated.

Help stop the spread of fake images. Try our free detector tool and see how it works.