AI-Generated Content Detector Explained

At its core, an AI-generated content detector is a specialized tool built to answer a simple, yet critical, question: was this created by a human or a machine? It sifts through text, images, and other media, hunting for the subtle digital fingerprints that AI models tend to leave behind. Think of it as a verification layer for a world increasingly filled with synthetic content.

The Urgent Need for AI Content Detection

Generative AI tools like ChatGPT, DALL-E, and Midjourney have completely reshaped the content creation game. Suddenly, anyone can generate student essays, news articles, or even photorealistic images in a matter of seconds. While this is a huge leap forward for creativity and efficiency, it also throws a major wrench into the works for anyone who relies on authentic information.

This explosion in AI-generated content has created a massive demand for reliable ways to check it. The market for these detection tools was valued at USD 2.24 billion in 2025 and is projected to reach USD 32.09 billion by 2035, a compound annual growth rate of roughly 30%. This isn't just a niche market; it's a direct response to a fundamental shift in how we create and consume information.

Why Verification Matters More Than Ever

Without a reliable way to tell human and machine-generated content apart, the risks are huge. Professionals in all sorts of fields are now grappling with this problem every single day.

Just think about these real-world situations:

- Educators are trying to uphold academic integrity when students can produce an entire essay from a single prompt.

- Journalists must verify the authenticity of source photos and videos to stop misinformation from spreading like wildfire.

- Businesses are dealing with everything from fake product reviews and doctored financial documents to massive-scale copyright infringement.

An AI-generated content detector is like a digital forensics expert. It meticulously examines the evidence—the words, pixels, and hidden patterns—to figure out where a piece of content really came from. A forensics team looks for physical fingerprints at a crime scene; these tools hunt for the almost invisible "digital fingerprints" left by an AI.

A tool like AI Image Detector presents its analysis as a straightforward verdict, cutting through the noise to give users a clear, actionable result that helps them make a decision.

The real problem isn't just about catching AI-generated work. It's about protecting the value of human creativity, critical thought, and trust itself. When we can no longer be sure what's real, the very foundation of how we communicate starts to crumble.

To truly get a handle on the cat-and-mouse game of AI generation and detection, it helps to understand the broader field of Artificial Intelligence. This isn't just a fleeting trend. It's a permanent part of our information landscape, and that makes detection an essential skill for navigating this new reality.

How AI Content Detectors Uncover Hidden Clues

Think of an AI-generated content detector as a digital forensic expert. Instead of looking for fingerprints at a crime scene, it hunts for the statistical "fingerprints" and structural patterns that AI models leave behind.

These tools don't "read" an article or "see" a picture like we do. They dive deep into the underlying data—analyzing the mathematical relationships between words or the arrangement of pixels—to spot the subtle tells of machine creation.

The game plan for detecting AI text is completely different from spotting AI images. For text, the dead giveaway is predictability. For images, it’s all about imperfection.

Decoding the Predictability of AI Text

Let's try a quick word game. If I say, "The dog chased the...," your brain instantly offers up words like "ball," "cat," or "car." AI language models work in a similar way, just on an unimaginable scale. They repeatedly predict the most probable next word based on the mountains of human text they were trained on.

This predictive nature is what makes AI writing sound so coherent, but it's also its Achilles' heel. This is where two powerful concepts come into play: perplexity and burstiness.

Perplexity is a way to measure how predictable a piece of writing is. Human writing is often delightfully unpredictable—we use creative metaphors, odd phrasing, and quirky sentence structures. AI-generated text, which is optimized to be as probable as possible, usually has very low perplexity. It just feels a little too perfect, a little too safe.

Burstiness describes the natural rhythm of human writing. We tend to write in bursts—a few short, punchy sentences followed by a longer, more descriptive one. AI models, on the other hand, often produce text with eerily uniform sentence lengths, lacking that organic, "bursty" flow.

An AI text detector crunches these numbers. If a text has unnaturally low perplexity and a flat, non-bursty rhythm, there's a good chance a machine wrote it.
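To make the two metrics concrete, here is a minimal Python sketch. It assumes per-token probabilities are already available from some language model (the hard-coded lists below are made-up numbers for illustration), and it uses the coefficient of variation of sentence lengths as one plausible burstiness measure. Real detectors may define and combine both signals differently.

```python
import math
import re
import statistics


def perplexity(token_probs):
    """Perplexity = exp of the average negative log-probability per token."""
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


def burstiness(text):
    """Coefficient of variation of sentence lengths, measured in words.

    An illustrative proxy only: higher values mean a more varied rhythm.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Made-up probabilities a model might assign to each token of two texts.
predictable_probs = [0.5, 0.5, 0.5, 0.5]
surprising_probs = [0.5, 0.05, 0.2, 0.01]

uniform_text = "The cat sat down. The dog ran off. The bird flew away."
varied_text = (
    "Stop. The dog, startled by the noise, bolted across the yard "
    "and vanished. Gone."
)

print("perplexity (predictable):", perplexity(predictable_probs))
print("perplexity (surprising): ", perplexity(surprising_probs))
print("burstiness (uniform):", burstiness(uniform_text))
print("burstiness (varied): ", burstiness(varied_text))
```

The predictable token sequence yields the lower perplexity, and the text with identical sentence lengths yields a burstiness of zero, which is the combination a text detector would treat as suspicious.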

This is a huge deal in the world of academic integrity. The AI detector market, valued at USD 0.58 billion in 2025, is expected to soar to USD 2.06 billion by 2030. This growth is fueled by a staggering 300% increase in AI-generated essays since tools like GPT-4 became mainstream. Many universities now use detectors that lean heavily on perplexity to flag synthetic writing.

Uncovering the Flaws in AI Images

When it comes to images, the detector plays the role of an art forgery expert, scanning for the tiny mistakes and logical impossibilities that AI models often make. Instead of grammar, it’s looking at pixels, light, and texture.

Here are the common clues an AI image detector is programmed to find:

- Digital Artifacts and Noise: AI models sometimes leave behind bizarre, faint patterns or "noise" in areas that should be smooth, like a clear blue sky or a painted wall. These are microscopic glitches that are practically invisible to us but light up like a flare for an algorithm.

- Inconsistent Lighting and Shadows: Getting the physics of light right is incredibly hard. An AI might generate an image where shadows fall in opposite directions or one person is lit from the front while the person next to them is lit from the side. These physical impossibilities are a smoking gun.

- Unnatural Textures and Patterns: Look closely at things with repeating patterns, such as brick walls, wood grain, or even skin. AI can make these textures feel too perfect or oddly repetitive, missing the random imperfections of real life. The classic examples, of course, are people with extra fingers or warped facial features.

When you upload a JPEG or PNG, an AI Image Detector scans it for these exact inconsistencies. The more of these red flags it finds, the more confident it is that the image is AI-generated. You can get a deeper look into this process in our guide on how AI detectors detect AI.

AI Detection Techniques for Text vs Images

While both text and image detectors share the same goal, their methods couldn't be more different. Grasping this distinction is crucial for understanding their strengths and weaknesses.

The table below breaks down the core differences in their approach.

| Detection Focus | AI Text Detection | AI Image Detection |
|---|---|---|
| Primary Signal | Statistical predictability and linguistic patterns. | Visual inconsistencies and digital artifacts. |
| Key Metrics | Perplexity (word choice predictability) and Burstiness (sentence length variation). | Pixel noise, lighting physics, shadow consistency, and texture naturalness. |
| Common Failures | Misinterpreting simple or formulaic human writing as AI. | Overlooking subtle AI edits or being fooled by highly refined AI models. |
| Analogy | A literary critic analyzing an author's style and word choice. | A forensics expert examining a photograph for signs of tampering. |

Ultimately, one is looking for a voice that's too perfect, while the other is looking for a world that's physically impossible. Both are powerful ways to separate human creativity from machine replication.

Interpreting Detection Results and Confidence Scores

When an AI-generated content detector finishes its work, it doesn't just flash a simple "yes" or "no." Instead, you'll get a confidence score, usually a percentage. This number is the key to the whole process, but it's also where most people get tripped up.

Think of it like a weather forecast. If a meteorologist predicts a 90% chance of rain, they aren't promising a downpour. They're just expressing a high degree of confidence based on all the data they have—wind patterns, pressure systems, you name it. It's a calculated probability, not a guarantee.

An AI detector’s score is the exact same idea. A 95% "Likely AI" score means the model found strong, repeated signals that point to machine generation. It's a compelling piece of evidence, but it isn't an ironclad verdict. The tool is there to guide your judgment, not to make the decision for you.

Beyond the Binary Human or AI

The real world is messy. Content is rarely 100% human or 100% AI anymore. A digital artist might use an AI model to create a complex background before painting the main subject themselves. A writer might ask an AI for a rough outline and then rewrite every single word. This is where things get nuanced.

An AI detector is trained to spot very specific fingerprints left behind by machine generation. To get a better feel for what those are, you can read our guide on what AI detectors look for. When a piece of content is a hybrid of human and machine effort, the tool might kick back a middle-of-the-road score because it's seeing a mix of conflicting signals.

This is exactly why context is king. You have to consider where the content came from and how it was likely made before jumping to conclusions.

Navigating Common Failure Modes

No detector is foolproof. Understanding their weak spots is essential for using them responsibly. The two big issues you'll run into are false positives and false negatives. Getting these wrong can have real consequences, from wrongly accusing a student of cheating to letting sophisticated misinformation slip through the cracks.

- False Positives (Type I Error): This is when the detector flags human-made work as AI-generated. It tends to happen with text that is very formal, structured, or uses simple language. With images, a heavily edited photograph or certain digital art styles can sometimes trigger a false positive.

- False Negatives (Type II Error): This is the opposite problem: the tool misses AI-generated content and calls it human. This is more common with output from the very latest AI models or when a savvy user has carefully edited the AI content to scrub away the most obvious digital artifacts.
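Both error types can be measured on a test set where the true origin of each item is known. The sketch below is illustrative only: the sample scores are invented, and `threshold` is a hypothetical cut-off at or above which a score counts as an "AI" verdict.

```python
def error_rates(samples, threshold=0.5):
    """Return (false_positive_rate, false_negative_rate).

    samples: list of (score, is_ai) pairs, where score is the detector's
    AI probability in [0, 1] and is_ai is the known ground truth.
    """
    fp = sum(1 for score, is_ai in samples if score >= threshold and not is_ai)
    fn = sum(1 for score, is_ai in samples if score < threshold and is_ai)
    humans = sum(1 for _, is_ai in samples if not is_ai)
    ais = sum(1 for _, is_ai in samples if is_ai)
    fpr = fp / humans if humans else 0.0
    fnr = fn / ais if ais else 0.0
    return fpr, fnr


# Invented evaluation data: (detector score, was it really AI?)
samples = [
    (0.97, True), (0.88, True), (0.40, True),
    (0.10, False), (0.72, False), (0.05, False), (0.20, False),
]

print("threshold 0.5:", error_rates(samples, threshold=0.5))
print("threshold 0.9:", error_rates(samples, threshold=0.9))
```

Raising the threshold in this toy data removes the false positive but lets more AI items through, which is the trade-off anyone setting a cut-off has to weigh.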

The most important thing to remember is that a confidence score is just one piece of the puzzle. It’s powerful evidence, but it needs to be combined with your own critical eye and understanding of the situation to see the full picture.

A Practical Checklist for Making a Decision

When a detector gives you a result—especially one that feels off or lands in a gray area—don’t just accept it. Run through this quick checklist to make a more balanced and fair assessment.

- Assess the Score Critically: Is the score overwhelmingly high, like 99%? Or is it more ambiguous, like 65%? A sky-high score is a much stronger signal than one sitting on the fence.

- Consider the Content's Context: Where did this come from? Is it a student’s term paper, a news photo from a protest, or a new profile picture on a dating app? The stakes and expectations are completely different in each case.

- Look for Other Red Flags: Beyond the score, does anything else seem off? Does the text have a weird, lifeless tone or make strange factual claims? Does the image have the classic AI tells, like mangled hands, bizarre shadows, or plasticky skin textures?

- Evaluate the Creator's Plausible Workflow: Is it reasonable to think this person would use AI? A graphic designer experimenting with concept art is a world away from a photojournalist documenting a historical event.

By combining the data-driven insights from an AI-generated content detector with your own human expertise, you can move past simple detection and get to genuine verification.

Real-World Applications for AI Detection Tools

Theory and confidence scores are interesting, but the real value of an AI-generated content detector clicks into place when it solves a genuine, high-stakes problem. These aren't just gadgets for tech geeks; they're quickly becoming must-have tools for anyone whose work depends on authenticity. From newsrooms to classrooms, detection tech offers a critical line of defense against synthetic media.

Let’s walk through a few scenarios where these detectors go from a neat idea to an essential asset, protecting integrity and preventing some pretty serious fallout.

Safeguarding Journalistic Integrity

Picture this: a journalist is up against a tight deadline when an anonymous source sends over a dramatic photo. It supposedly shows the aftermath of a political protest and looks like perfect front-page material. A few years back, the biggest worry would have been a bit of Photoshop.

Today, the threat is a whole lot more sophisticated. That entire scene could have been spun up by an AI model in seconds.

Running the image through a tool like AI Image Detector gives the reporter an immediate first-pass analysis. If it comes back with a 98% "Likely AI-Generated" score, that’s a massive red flag. It’s the signal to stop and dig deeper. The tool might have caught subtle giveaways the human eye would miss under pressure, like inconsistent shadows across the crowd or weird, repeating textures in the background buildings.

Without that quick check, publishing the image could lead to a major retraction, torpedo the news outlet's credibility, and spread dangerous misinformation. The detector doesn't replace journalistic ethics—it backs them up with a powerful verification step.

Upholding Academic Honesty

An art history professor is grading final papers and stumbles upon a submission that's just a little too perfect. It's a stunningly detailed analysis of a Renaissance painting, complete with a photorealistic digital recreation of a "lost" preparatory sketch. The student's work has been average all semester, so this sudden leap in quality is suspicious.

The professor can use a detector on both the text and the image. The written analysis might get flagged for having extremely low perplexity—a classic sign of AI writing. But the real giveaway is the sketch. When analyzed, it reveals clear digital artifacts and impossible lighting physics that just don't happen in a real charcoal drawing.

This gives the educator solid evidence to start a conversation with the student about academic integrity. If they didn't check, AI-generated work would get the A, devaluing the effort of honest students and completely missing the point of the assignment: to develop critical thinking and research skills.

Protecting Online Communities

The trust and safety teams at social media platforms and online marketplaces are in a constant battle against fake accounts. These profiles are used for everything from scams and harassment to coordinated influence campaigns. And a key part of any fake profile is a realistic-looking—but entirely nonexistent—person in the profile picture.

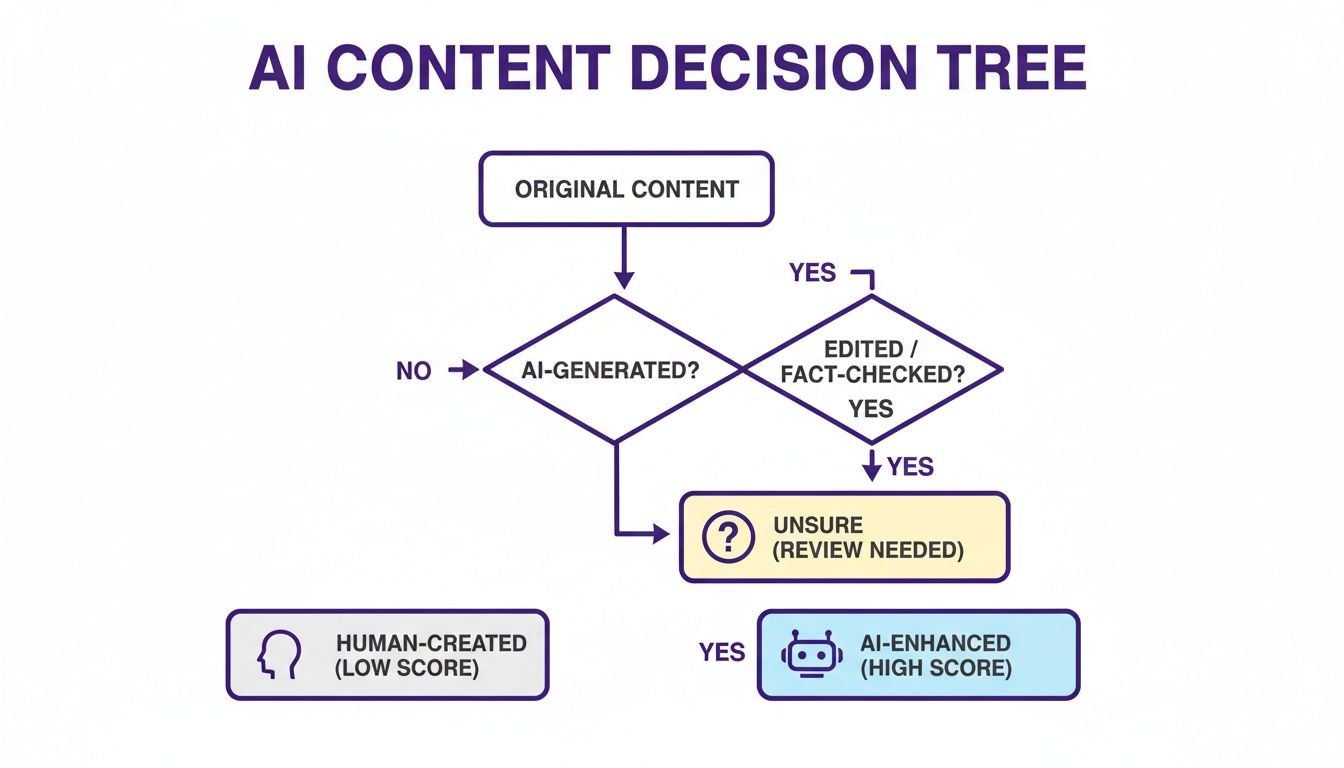

An AI-generated content detector can be integrated via an API to automatically screen new profile pictures as they're uploaded. A simplified decision-making workflow might look something like this.

This infographic lays out a basic decision tree for handling content based on what an AI detector finds.

As the flowchart shows, a high AI score can automatically flag an account for human review, helping teams focus their attention where it's needed most and act fast.

By flagging accounts with AI-generated profile pictures at scale, a platform can stop bad actors before they cause real harm. The alternative is playing catch-up, cleaning up the mess after the scams have already played out.

Deepfakes and synthetic images pose a serious threat to media integrity, making detectors essential for verifying authenticity in a world flooded with misinformation. The market for these tools reflects this urgency; valued at USD 19.98 billion in 2025, the global content detection market is projected to skyrocket to over USD 68.22 billion by 2034. This growth is fueled by things like the 550% surge in deepfake incidents since 2023, which has swamped platforms with forged media. You can learn more about the trends in the content detection market in recent industry reports.

Choosing the Right Detector for Privacy and Scale

Picking an AI-generated content detector isn't as simple as just looking for the highest accuracy score. Once you start using these tools regularly, you quickly run into bigger questions about data privacy and the ability to handle a large volume of content. The reality is, not all detectors are created equal, and your choice can seriously impact your data security.

Think about it. If you're a journalist verifying a sensitive photo from a source, or a company scanning proprietary documents, the last thing you want is for that data to end up on some third-party server. It could be used to train their models or, worse, get exposed in a data breach. This is why a "privacy-first" mindset is so important.

Why Data Privacy in Detection Matters

A privacy-first detector is built on a simple promise: it analyzes your content without keeping it. You upload a file, the tool does its job in real-time, gives you a result, and then your file is immediately deleted from its servers. This ephemeral process ensures your intellectual property, sensitive information, and personal data stay firmly in your control.

Be wary of free tools with vague or non-existent data policies. Many of these services operate by using your submissions to train their own AI, essentially turning your private content into their training data.

For individuals and businesses alike, the core principle should be that the tool works for you; you are not there to supply it with free training data. Choosing a detector that doesn't store your files is a critical step in maintaining digital sovereignty and security.

This is non-negotiable for professionals handling confidential material. The risk of a leak, no matter how small, is just too high a price to pay for a "free" analysis.

Scaling Detection with an API

For one-off checks, a simple drag-and-drop website is fine. But what happens when you need to screen thousands of images or documents every day? A social media platform, for instance, can't have someone manually check every single profile picture for AI generation. This is where an Application Programming Interface (API) becomes essential.

Think of an API as a secure, automated connection between two different pieces of software. Instead of a person physically uploading a file to a website, your own application can send the file directly to the detector's system and get the results back almost instantly. It’s like building the detector’s brain right into your own workflow.

This kind of automation is the only way to handle detection at a massive scale, protecting platforms and communities from a flood of synthetic content in real time.
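As a rough illustration of what "sending the file directly to the detector's system" can look like, the sketch below builds an HTTP request using only Python's standard library. Everything specific here is an assumption: the endpoint URL, the bearer-token header, and the `image_base64` field name are hypothetical placeholders, and any real detector's API documentation will define its own.

```python
import base64
import json
import urllib.request

# Hypothetical endpoint and key, for illustration only.
DETECTOR_URL = "https://detector.example.com/v1/analyze"
API_KEY = "YOUR_API_KEY"


def build_detection_request(image_bytes):
    """Build (but do not send) a POST request carrying one image."""
    payload = json.dumps({
        # Assumed field name; binary data is base64-encoded to fit in JSON.
        "image_base64": base64.b64encode(image_bytes).decode("ascii"),
    }).encode("utf-8")
    return urllib.request.Request(
        DETECTOR_URL,
        data=payload,
        method="POST",
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
    )


request = build_detection_request(b"\x89PNG placeholder bytes")
print(request.get_method(), request.full_url)
# Sending it would look like: urllib.request.urlopen(request, timeout=10)
```

The request is only constructed, not sent, so the sketch runs without network access. In a real integration the response body would carry the verdict and confidence score.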

An Example Workflow: API Integration

Let’s walk through a real-world scenario. A social network wants to automatically screen every new profile picture to combat the rise of fake accounts.

Here’s a simplified look at how they could integrate an AI image detector's API:

- User Uploads an Image: A new user signs up and uploads their profile picture to the social network.

- API Call is Triggered: The platform's backend automatically sends a copy of that image to the detector's API. This all happens behind the scenes in a fraction of a second.

- Detector Analyzes the Image: The detection model scans the image for tell-tale signs of AI and returns a clear verdict and a confidence score (e.g., "AI Probability: 97%").

- Platform Takes Action: The social network’s system instantly receives this result. Based on rules they've already set, it can take automated action:

  - High AI Score (95% or higher): The account is automatically flagged for a manual review by a human trust and safety agent.

  - Moderate Score (60% up to, but not including, 95%): The account might be placed in a temporary queue with limited permissions until it’s cleared.

  - Low Score (below 60%): The profile picture is approved, and the user’s account is activated immediately.

By using an API, the platform can screen 100% of new images efficiently. This frees up its human moderators to focus their time on the nuanced, complex cases where their judgment is truly needed. It’s a smart way to automate for safety and authenticity.
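The rule-based step in this workflow can be sketched as a small function. The cut-offs of 95 and 60 come from the example rules above; the action names are hypothetical labels, and treating a score of exactly 95 as high is an illustrative choice.

```python
def route_profile_image(ai_probability):
    """Map a detector score (0-100) to a moderation action.

    The returned strings are hypothetical labels, not part of any
    real platform's API.
    """
    if not 0 <= ai_probability <= 100:
        raise ValueError("ai_probability must be between 0 and 100")
    if ai_probability >= 95:
        return "flag_for_human_review"
    if ai_probability >= 60:
        return "limited_queue"
    return "approve"


for score in (97, 75, 12):
    print(score, route_profile_image(score))
```

Keeping the thresholds in one place like this makes them easy to tune as a team learns how often each band produces false alarms.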

The Future of the AI Detection Arms Race

The dynamic between AI content creation and detection is a classic cat-and-mouse game. As generative models get better and better, their output becomes almost indistinguishable from human work. In turn, detection tools have to get smarter, kicking off a cycle of innovation on both sides. This back-and-forth isn't a problem—it’s a sign that the whole field is growing up.

Generative AI is moving past just cranking out basic text and images. These models are starting to master things like nuance, emotional tone, and complex reasoning, which makes their output feel incredibly authentic. Some developers are even trying to build an undetectable AI writer that intentionally erases the very statistical patterns that today's detectors are built to find.

Adapting to a More Sophisticated Reality

To stay relevant, the next generation of any leading AI-generated content detector has to evolve beyond current methods. The future is all about more advanced techniques that can catch the subtle, almost imperceptible tells of a machine's handiwork.

Two areas, in particular, are leading the charge:

- Digital Watermarking: This is like embedding a hidden signature directly into AI content the moment it's made. The signal is invisible to people but can be read by a detector, which can then confirm the content's synthetic origin with far more certainty than statistical analysis alone, provided the watermark survives later editing.

- Multimodal Analysis: Instead of looking at a piece of text or an image on its own, future detectors will analyze content across different formats at once. Imagine a tool that checks the claims made in a video against the visual cues and the audio track, hunting for tiny inconsistencies that give away its AI roots.
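To give a feel for the watermarking idea, here is a deliberately simple toy: it hides a short bit pattern in the least significant bit of each pixel value. This is a classic teaching example, not how production watermarks work; schemes used by real generators are designed to survive compression and cropping, which this one would not. The pixel values and the signature are made up.

```python
def embed_watermark(pixels, bits):
    """Hide `bits` in the least significant bit of the first len(bits) pixels."""
    if len(bits) > len(pixels):
        raise ValueError("not enough pixels to hold the watermark")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the watermark bit.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def read_watermark(pixels, length):
    """Recover the first `length` hidden bits."""
    return [value & 1 for value in pixels[:length]]


# Toy 8-bit grayscale values and a made-up 4-bit signature.
original = [200, 201, 57, 58, 120, 33]
signature = [1, 0, 1, 1]

marked = embed_watermark(original, signature)
print(marked)
print(read_watermark(marked, len(signature)) == signature)
```

Each pixel changes by at most one brightness level, which is why the mark is invisible to a viewer yet trivially readable by software that knows where to look.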

The Human and Machine Partnership

Let's clear up a common myth: no detector will ever be 100% accurate, and that's okay. The point of these tools isn't to replace human judgment but to supercharge it. Think of them as expert assistants, giving you data-driven evidence to help you make a more confident call. An 85% AI score isn't a final verdict; it's a strong signal that a human expert should probably take a closer look.

This technology isn't just a temporary fix for a passing trend. As AI gets woven into everything we do, the need for tools that verify authenticity is only going to grow. Detection is becoming a permanent and essential part of how we navigate information.

Looking forward, this "arms race" isn't slowing down. Generative models will keep churning out more convincing fakes, and detectors will keep finding clever new ways to spot them. It's this constant competition that ensures that even as the line between human and machine gets blurry, we'll always have the tools to help us find it.

Got Questions? We've Got Answers

Still curious about how AI content detectors work in the real world? Let's tackle some of the most common questions that come up.

How Accurate Are AI Content Detectors, Really?

This is the big question, and the honest answer is: it depends. The best tools out there can be incredibly accurate, often hitting over 95% precision on content that's purely human or purely AI-generated. But no detector is foolproof.

Where things get a bit fuzzy is with hybrid content—think a human-written article with an AI-generated paragraph dropped in, or a heavily edited piece. The latest AI models are also getting smarter at mimicking human patterns. The key is to treat the detector's score as a powerful piece of evidence, not the final word.

Can a Detector Spot AI Edits on a Real Photograph?

Yes, this is exactly what many modern AI image detectors are built for. They're not just looking for images created from scratch by AI. Instead, they hunt for the subtle, digital fingerprints left behind by AI editing tools.

Think of it like a forensic analysis. The tool scans for things like unnatural textures, weird blending between elements, or lighting that just doesn't quite make sense. If it finds these clues on a real photo, you might get a mixed result or a lower confidence score. This is incredibly useful, because it suggests the image wasn't fabricated from scratch but has been manipulated by AI.

A key takeaway is that these tools don't just give a binary "AI" or "Human" verdict. They often provide a spectrum of probability, helping you understand the nuance in how an image or text was created.

Are AI Content Detectors Free to Use?

Many are, especially for casual use. For example, our own AI Image Detector offers a free version that's perfect for checking a few images here and there. Most free tools have daily limits on the number of checks you can run or the size of the files you can upload.

For businesses and developers who need to check a high volume of content, paid plans are the way to go. These almost always include API access, which lets you plug the detection technology right into your own apps or workflows, along with higher usage caps and faster processing.

Ready to put it to the test? The AI Image Detector offers a free, privacy-first tool to check for AI generation in seconds. Try it for yourself at aiimagedetector.com.