How to Detect AI Image Content Like an Expert

To tell the difference between a real photo and an AI-generated one, you need a mix of smart tools and a sharp eye. The best approach combines automated detection with manual inspection, looking for tell-tale signs like odd textures, messed-up hands, and weird lighting, all common giveaways of AI. This skill is becoming essential as AI images pop up everywhere online.

The Growing Need To Detect AI Image Content

Not too long ago, faking a believable image took serious Photoshop skills and a lot of time. Now, anyone can conjure up incredibly realistic visuals in seconds with tools like Midjourney, DALL-E, and Stable Diffusion. While this is a game-changer for creativity, it also makes it much harder to trust what we see. The line between real and fake has never been so blurry.

Because of this, spotting AI-generated images isn't just a job for tech experts anymore. It’s a core part of being digitally literate for all of us. The ripple effects are huge, touching on almost every part of how we interact online.

Real-World Implications of AI Imagery

The explosion of AI imagery is fueled by powerful models trained on massive amounts of data. A look into data scraping practices for AI gives you a sense of how these models learn. As they get better, the need to verify what we see becomes urgent, especially in high-stakes situations.

Think about these real-world scenarios where spotting an AI fake is critical:

- Misinformation Campaigns: Fabricated images of political events, protests, or disasters can be weaponized to manipulate public opinion or spark chaos. A single, convincing fake photo can go viral before anyone has a chance to fact-check it.

- Social Media Authenticity: From fake influencer accounts pushing crypto scams to complex catfishing plots, AI-generated profile pictures make it frighteningly easy to build a believable—but completely false—online identity.

- Journalism and Media Ethics: News organizations have to be absolutely sure the images they publish are authentic to protect their credibility. Publishing just one unverified AI photo as real news can destroy a publication's reputation.

- Art and Copyright: The art community is in the middle of a heated debate about originality and ownership. Being able to separate human-made art from AI-generated pieces is vital for art contests, gallery exhibitions, and copyright law.

The ability to question and verify visual media is the first line of defense against digital deception. It’s about cultivating a healthy skepticism and having the tools to find answers.

Why Verification Matters More Than Ever

The game has changed. We're not just looking for obvious mistakes like a person with six fingers anymore. Today's AI can create images that are almost perfect at first glance, making a trained eye and reliable tools more important than ever. If you're looking to build up this skill, learning how to check if images are authentic is a great starting point: https://www.aiimagedetector.com/blog/images-for-authenticity

The point isn't to villainize AI. It's about creating a smarter, more discerning public. When you know how to spot a synthetic image, you can engage with online content more responsibly and protect yourself and others from being misled. The methods we’ll cover will give you the practical skills you need to navigate this new visual landscape with confidence.

While having a good eye for the strange quirks of AI art is a fantastic skill, automated tools are your first and fastest line of defense. These platforms are built to scan an image for the subtle digital fingerprints that AI models leave behind, giving you a probability score in seconds. If you have to sift through a ton of images, they're a lifesaver.

But you have to use them with the right mindset. No tool is perfect. Think of an AI image detector less like a final judge and more like an expert consultant. It offers a data-driven opinion that can steer your own manual review, pointing you toward suspicious areas you might have overlooked.

Popular AI Image Detection Platforms

The market for these detectors is blowing up. The global market for AI-based image analysis was valued at around USD 10.79 billion in 2024 and is expected to keep climbing. This has led to some really solid tools hitting the market.

Here are a few of the most recognized names you'll run into:

- Hive AI Detection: This is a big one. Hive offers a whole suite of tools for detecting both AI text and images, and it's trusted by major companies for moderating content at a massive scale.

- Illuminarty: I really like this one for its detail. It gives you a clear percentage score and even tries to guess which AI model made the image, like Midjourney or Stable Diffusion. This is super helpful if you're doing a more technical deep-dive.

- AI or Not: Simple, clean, and fast. It’s a great choice for a quick check when you don't need a full-blown analysis.

Since each tool runs on slightly different algorithms, I often run a really suspicious image through two or three of them. If two out of three flag an image as AI-generated, my confidence in that call goes way up.
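The two-out-of-three habit above can be sketched in a few lines of Python. This is only an illustration: the detector names, the scores, and the 0.5 threshold are made-up assumptions, and you would type in whatever probability each tool actually showed you rather than calling any real API.

```python
def majority_vote(scores, threshold=0.5):
    """Return (flagged_detectors, verdict) for a dict of detector scores.

    scores maps a detector name to its "AI-generated" probability (0.0 to 1.0).
    The threshold is an assumed cut-off, not a value any tool prescribes.
    """
    if not scores:
        raise ValueError("need at least one detector score")
    flagged = [name for name, score in scores.items() if score >= threshold]
    # A strict majority of detectors must flag the image.
    verdict = len(flagged) * 2 > len(scores)
    return flagged, verdict


# Hypothetical scores copied by hand from three different tools.
scores = {"detector_a": 0.91, "detector_b": 0.64, "detector_c": 0.32}
flagged, verdict = majority_vote(scores)
print(f"Flagged by {len(flagged)} of {len(scores)} tools; majority says AI: {verdict}")
```

A majority vote is deliberately crude. It raises or lowers your confidence, but it still does not replace the manual checks.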

A platform like Hive returns a clean, no-nonsense result, designed to give you a quick, easy-to-read confidence score.

Understanding the Results and Their Limits

When you get a result back, it’s almost never a simple "yes" or "no." You’ll usually see a probability score, maybe a percentage or a label like "Likely AI-Generated."

Key Takeaway: An AI detector's score is just that—a score based on probability, not an absolute fact. A high "AI-generated" score means the image has a ton of patterns linked to known AI models, but it can’t completely rule out a heavily manipulated real photograph.

It’s also crucial to remember that this is a constant cat-and-mouse game. As image generators get better, they get better at hiding the obvious tells that older detectors look for. This means a tool’s accuracy can dip when a new, more advanced AI model is released. For a closer look at the tech behind this, our guide to AI image identification breaks it down further.

Honestly, a hybrid approach works best. Use an automated tool for the initial quick scan. If it flags something, that's your cue to switch gears and apply the manual inspection techniques we'll cover next with a much more critical eye.

Choosing the Right Tool for Your Needs

The best detector really depends on what you're doing. Are you a journalist on a tight deadline, a community moderator, or just someone curious about a weird picture on social media?

To make it easier, here’s a quick rundown of what each leading tool does best.

Comparison of Top AI Image Detection Tools

This table compares some of the top platforms to help you find the right fit for your specific verification needs.

| Tool Name | Key Feature | Reported Accuracy | Pricing Model | Best For |
|---|---|---|---|---|
| Hive AI Detection | Comprehensive AI content moderation suite | High, used by major platforms | Enterprise/API-based | Businesses needing large-scale, automated content screening. |
| Illuminarty | Identifies the potential source AI model | Generally reliable | Freemium with paid tiers | Investigators and researchers wanting more technical detail. |
| AI or Not | Simple, fast, and easy-to-use interface | Good for clear-cut cases | Free | Quick, casual checks by social media users or educators. |

Ultimately, the best way to get comfortable with these tools is to just play with them. Grab a photo you know is real and another you know was created with an AI like DALL-E. Run them both through a few platforms and see what happens. This hands-on trial-and-error is the fastest way to learn how they work and build them into your own process.
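If you want to keep score during that kind of trial, a tiny script helps. The sketch below assumes you have noted each tool's probability for images whose origin you already know. The detector names, the scores, and the 0.5 threshold are all invented for illustration.

```python
def detector_accuracy(results, threshold=0.5):
    """Fraction of known samples a detector classified correctly.

    results is a list of (score, is_ai) pairs: the tool's "AI-generated"
    probability and the ground truth you already know for that image.
    """
    if not results:
        raise ValueError("need at least one known sample")
    correct = sum((score >= threshold) == is_ai for score, is_ai in results)
    return correct / len(results)


# Hypothetical trial: two known AI images and two known real photos per tool.
trial = {
    "detector_a": [(0.95, True), (0.10, False), (0.70, True), (0.55, False)],
    "detector_b": [(0.80, True), (0.20, False), (0.40, True), (0.30, False)],
}
for name, results in trial.items():
    print(f"{name}: {detector_accuracy(results):.0%} correct on known samples")
```

Four images per tool is far too few to rank detectors, but even a small trial shows you where each one tends to be wrong.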

Mastering the Manual Eye Test for AI Images

While automated tools give you a great head start, they're not infallible. The best AI models are getting shockingly good at faking reality, and sometimes they can slip right past the algorithms designed to flag them. This is where your own critical eye becomes your most reliable tool.

Learning to manually inspect an image for AI artifacts is a skill. It’s about training your brain to spot the subtle, tell-tale inconsistencies that AI models almost always leave behind. Think of yourself as a digital detective. The goal is to build your confidence so you can question any piece of visual content that crosses your screen.

The Uncanny Valley of AI Hands and Fingers

For a long time, the most infamous giveaway in AI imagery has been human hands. For a whole host of complex reasons, AI models have always struggled to get hands right. It’s often the first and best place to start your investigation.

When you think an image might be AI-generated, zoom right in on the hands and look for the classic mistakes:

- Count the fingers. This is the big one. Seeing a person with six fingers (or sometimes only four) is a dead giveaway. It’s a bizarre mistake a human artist would never make, but one that an algorithm can easily miss.

- Check the anatomy. Do the fingers bend in a natural way? You'll often see fingers that look rubbery, are unnaturally long, or are twisted at impossible angles. Joints might be missing, or the proportions just feel off.

- Look at interactions with objects. Pay attention to how a hand holds a cup or rests on a table. AI-generated hands often seem to merge with objects or float just above the surface they're supposed to be touching.

I always think of hands in AI images as a stress test for the algorithm. Their complex structure is a minefield of potential errors, making them a goldmine for anyone looking for the truth.

Decoding Distorted and Nonsensical Text

Another glaring weakness for most image generators is text. While an AI can write an essay, it often fails spectacularly when asked to render simple words inside a picture. If you spot any text in the background—on a sign, a t-shirt, or a book cover—it's a critical clue.

AI-generated text usually looks like a garbled, alien script. Letters are warped, mashed together, or look like a language that doesn't actually exist. For example, a street sign in a supposedly American city might display characters that look more like Cyrillic or some bizarre fusion of different alphabets. Real text, even if it's blurry, still follows the rules of a real language. AI text often breaks those rules completely.

Spotting Asymmetry and Bizarre Blending

AI models are built on recognizing patterns, but they can get tripped up on maintaining perfect symmetry and logical consistency, especially with the little details. This is where you can catch them slipping.

- Mismatched Accessories: Look closely at things like earrings. It’s common to see an AI portrait where a person has a large, ornate earring in one ear and a completely different one—or none at all—in the other.

- Unusual Patterns: Check out patterned clothing or wallpaper. You might find that a repeating design has strange breaks, morphs into something else, or doesn't wrap realistically around a person or object.

- Strange Background Blending: Examine where different background elements meet. You might notice a tree branch that seems to unnaturally merge into a building or a fence post that dissolves into the grass. These "blending artifacts" happen because the AI doesn’t understand that objects are physically separate.

The AI image recognition market is exploding, projected to hit USD 9.79 billion by 2030. This massive growth just shows how sophisticated both the generation and detection sides are becoming, making your manual skills more important than ever.

By combining these manual checks, you’ll develop a solid process for spotting fakes. For a deeper dive, our guide on how to check if a photo is real offers even more strategies. The key is to approach every image with a curious and critical mindset, questioning the details until you’re sure of what you’re seeing.

Looking for Clues Hidden in Plain Sight

When you can't spot the obvious giveaways, like mangled hands or jumbled text, it's time to dig a little deeper. The best AI models have gotten pretty good at avoiding those classic blunders, but they still leave behind subtle, almost hidden, fingerprints. This is where you have to train your eye to see what others miss, and it's what separates a casual glance from a real analysis of a suspected AI image.

This isn't about running through a simple checklist. It's about developing a gut feeling for what makes an image feel off—maybe it's a little too perfect or something just doesn't add up logically. These advanced clues are usually woven into the very fabric of the image itself, in the textures, the lighting, and the reflections.

If you really want to get good at this manual eye test, it helps to know how these images are made in the first place. Understanding AI rendering processes gives you context for why certain strange artifacts show up, making them much easier to catch.

The Unmistakable AI Sheen

One of the most common yet subtle giveaways is something I like to call the "AI sheen." It’s this unnaturally smooth, almost waxy or plastic-like quality you’ll see on surfaces, especially on people's skin. Real human skin is full of imperfections—pores, tiny hairs, fine lines, and slight color variations. Even the most sophisticated AI models have a hard time getting that right.

What they often spit out instead is skin that looks like it’s been airbrushed into oblivion. It has no real texture or depth; it just looks flat and flawless in a way that isn't human. This sheen isn’t just for people, either. You can spot it on other surfaces like wood, metal, or fabric, where the texture feels just a bit too uniform and synthetic.

Putting Light and Shadows Under the Microscope

Lighting follows the laws of physics, a concept AI models don't truly grasp. They're great at copying patterns from their training data, but they often mess up when trying to build a believable and consistent lighting environment across a whole scene. This is a goldmine for spotting fakes.

When you're looking at an image, ask yourself a few key questions:

- Where is the light coming from? If you see bright highlights on one side of an object, but the shadows are falling as if the light is coming from the opposite direction, that’s a huge red flag.

- Do the shadows look right? Look for shadows that are way too soft, weirdly sharp, or cast at impossible angles. A shadow should always match the object and the light source.

- Are reflections believable? Shiny things like glass, puddles, or mirrors should reflect what's around them. AI often fumbles here, creating reflections that are blurry, distorted, or just don't match the scene at all.

I’ve caught quite a few fakes by looking at the eyes in a portrait. You’ll see a perfect reflection of a window or a professional ring light, but then you look at the rest of the scene and that light source is nowhere to be found. It’s a small detail, but it’s damning evidence.

Examining Patterns and Textures

Repeating patterns are another weak spot for AI. It understands the idea of a brick wall or a knitted sweater, but it struggles to keep that pattern consistent and logical across a large area.

Zoom in and hunt for these kinds of flaws:

- Broken Patterns: You might see a perfect brick pattern that suddenly melts into a weird, blurry mess for no apparent reason, as if the model lost track of the pattern partway through.

- Unnatural Weaves: On clothes or carpets, the weave might look fine in one spot but then get twisted and distorted as it wraps around a person or another object.

- Impossible Geometry: Look closely at architectural details, tile floors, or complex wallpaper. You’ll often find geometric shapes that just couldn't exist in reality, with lines that don't connect or angles that defy physics.

Spotting these advanced clues takes patience. You're not looking for a single knockout punch anymore; you're building a case from a collection of small, subtle inconsistencies. By focusing on how texture, light, and pattern all work together (or don't), you'll develop the instinct to spot even the most polished AI fakes.

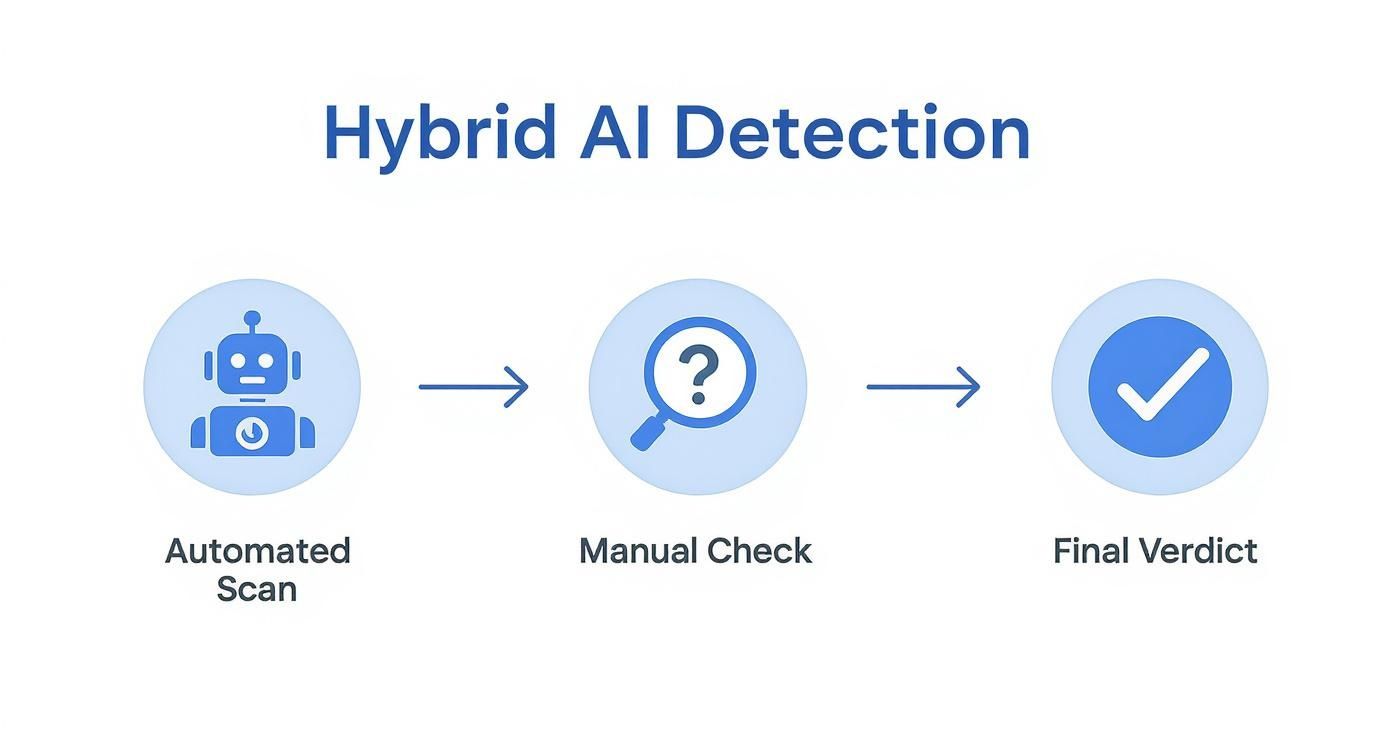

Putting It All Together: A Hybrid Approach

Relying on just one method—either an automated tool or your own eyes—leaves too much to chance. The most effective way I've found to spot an AI image is to combine the speed of technology with the irreplaceable nuance of human observation. This hybrid approach turns two good methods into one great strategy, giving you the best shot at making the right call.

The workflow itself is simple. I always start with a quick scan using a reliable AI detector. This gives me an immediate, data-driven baseline. If the tool flags the image, my job is to find the proof. If it says it's likely human, my job is to challenge that assumption and look for the subtle giveaways the algorithm might have missed.
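As a rough sketch, that first decision can be written as a small Python function. The 0.5 threshold and the wording of the two outcomes are my own assumptions. Real detectors report their results in different ways, so treat this as a picture of the logic rather than anything a specific tool does.

```python
def next_step(ai_probability, threshold=0.5):
    """Decide how to approach the manual review after the automated scan.

    ai_probability is the detector's "AI-generated" score between 0.0 and 1.0.
    Both branches lead to a manual check; only the mindset changes.
    """
    if not 0.0 <= ai_probability <= 1.0:
        raise ValueError("ai_probability must be between 0.0 and 1.0")
    if ai_probability >= threshold:
        return "flagged: look for concrete proof (hands, text, shadows)"
    return "not flagged: challenge the result and hunt for subtle giveaways"


print(next_step(0.75))
print(next_step(0.20))
```

Notice that neither branch ends the investigation. The score only decides which question you ask next.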

Kicking Off Your Investigation

Let's walk through a realistic scenario. Imagine a controversial image of a political figure at a supposed protest starts spreading like wildfire online. The first step isn't to spend an hour poring over every pixel. Instead, you run it through a tool like our own AI Image Detector. In less than ten seconds, you get a probability score.

Say it comes back with a 75% likelihood of being AI-generated.

That result isn't the end of the investigation; it's the beginning. It tells you exactly where to focus your energy. Now, instead of scrutinizing the entire image with equal suspicion, you can zoom in on the classic AI weak spots we've covered—hands, background text, and the consistency of shadows.

In this workflow, the automated scan always comes before the manual check. It's all about efficiency and accuracy.

The key takeaway here is that the tool’s output is a guide, not a final judgment. It just points your manual inspection toward the most suspicious areas.

From Automated Score to Human Verdict

With that 75% score in hand, you start your own visual check of the protest image. I find it helps to ignore the main subject for a moment and zoom in on the crowd. Right away, you spot it: a protest sign in the background has jumbled, nonsensical letters that look like a made-up language. That's a massive red flag.

Next, you look at the lighting. The sun is clearly setting on the horizon, which should cast long shadows. But the politician's face is lit brightly from the front, so the light sources are completely inconsistent.

The hybrid approach is about using technology to ask the right questions and using your own critical eye to find the definitive answers. One finds patterns; the other finds proof.

By combining the tool's high-probability warning with your own concrete findings (the garbled text and the impossible lighting), you can confidently conclude the image is a fake. The technology behind these detectors is part of a massive image recognition market, valued at USD 53.3 billion in 2023. Its projected growth underscores just how vital these verification skills are becoming. For a deeper dive, you can explore market growth data from Grand View Research.

This two-step process is both efficient and incredibly thorough. It saves you from wasting time on obviously real photos while giving you a structured way to deconstruct the fakes. It's the most reliable method we have to navigate a visual world where reality and fiction are getting harder to tell apart.

Frequently Asked Questions

After getting the hang of the basic detection methods, you'll find that real-world scenarios often throw you a curveball. The tech moves fast, and the lines can get blurry. Here are some of the most common questions that pop up once you start putting these skills into practice.

Is It Possible to Be 100% Sure an Image Is AI?

The honest answer? No. Getting to 100% certainty is the holy grail, but for now, it's out of reach. Think of it like a constant cat-and-mouse game: as soon as a reliable detection method appears, the AI image generators evolve to overcome it. Their output is getting shockingly good.

The best approach is to think like an investigator. You're not looking for a single knockout punch; you're building a case. You gather clues from different tools and combine them with your own manual checks. A high probability score from a detector plus some weirdness in the background gives you a very strong indication, but it’s always an assessment of probability, not a definitive verdict.
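One way to picture that case-building mindset is a small record that collects the detector score alongside your manual findings. The sketch below is purely illustrative: the `Case` class, the 0.5 threshold, and the three strength labels are assumptions of mine, not a scoring method any tool uses.

```python
from dataclasses import dataclass, field


@dataclass
class Case:
    """Evidence gathered for one image: a detector score plus manual findings."""
    detector_score: float
    findings: list = field(default_factory=list)

    def add(self, finding):
        self.findings.append(finding)

    def summary(self, threshold=0.5):
        flagged = self.detector_score >= threshold
        if flagged and self.findings:
            strength = "strong indication of AI generation"
        elif flagged or self.findings:
            strength = "some indication of AI generation"
        else:
            strength = "no indication found so far"
        # The summary is always hedged: it is an assessment, never a verdict.
        return (f"{strength} (detector score {self.detector_score:.0%}, "
                f"{len(self.findings)} manual finding(s)); not a definitive verdict")


case = Case(detector_score=0.75)
case.add("garbled text on a background sign")
case.add("face lit from the front while the sun sets behind")
print(case.summary())
```

The wording of the summary matters more than the code. Even the strongest outcome is phrased as an indication, which matches how far the evidence can actually take you.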

Are Some AI Generators Tougher to Spot Than Others?

Oh, absolutely. The difference between AI models can be night and day.

- Top-tier models like Midjourney (especially v6 and later) or the latest from DALL-E have gotten incredibly good at fixing the classic tells. Mangled hands and nonsensical text are largely things of the past for these platforms.

- Older or more basic models, on the other hand, still tend to leave those obvious, tell-tale artifacts behind, making them much easier to call out.

What really complicates things is the human element. A skilled prompt engineer can guide a powerful AI to avoid common pitfalls. Throw in a little Photoshop cleanup, and you've got a seriously convincing fake on your hands.

The real challenge comes from a skilled user working with a state-of-the-art AI. That combination produces the most difficult images to detect.

What If I Think an Image Is AI but Can't Prove It?

When your gut is screaming "AI" but the evidence is thin, the best thing you can do is be cautious. It's all about responsible digital citizenship.

First, don't share it. This is especially critical if the image touches on sensitive subjects like news, politics, or public safety. Spreading a potential fake, even with a disclaimer, just fuels misinformation. If you’re a creator or journalist, your best bet is transparency. Let your audience know you have doubts. A quick reverse image search is also a smart move—you might find that a trusted source has already confirmed or debunked it.

If I Use AI to Edit a Real Photo, Does That Make It an "AI Image"?

This is a great question, and it really comes down to a matter of degree. The answer lands in a bit of a gray area.

If you use an AI tool for a minor fix, like using Adobe Photoshop's Generative Fill to remove a stray tourist from your vacation photo, most people wouldn't classify the whole thing as AI-generated. You've just cleaned it up.

But when the edits become substantial, that’s when it crosses a line. If you’re adding people who weren't there, inventing a whole new background, or changing the core context of the image, it’s no longer just a touched-up photo. It's a synthetic or heavily manipulated image. The key is how much the AI has changed what was real.

Ready to put your new skills to work? The AI Image Detector offers a fast, free, and private way to analyze any image. Just drag and drop a file to get a clear confidence score in seconds, helping you make smarter decisions about the content you encounter online.